The “just do questions” mantra is one of the laziest and most misleading pieces of advice in board prep culture.

You’ve heard it on Reddit, in GroupMe chats, from that one PGY-1 who barely remembers what UWorld looks like: “Bro, don’t overthink it. Just do questions and you’ll be fine.”

Here’s the problem: the students who survive with a pure “just do questions” approach are usually the ones who would’ve passed no matter what they did. Survivorship bias dressed up as wisdom.

Let’s pull this apart properly.

Where “Just Do Questions” Comes From — And Why It Half Works

There is a grain of truth here, which is why this myth refuses to die.

Active practice with board-style questions is powerful. Way more powerful than re-reading First Aid twelve times or watching passive videos into the night while your brain quietly exits your skull.

Board-style questions give you three things:

- Retrieval practice: you’re forced to pull information from memory, which strengthens it.

- Context: you see how topics are actually tested (not how you wish they’d be tested).

- Feedback: you get immediate correction and usually an explanation.

Educational research backs this up. Repeated testing and retrieval practice outperform re-reading and highlighting for long-term retention by a wide margin.

So yes, “do questions” is the right direction.

But “just do questions” as a complete strategy? As in: no structured review, no spaced repetition, no plan, no content repair?

That is fantasy.

What the Data Actually Shows About Question Banks

Let me be concrete.

Multiple studies on USMLE Step 1 and Step 2 performance show:

- Use of question banks (UWorld, AMBOSS, etc.) is consistently associated with higher scores.

- But not just “having” a QBank. Completion and engaged review matter.

- Students who use questions as a diagnostic + learning tool outperform those who treat them like a box-checking contest.

The big gap: there is essentially no serious data supporting the idea that “questions alone,” without deliberate review and content reinforcement, is optimal.

What actually predicts improvement?

Things like:

- How thoroughly explanations are reviewed.

- Whether wrong answers lead to targeted content repair.

- Whether students use spaced repetition (Anki/flashcards) to keep that knowledge alive.

- How early and consistently they start doing questions.

The “I did 8,000 questions, I’m good” flex leaves out one detail: how much of that translated into retained, usable knowledge on test day.

Share of study effort (%) by activity:

| Group | Question Practice | Explanation Review | Targeted Content Review | Random Low-Yield Stuff |
|---|---|---|---|---|
| High Scorers | 45 | 25 | 20 | 10 |
| Low Scorers | 55 | 10 | 15 | 20 |

Among higher performers, question practice is central—but it is integrated with explanation review and targeted content work. Lower performers often over-index on raw question volume and under-invest in what actually converts those questions into long-term knowledge.

The Four Big Myths Behind “Just Do Questions”

Let me go after the main myths directly.

Myth 1: “If I finish all of UWorld I’m automatically safe”

I’ve watched this play out dozens of times:

Student A: finishes 100% of UWorld in random, timed blocks at 50–60% correct, reviews a handful of explanations, “doesn’t have time” for missed topics, maybe glances at First Aid.

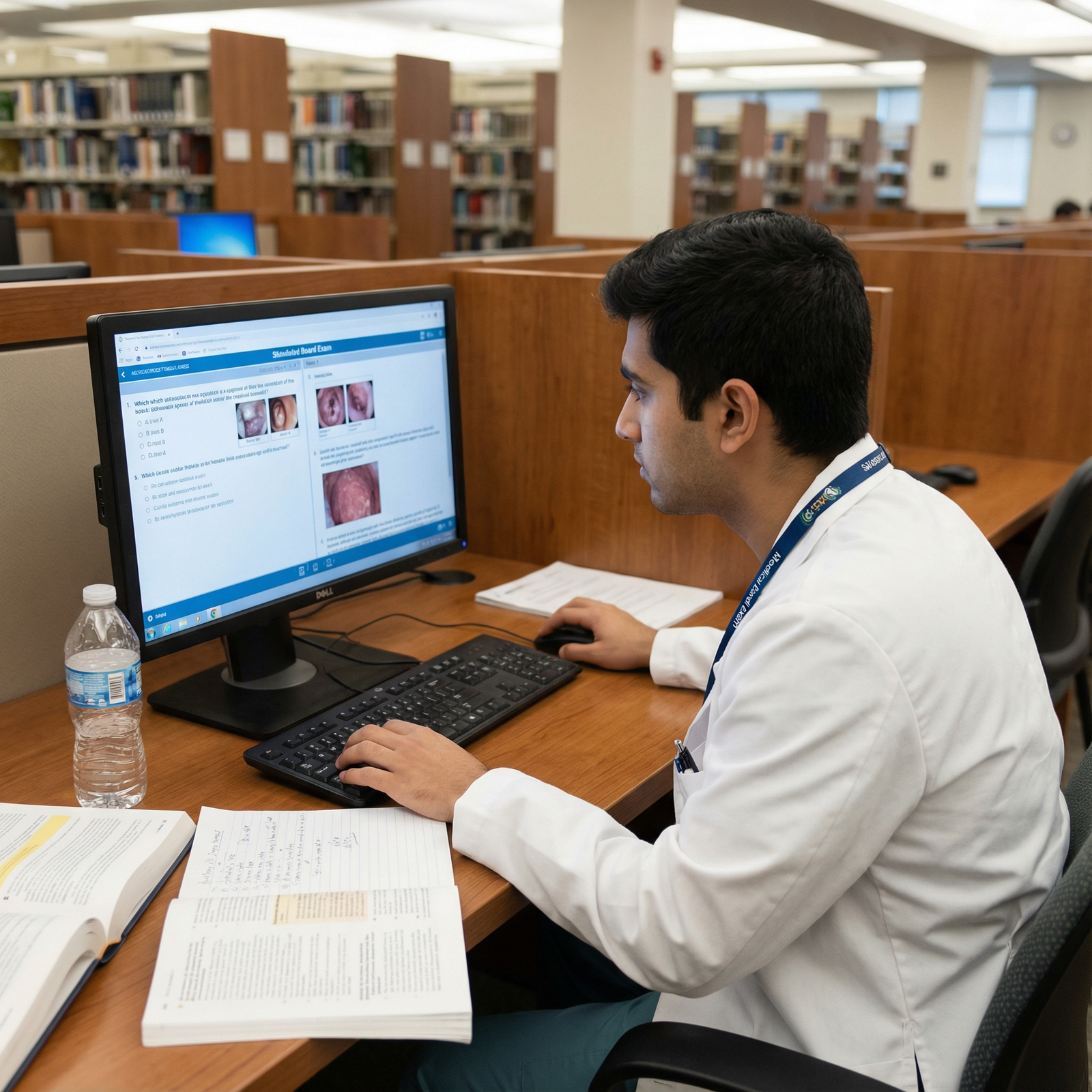

Student B: does 60–70% of UWorld, slower pace, deeply reviews every explanation, writes or updates Anki cards on missed concepts, ties questions back into structured content.

Student B almost always walks into the exam with a more stable knowledge base and less panic, even with fewer questions completed.

Finishing a QBank is not an achievement if you learned almost nothing from half the questions.

Myth 2: “Questions will teach me all the content I need”

No. They won’t.

Question banks are assessment tools first, and teaching tools second. They’re great at exposing weaknesses, decent at providing applied explanations, and terrible at giving you a clean, coherent overview of a topic from scratch.

Classic scenario I’ve seen repeatedly:

- Student bombs endocrine questions.

- Response: “I’ll just do more endocrine questions.”

- Result: they keep seeing fragmented bits of thyroid, adrenal, pituitary, but never sit down and map the full hormonal axes, feedback loops, and classic pathologies.

Questions can refine and stress-test knowledge you more or less already have. They are inefficient as your only teaching tool.

If you never pause to say, “Okay, I clearly don’t understand nephrotic vs nephritic properly; let me step away and fix the architecture,” your question performance plateaus. Then you blame the test. Or the QBank. Or “tricky wording.”

No. You built a knowledge house out of question fragments and hoped it would magically become a blueprint.

Myth 3: “More questions = higher score, linearly”

Once you hit a certain point, mindlessly adding more questions gives you diminishing returns.

What changes score is not raw volume. It’s:

- Coverage: did you touch all the primary systems and disciplines?

- Depth: did you understand why each option was right or wrong?

- Repair: did you actually fix recurring weaknesses?

I’ve seen people do 7,000+ questions between QBanks and still get wrecked because their histogram of performance never changed: renal, endocrine, biostats, ethics—weak the entire way through, never systematically rebuilt.

Meanwhile, another student does 3,000–4,000 questions with ruthless, structured review and jumps from 210-level performance to 245+.

More questions are only better if the learning per question stays high. For many students, after fatigue sets in, it nosedives.

Myth 4: “I’ll absorb patterns and that’s enough, content gaps don’t matter”

Pattern recognition helps. It’s real. But boards are increasingly written to break simple pattern-matching.

Vignettes are longer, more layered, and often force you to integrate multiple steps:

- Recognize the disease.

- Choose the next best step in management.

- Or pick the correct diagnostic test.

- Or anticipate a complication.

You cannot “vibes” your way through that if your underlying understanding is thin.

Pattern recognition is a multiplier. It multiplies a foundation. If the base is weak, you’re just guessing at nicer patterns.

What “Doing Questions Right” Actually Looks Like

Let me rewrite the slogan so it’s not dumb:

Not “just do questions.”

More like: “Do questions, then interrogate them until they teach you something permanent.”

When questions become your core strategy rather than your only one, things change.

Here’s the difference between treating a QBank as a checkbox and treating it as an engine for learning.

| Stage | Action |
|---|---|
| 1 | Do 40 Timed Questions |
| 2 | Review All Explanations |
| 3 | Flag Wrong or Low-Confidence Questions |
| 4 | Identify Concept Gap |
| 5 | Brief Targeted Content Review |
| 6 | Create/Update Flashcards |
| 7 | Note Patterns & Pitfalls |
| 8 | Spaced Repetition Later |

The important part is what happens after you click “End Block.”

The students who improve the most:

- Spend as much (or more) time on review as on doing the block.

- Look beyond the correct answer and ask: “Can I explain why each wrong answer is wrong?”

- Track repeated misses by topic and then go fix that specific topic deliberately.

- Turn missed concepts into flashcards or concise notes they will actually see again before the exam.

This is not busywork. This is how your brain turns a one-time mistake into a durable memory.
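
One way to make sure a missed concept actually resurfaces before test day is to schedule its reviews at growing intervals. The minimal Python sketch below assumes fixed gaps of 1, 3, 7, 14 and 30 days between reviews. Those gaps, and the `review_dates` helper, are hypothetical and chosen only for illustration. This is not Anki’s scheduling algorithm, which adjusts each interval based on how you grade the card.

```python
from datetime import date, timedelta

# Hypothetical expanding gaps (in days) between reviews. Real spaced-repetition
# apps such as Anki adapt intervals to your answers instead of using a fixed list.
DEFAULT_GAPS = (1, 3, 7, 14, 30)


def review_dates(missed_on: date, exam_day: date, gaps=DEFAULT_GAPS) -> list[date]:
    """Return review dates for a concept missed on `missed_on`,
    keeping only dates that fall before `exam_day`."""
    dates = []
    current = missed_on
    for gap in gaps:
        current = current + timedelta(days=gap)  # each gap counts from the previous review
        if current >= exam_day:
            break  # no point scheduling a review on or after test day
        dates.append(current)
    return dates


if __name__ == "__main__":
    missed = date(2024, 3, 1)
    exam = date(2024, 4, 1)
    for d in review_dates(missed, exam):
        print(d.isoformat())
```

Run as-is, the script prints four dates ending on 2024-03-26. The fifth review would land after the exam, so it is dropped.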

Where “Just Do Questions” Completely Fails Different Types of Students

Let me be harsh for a second: for some people, “just do questions” isn’t just suboptimal, it’s a setup for failure.

If your baseline content is weak

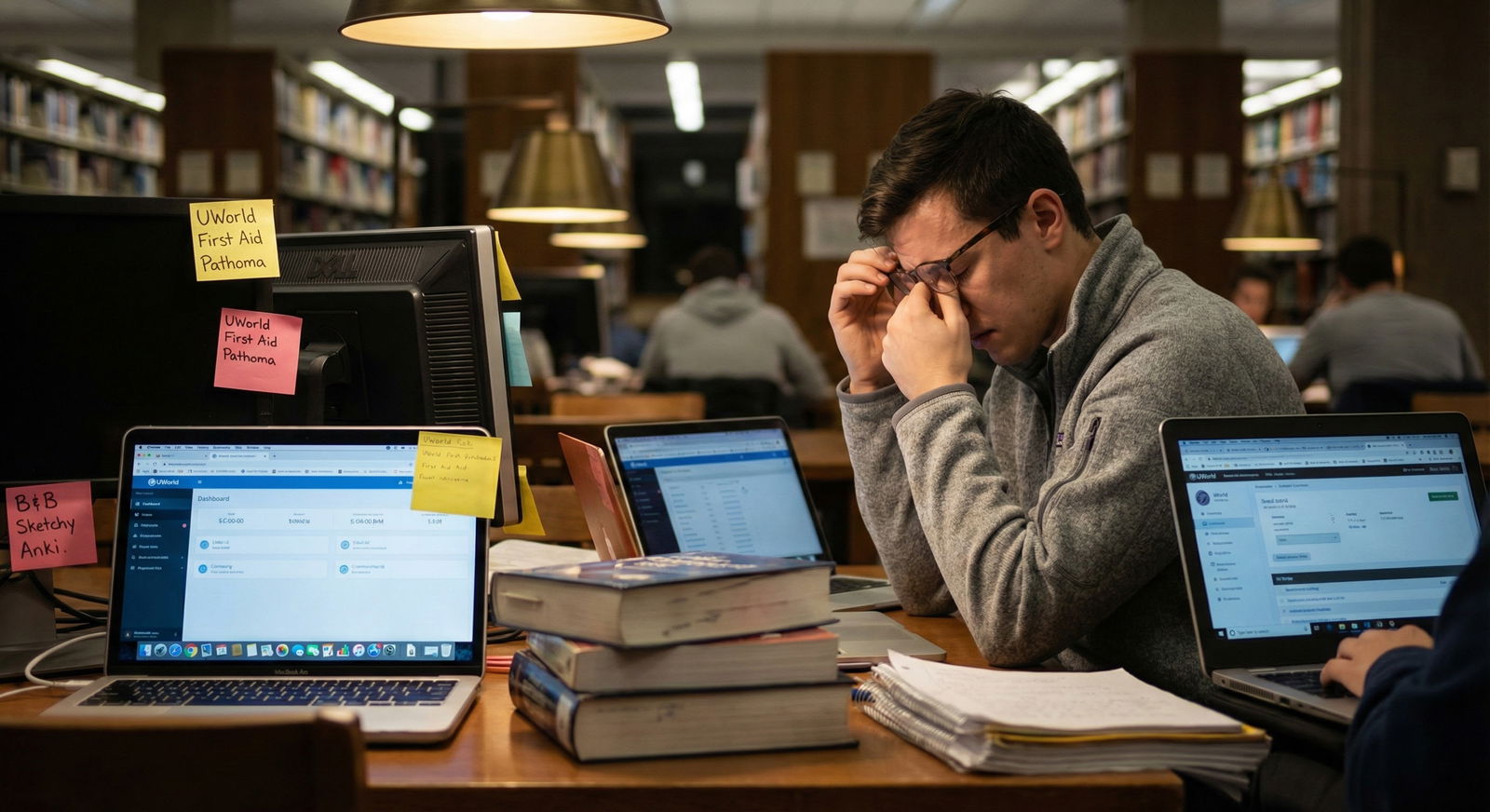

If you barely passed systems courses, or if large chunks of path, pharm, and physiology feel foggy, a pure question-based strategy becomes miserable:

- You’ll get slammed with low percentages.

- Your stress will spike.

- You’ll avoid hard topics subconsciously because they “feel bad.”

- You’ll learn some things, but painfully slowly and haphazardly.

You need scaffolding: a structured high-yield content review (Boards & Beyond, Pathoma, Sketchy, whatever your preference) at least for your weakest systems, integrated with questions—not replaced by them.

If you struggle with test anxiety or timing

“Just do questions” people often skip deliberate strategy work:

- How to triage long stems.

- How to avoid rereading entire vignettes in panic.

- How to mark and move intelligently.

Question volume helps desensitize you to the format, which is good. But if you never step back to analyze how you’re approaching questions, your efficiency doesn’t change much.

I’ve sat with students who consistently mismanage time. Their solution was “I’ll do more questions.” That’s like saying, “I’ll just run more marathons and hope my form magically corrects itself.”

If you’re aiming for a competitive specialty

A bare-minimum “I passed using questions and vibes” strategy is fine if your only goal is “pass Step 2 and match somewhere in FM or IM without caring much where.”

If you’re trying to break 250+ or gun for derm, ortho, plastics, ophtho, ENT?

Everyone in that pool is already doing questions. Volume doesn’t differentiate you. Quality of learning per question does.

The Hybrid Strategy That Actually Holds Up

Let’s be practical. What does a sane, evidence-aligned, question-centered but not question-only approach look like?

No two students are identical, but the rough structure that works again and again looks something like this:

Early Phase (pre-dedicated, during systems)

- Smaller daily dose of questions (e.g., 10–20 related to your current block).

- Immediate review with short bursts of content reinforcement.

- Parallel spaced repetition (Anki or similar) for core facts.

Ramp-Up Phase (pre-dedicated to early dedicated)

- Increase to ~40–60 questions/day.

- Mix of system-based (to patch weaknesses) and random (to build integration).

- For every block:
  - Full explanation review.
  - Label questions: “content gap,” “careless,” or “test-taking error.”
  - Address content gaps with short, targeted reviews, not 6-hour video binges.
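
The labeling step is simple bookkeeping, and a tally shows which topics keep coming back as content gaps. The minimal Python sketch below assumes you log each missed or low-confidence question by hand as a (topic, label) pair, using the three labels above. The sample log and the `top_content_gaps` and `label_breakdown` helpers are hypothetical. They are not a feature of any QBank.

```python
from collections import Counter

# The three labels from the ramp-up plan.
LABELS = {"content gap", "careless", "test-taking error"}

# Hypothetical log of missed / low-confidence questions from recent blocks.
missed_log = [
    ("renal", "content gap"),
    ("biostats", "careless"),
    ("renal", "content gap"),
    ("endocrine", "test-taking error"),
    ("endocrine", "content gap"),
    ("renal", "content gap"),
    ("biostats", "content gap"),
]


def top_content_gaps(log, n=3):
    """Count content-gap misses per topic and return the n most frequent."""
    for topic, label in log:
        if label not in LABELS:
            raise ValueError(f"unknown label {label!r} for topic {topic!r}")
    gaps = Counter(topic for topic, label in log if label == "content gap")
    return gaps.most_common(n)


def label_breakdown(log):
    """Count how many misses fall under each label."""
    return Counter(label for _, label in log)


if __name__ == "__main__":
    print(top_content_gaps(missed_log))
    print(label_breakdown(missed_log))
```

Topics at the top of the content-gap count are the ones to pull out for a short, targeted review. A pile of “careless” or “test-taking error” labels points to strategy work instead.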

Late Phase (last 2–3 weeks before exam)

- More random blocks in timed exam-like conditions.

- Shorter but still serious review, with emphasis on patterns and last-minute weak spots.

- Heavy reliance on your own notes/flashcards made from prior misses.

Here’s how that differs from “just do questions”: there is intentionality, feedback loops, and retention planning.

| Aspect | Just Do Questions | Question-Centered Hybrid |
|---|---|---|
| Primary focus | Raw question volume | Learning per question |
| Explanation review | Fast, often skipped | Systematic, prioritized |
| Content gaps | Repeatedly exposed, rarely repaired | Identified, then patched with focused review |
| Spaced repetition | Usually absent | Built from missed concepts |
| Stress trajectory | Often high and chaotic | Still stressful, but more controlled |

How to Tell If “Just Doing Questions” Is Failing You

You don’t need a complicated dashboard to know if your approach is trash. A few red flags:

- Your cumulative QBank performance is flat or dropping for weeks.

- You keep missing the same topics (biostats, renal, endocrine, ethics) with no real plan to repair them.

- You “review” explanations by skimming the green text and moving on.

- You can’t teach a missed concept to a friend the following day without re-reading the explanation.

- Your NBME/COMSAE scores don’t budge despite grinding more and more blocks.

If that’s you, more questions won’t save you. Better learning from questions might.
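
You can check the first red flag with arithmetic instead of gut feel. The minimal Python sketch below assumes you record your QBank percent-correct once per week. It fits a least-squares slope through those weekly numbers. The cutoff of 1 point per week for “flat” is a hypothetical value chosen for illustration, not an established benchmark.

```python
def weekly_slope(scores):
    """Least-squares slope of scores vs. week index (points per week)."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two weeks of data")
    weeks = range(n)
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    var = sum((x - mean_x) ** 2 for x in weeks)
    return cov / var


def looks_flat(scores, min_gain_per_week=1.0):
    """True if performance rises more slowly than the (hypothetical) threshold."""
    return weekly_slope(scores) < min_gain_per_week


if __name__ == "__main__":
    stuck = [58, 59, 57, 58, 59, 58]  # hypothetical weekly % correct
    improving = [55, 57, 60, 62, 64, 67]
    print(looks_flat(stuck), looks_flat(improving))
```

A slope fit is used here instead of comparing the first and last weeks because it is less sensitive to one unusually good or bad block.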

Example practice-score trajectories for the two approaches:

| Week | Volume-Only Question Approach | Hybrid Question + Targeted Review |
|---|---|---|
| 1 | 210 | 210 |
| 3 | 212 | 218 |
| 5 | 213 | 226 |
| 7 | 214 | 234 |
| 9 | 214 | 241 |

The Social Problem: Why This Bad Advice Survives

There’s a cultural reason “just do questions” won’t die.

It sounds simple. It feels reassuring. It lets upperclassmen and residents compress their messy, complex memory of studying into a single sentence.

Also: nobody likes admitting how much structured work they actually did. People rewrite their own history as time passes.

You’ll hear: “I just did UWorld and skimmed First Aid.”

Reality (if you rewind the tape) was probably:

- Strong preclinical grades.

- Months of light but steady Anki plus questions tied to each course block.

- One or two QBanks with real review.

- Multiple NBME forms and course exams that forced them to learn along the way.

Take all post-hoc, oversimplified advice with a grain of salt. Especially if it fits neatly into a three-word slogan.

If You Remember Nothing Else

Three points.

- “Just do questions” is directionally right but dangerously incomplete. Questions should be the backbone, not the entire body, of your board strategy.

- What drives score is not sheer question count, but how deeply you learn from each block—through explanation review, targeted content repair, and spaced repetition.

- If your QBank performance and practice scores are flat despite grinding, you do not need more questions. You need a smarter, structured way to convert each question into durable understanding.

Use questions. A lot of them. But stop pretending they’re magic on their own.