Most people wildly overestimate what clinical NLP can do with oncology notes.

They imagine a magic box that reads messy clinic letters and spits out perfect staging, trial eligibility, and prognostic scores. That is fantasy. The real picture is more interesting, more limited, and—if you understand the boundaries—actually very powerful.

Let me break this down specifically, from the perspective of what these tools can and cannot reliably extract today, and what is coming next.

1. The Reality Check: What “Clinical NLP in Oncology” Actually Means

When vendors say “AI that understands oncology notes,” they are usually doing some combination of:

- Named entity recognition (NER): pulling out drugs, cancer types, genes, measurements.

- Relation extraction: linking entities (e.g., lung cancer → stage IV; trastuzumab → HER2+).

- Temporal reasoning: constructing a loose timeline (diagnosed in 2018, recurrence in 2021).

- Document classification: labeling notes (new consult, follow‑up, survivorship, palliative).

The raw material is ugly: dictated notes, copy‑pasted impressions, scanned PDFs, outside reports half‑OCR’d, pathology cut‑and‑pastes with formatting destroyed. Clinical NLP lives in that mess.

So the key question is not “can it read notes?” The real questions are:

- What signal is reliably present in the text?

- How structured is that signal?

- How much clinical nuance is required to interpret it correctly?

Oncology is especially challenging because so much hinges on nuance: borderline resectable, oligometastatic, suspicious for progression, clinical vs pathologic stage. That nuance is where current tools break.

2. What These Tools Do Well – The “Can Extract” Bucket

There are areas where clinical NLP in oncology is genuinely strong and very usable today, assuming you have a decent deployment (not some toy demo).

2.1 Basic Cancer Identification and Site/Histo Mapping

Pulling out that a patient has “invasive ductal carcinoma of the breast” or “adenocarcinoma of the right upper lobe of lung” is now fairly standard.

Most modern systems can:

- Identify primary cancer site (breast, lung, colon, prostate, ovarian, etc.).

- Differentiate primary vs metastasis mentions with decent accuracy.

- Map text to standardized vocabularies (ICD‑O, SNOMED, sometimes even OncoTree).

You will see rules like:

- “primary” + site

- “metastatic to” / “mets to”

- “likely primary in X” / “primary unknown”
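Those cues amount to an ordered rule list. Here is a minimal sketch in Python, assuming a tiny hypothetical site list and a hypothetical `classify_site_mention` helper. Real systems use much larger lexicons and then map the site to ICD-O or SNOMED codes, which this does not attempt.

```python
from __future__ import annotations

import re

# Hypothetical, deliberately tiny site lexicon (real systems map to ICD-O/SNOMED).
SITE = r"(?P<site>breast|lung|colon|prostate|ovary|liver|bone|brain)"

# Ordered cue rules: the first rule whose pattern matches wins.
RULES = [
    ("primary_unknown", re.compile(r"\b(?:primary unknown|unknown primary)\b", re.I)),
    ("likely_primary", re.compile(rf"\blikely primary in (?:the )?{SITE}\b", re.I)),
    ("metastasis", re.compile(rf"\b(?:metastatic to|mets to) (?:the )?{SITE}\b", re.I)),
    ("primary", re.compile(rf"\bprimary {SITE}\b", re.I)),
]


def classify_site_mention(sentence: str) -> tuple[str, str | None]:
    """Return (label, site) for the first matching cue, else ('unclassified', None)."""
    for label, pattern in RULES:
        match = pattern.search(sentence)
        if match:
            site = match.groupdict().get("site")
            return label, site.lower() if site else None
    return "unclassified", None


print(classify_site_mention("Adenocarcinoma metastatic to the liver."))  # ('metastasis', 'liver')
```

The first matching rule wins, so the ordering encodes priority between overlapping cues. A CUP case phrased in some unanticipated way simply falls through to `unclassified`.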

In practice, for large‑scale registry enrichment or population dashboards, this works well enough. Will it misclassify a tricky CUP (cancer of unknown primary) case? Yes. But for 5,000‑patient analytics or approximate cohorting, it is “good enough.”

2.2 Staging Elements – Not Full Staging, but Pieces

Tools are increasingly competent at extracting the components of staging, but not at reliably calculating a TNM stage themselves.

They can often pull:

- T, N, M codes explicitly when documented (“T2N1M0”).

- Tumor measurements (“2.3 cm mass in upper outer quadrant”).

- Node involvement (“4/12 axillary lymph nodes positive”).

- Distant metastasis mentions (“hepatic metastases present,” “osseous mets in spine”).

Where you get value:

- Backfilling incomplete cancer registries.

- Flagging obviously metastatic disease for palliative care triggers.

- Pre-populating clinical trial eligibility screens with likely stage categories.

Where it breaks:

- Subtle distinctions like “clinical N1” vs “pathologic N1”.

- Cases where the staging system changed over time (AJCC 7 vs AJCC 8).

- Combined staging logic (e.g., grade, biomarkers altering stage group).

So: reliable extraction of ingredients, not the final dish.
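To make "ingredients, not the dish" concrete, here is a sketch of the extraction side only, assuming explicitly typed TNM strings. The regular expressions and the `extract_staging_elements` name are illustrative, not taken from any particular product, and the function deliberately returns pieces without computing a stage group.

```python
import re

# Optional prefix (c, p, yp, r), then T, N and an optional M category,
# e.g. "cT2N1M0", "ypT1b N0", "T2 N1 M0". Not exhaustive (no N1mi, etc.).
TNM = re.compile(
    r"\b(?P<prefix>yp|c|p|r)?T(?P<t>is|[0-4X][a-d]?)\s*N(?P<n>[0-3X][a-c]?)(?:\s*M(?P<m>[01X][a-c]?))?\b"
)
SIZE = re.compile(r"\b(?P<value>\d+(?:\.\d+)?)\s*(?P<unit>cm|mm)\b", re.I)
NODES = re.compile(
    r"\b(?P<pos>\d+)\s*/\s*(?P<total>\d+)\s+(?:\w+\s+)?(?:lymph\s+)?nodes?\s+positive\b", re.I
)


def extract_staging_elements(text: str) -> dict:
    """Pull explicit TNM strings, tumor sizes (in cm) and positive/total node counts."""
    result = {"tnm": [], "sizes_cm": [], "nodes": []}
    for m in TNM.finditer(text):
        result["tnm"].append({"prefix": m["prefix"], "T": m["t"], "N": m["n"], "M": m["m"]})
    for m in SIZE.finditer(text):
        value = float(m["value"])
        result["sizes_cm"].append(value / 10 if m["unit"].lower() == "mm" else value)
    for m in NODES.finditer(text):
        result["nodes"].append((int(m["pos"]), int(m["total"])))
    return result
```

Note that the `prefix` is kept rather than discarded: a cT2 and a pT3 in different notes are different facts, and collapsing them is exactly where downstream staging goes wrong.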

2.3 Therapies: Chemotherapy, Immunotherapy, Targeted Agents

Medication extraction is one of the clear wins.

Modern oncology NLP systems handle:

- Drug names (generic and brand): pembrolizumab / Keytruda, osimertinib / Tagrisso.

- Regimens: “FOLFOX”, “R‑CHOP”, “AC‑T”, “FOLFIRINOX”.

- Route and frequency patterns: “q3w”, “every 2 weeks”, “continuous oral daily”.

- Line of therapy cues in explicit language: “second‑line”, “adjuvant”, “neoadjuvant”.
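Most of this is lexicon work plus a few schedule patterns. A minimal sketch, assuming a small hypothetical regimen lexicon (the component lists follow the standard acronyms, but a real deployment needs local aliases and dosing detail) and a hypothetical `parse_regimen_mention` helper:

```python
from __future__ import annotations

import re

# Hypothetical lexicon: regimen acronym -> component agents.
REGIMENS = {
    "FOLFOX": ["leucovorin", "fluorouracil", "oxaliplatin"],
    "FOLFIRI": ["leucovorin", "fluorouracil", "irinotecan"],
    "FOLFIRINOX": ["leucovorin", "fluorouracil", "irinotecan", "oxaliplatin"],
    "R-CHOP": ["rituximab", "cyclophosphamide", "doxorubicin", "vincristine", "prednisone"],
    "AC-T": ["doxorubicin", "cyclophosphamide", "taxane"],  # taxane varies by protocol
}

# Longest names first, so a longer acronym is preferred over a shorter one.
REGIMEN_RE = re.compile(
    r"\b(" + "|".join(re.escape(n) for n in sorted(REGIMENS, key=len, reverse=True)) + r")\b",
    re.I,
)

# Schedule patterns normalized to a dosing interval in days.
SCHEDULES = [
    (re.compile(r"\bq(\d+)w\b", re.I), lambda m: 7 * int(m.group(1))),
    (re.compile(r"\bevery (\d+) weeks?\b", re.I), lambda m: 7 * int(m.group(1))),
    (re.compile(r"\bdaily\b", re.I), lambda m: 1),
]


def parse_regimen_mention(text: str) -> dict | None:
    """Return the first regimen mentioned, its agents, and a dosing interval if stated."""
    match = REGIMEN_RE.search(text)
    if match is None:
        return None
    name = match.group(1).upper()
    interval = None
    for pattern, to_days in SCHEDULES:
        found = pattern.search(text)
        if found:
            interval = to_days(found)
            break
    return {"regimen": name, "agents": REGIMENS[name], "interval_days": interval}
```

Anything outside the lexicon, such as a local nickname, returns `None`, which is why the struggles listed below show up so quickly at a new site.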

| Category | Approximate accuracy (%) |
|---|---|
| Cancer site | 92 |
| Drug names | 95 |
| Regimens | 88 |
| Stage elements | 78 |
| Toxicities | 70 |

Those accuracy numbers are roughly what I see in solid implementations: high 80s to mid‑90s for clear, explicit mentions; lower for nuance.

Where this is incredibly useful:

- Building longitudinal therapy timelines per patient.

- Estimating real‑world treatment patterns (how many got FOLFOX vs CAPOX).

- Surveillance of off‑label use or concordance with guidelines.

Tools struggle more with:

- Abbreviated or non‑standard regimen nicknames in local practice.

- Legacy therapies or trial‑only regimens not in training data.

- Distinguishing “considering FOLFIRI” vs “initiated FOLFIRI.”
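The planned versus started distinction usually comes down to cue words near the drug name. A sketch, assuming hypothetical cue lists and a hypothetical `therapy_status` helper that only inspects text before the regimen and picks the nearest cue:

```python
import re

# Hypothetical cue lists; real systems need many more, plus negation handling.
STATUS_CUES = [
    ("stopped", re.compile(r"\b(?:discontinued|stopped|held|completed)\b", re.I)),
    ("planned", re.compile(r"\b(?:considering|consider|plan(?:ning)? to|will start|discussed)\b", re.I)),
    ("active", re.compile(r"\b(?:started|initiated|began|continues on|tolerating)\b", re.I)),
]


def therapy_status(sentence: str, regimen: str) -> str:
    """Classify a regimen mention by the nearest status cue that precedes it."""
    idx = sentence.lower().find(regimen.lower())
    if idx == -1:
        return "not_mentioned"
    prefix = sentence[:idx]  # only text before the regimen name is inspected
    best_status, best_end = "unknown", -1
    for status, pattern in STATUS_CUES:
        for cue in pattern.finditer(prefix):
            if cue.end() > best_end:  # keep the cue closest to the drug name
                best_status, best_end = status, cue.end()
    return best_status
```

Passive constructions defeat it: "FOLFIRI held for neutropenia" returns `unknown` because the cue follows the drug name.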

2.4 Some Biomarkers and Genomics (When Explicitly Stated)

If a note says:

“Tumor is ER 90%, PR 40%, HER2 IHC 3+; FISH positive.”

Most contemporary NLP systems can grab:

- ER/PR status and percentages.

- HER2 status (positive/negative/equivocal).

- PD‑L1 expression cutoffs (“TPS 50%”, “CPS 10”).

- Common mutations when written clearly: “EGFR exon 19 deletion,” “ALK rearrangement,” “BRAF V600E”.
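For the explicit breast example above, extraction is a few patterns plus a status rule. This sketch assumes a hypothetical `parse_breast_biomarkers` helper and uses a deliberately simplified IHC-first HER2 rule (3+ positive, 0 or 1+ negative, 2+ resolved by ISH when reported). It is not the full ASCO/CAP algorithm and ignores HER2-low.

```python
import re

ER_PR = re.compile(r"\b(?P<marker>ER|PR)\s*(?P<pct>\d{1,3})\s*%", re.I)
HER2_IHC = re.compile(r"\bHER2\s*(?:IHC\s*)?(?P<score>[0-3])\+?(?!\d)", re.I)
ISH = re.compile(r"\b(?:FISH|ISH)\s*(?P<result>not amplified|amplified|positive|negative)\b", re.I)


def parse_breast_biomarkers(text: str) -> dict:
    """Extract ER/PR percentages and a simplified HER2 call from explicit IHC/ISH text."""
    out = {}
    for m in ER_PR.finditer(text):
        out[f"{m['marker'].upper()}_pct"] = int(m["pct"])
    ihc = HER2_IHC.search(text)
    ish_match = ISH.search(text)
    score = int(ihc["score"]) if ihc else None
    ish = None
    if ish_match:
        ish = "positive" if ish_match["result"].lower() in ("positive", "amplified") else "negative"
    # Simplified IHC-first logic: 3+ positive, 0/1+ negative, 2+ resolved by ISH if present.
    if score == 3:
        status = "positive"
    elif score == 2:
        status = ish or "equivocal"
    elif score in (0, 1):
        status = "negative"
    else:
        status = ish or "unknown"
    out.update({"HER2_IHC": score, "HER2_ISH": ish, "HER2_status": status})
    return out


print(parse_breast_biomarkers("Tumor is ER 90%, PR 40%, HER2 IHC 3+; FISH positive."))
# {'ER_pct': 90, 'PR_pct': 40, 'HER2_IHC': 3, 'HER2_ISH': 'positive', 'HER2_status': 'positive'}
```

Rephrase the same facts as "ER positive (90%)" and the percentage pattern misses it, which is the brittleness described below in miniature.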

Where it works best:

- Routine IHC markers in breast, gastric, lung.

- Single common actionables: EGFR, ALK, ROS1, BRAF, KRAS, NRAS.

Where it immediately drops off:

- Complex genomic panels with 20+ variants, CNVs, fusions.

- Ambiguous or technically worded NGS reports OCR’d from PDFs.

- Interpretations like “VUS in BRCA1” vs “pathogenic BRCA1 mutation.”

I have seen systems that do moderately well on FoundationOne or Caris‑style reports—but they are heavily customized and brittle. Change the report template and performance tanks.

2.5 Key Events and Temporal Anchors

Extracting events—diagnosis, surgery, radiation, recurrence, progression—by date is feasible if the documentation is semi‑sane.

Examples of what tools can usually pull:

- Initial diagnosis date (often from phrases like “diagnosed in March 2019”).

- Surgery dates and procedure types (“right mastectomy 07/12/2020”).

- Radiation courses (“completed adjuvant radiation Aug–Oct 2020”).

- First recurrence/progression mentions (“developed pulmonary recurrence in 2022”).
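Date normalization is where much of the timeline error originates. A sketch, assuming US-style MM/DD/YYYY numeric dates and a hypothetical `normalize_date` helper that returns the date together with its precision (day, month, or year):

```python
from __future__ import annotations

import re
from datetime import date

MONTHS = {name: i for i, name in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun", "jul", "aug", "sep", "oct", "nov", "dec"], start=1)}

NUMERIC = re.compile(r"\b(\d{1,2})/(\d{1,2})/(\d{4})\b")
MONTH_YEAR = re.compile(r"\b(" + "|".join(MONTHS) + r")[a-z]*\.?\s+(\d{4})\b", re.I)
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


def normalize_date(text: str) -> tuple[str, str] | None:
    """Return (date string, precision) for the most precise date form found.

    Assumes US-style MM/DD/YYYY for numeric dates; impossible dates are skipped.
    """
    m = NUMERIC.search(text)
    if m:
        month, day, year = (int(g) for g in m.groups())
        try:
            return date(year, month, day).isoformat(), "day"
        except ValueError:
            pass  # e.g. a DD/MM date such as 25/12/2020
    m = MONTH_YEAR.search(text)
    if m:
        return f"{m.group(2)}-{MONTHS[m.group(1).lower()]:02d}", "month"
    m = YEAR.search(text)
    if m:
        return m.group(0), "year"
    return None
```

Ranges are a good example of quiet failure: "Aug–Oct 2020" comes back as `("2020-10", "month")`, so the start of the radiation course is lost unless range handling is added.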

| Phase | Date | Event |
|---|---|---|
| Initial phase | 2018-03 | Diagnosis |
| Initial phase | 2018-04 | Surgery |
| Adjuvant therapy | 2018-05 | Chemotherapy start |
| Adjuvant therapy | 2018-09 | Chemotherapy end |
| Adjuvant therapy | 2018-10 | Radiation |
| Disease course | 2020-01 | First recurrence |
| Disease course | 2021-06 | Second-line therapy start |

This unlocks:

- Cohort building: “time from diagnosis to first recurrence.”

- Real‑world outcome metrics from routine care notes.

- Basic disease‑trajectory analytics.
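Once events like those in the timeline above are extracted and dated, an interval such as time from diagnosis to first recurrence is simple arithmetic. A sketch, assuming month-precision dates are pinned to the first of the month and a hypothetical `time_to_first_recurrence` helper:

```python
from __future__ import annotations

from datetime import date

DAYS_PER_MONTH = 365.25 / 12  # average Gregorian month, 30.4375 days


def time_to_first_recurrence(events: list[tuple[str, date]]) -> float | None:
    """Months from the earliest diagnosis to the first later recurrence; None if missing or censored."""
    diagnoses = sorted(d for kind, d in events if kind == "diagnosis")
    if not diagnoses:
        return None
    dx = diagnoses[0]
    recurrences = sorted(d for kind, d in events if kind == "recurrence" and d > dx)
    if not recurrences:
        return None  # no recurrence observed in the notes (censored)
    return round((recurrences[0] - dx).days / DAYS_PER_MONTH, 1)


events = [
    ("diagnosis", date(2018, 3, 1)),
    ("surgery", date(2018, 4, 1)),
    ("recurrence", date(2020, 1, 1)),
]
print(time_to_first_recurrence(events))  # 22.0
```

It also shows the multiple-primaries trap: the earliest diagnosis in the list is simply assumed to belong to the cancer that recurred.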

Errors creep in when:

- Old history is recounted imprecisely (“had breast cancer many years ago…”).

- Multiple primaries exist (breast in 2010, colon in 2020).

- Oncologists’ notes copy and rephrase prior events inconsistently.

Even then, for population‑level trends, the timelines are typically usable.

2.6 Document and Patient‑Level Classification

Classification is probably the least sexy but most reliable use:

- Note type: new consult vs follow‑up vs post‑op vs survivorship.

- Palliative intent vs curative intent (when explicitly stated).

- Active disease vs surveillance phase.

- Tumor board discussed vs not.

These are high‑value for workflow routing, registry flagging, or quality programs.
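When intent is written explicitly, the classifier can be almost trivial, which is why this category is reliable. A sketch, assuming hypothetical phrase patterns and a hypothetical `classify_intent` helper that refuses to guess when nothing explicit is present:

```python
import re

# Only explicit statements count; anything implied is left as "not_stated".
INTENT = {
    "palliative": re.compile(r"\bpalliative[ -]intent\b|\bintent:\s*palliative\b", re.I),
    "curative": re.compile(r"\bcurative[ -]intent\b|\bintent:\s*curative\b", re.I),
}


def classify_intent(note: str) -> str:
    """Return 'palliative', 'curative', 'conflicting', or 'not_stated'."""
    found = sorted(label for label, pattern in INTENT.items() if pattern.search(note))
    if len(found) == 1:
        return found[0]
    return "conflicting" if found else "not_stated"
```

Returning `conflicting` rather than picking a winner matters, because one note often carries both the original curative plan and the current palliative one. The patterns do no negation handling, so "not curative intent" would still be read as curative.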

3. Where Clinical NLP Struggles – The “Cannot Reliably Extract” Bucket

Now the part people do not like to hear.

There are specific categories of oncology information that current NLP tools are very bad at, or at best inconsistent. If your vendor claims they do these perfectly from free‑text notes alone, be skeptical.

3.1 Nuanced, Composite TNM Staging and Stage Group

Can an NLP model pull “T2N1M0” when typed? Usually yes. Can it compute the AJCC stage group (Stage IIA vs IIB vs IIIA) across:

- Multiple notes

- Evolving staging manuals

- Clinical vs pathologic prefixes (cT, pN)

- Odd cases like ypT staging post‑neoadjuvant?

Not reliably.

The full stage group depends on:

- Tumor size, depth, invasion details.

- Number and location of nodes.

- Specific metastasis locations.

- Sometimes grade, molecular markers, or risk factors.

- Whether staging is clinical, pathologic, or post‑therapy.

NLP can extract many of the pieces, but assembling them consistently, at scale, across all tumor types and AJCC versions is fragile. I have seen systems that get staging decent in breast and colon, then fall apart in head and neck or sarcoma.

If you need regulatory‑grade staging (e.g., for a cancer registry or trial stratification), you still need structured fields and/or human validation. NLP is an assistant here, not an authority.
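In practice the assistant role looks like this: collect the extracted pieces, group them by staging basis, and route anything ambiguous to a human rather than forcing a stage group. A sketch, assuming a hypothetical `StageMention` record and `reconcile` helper; it intentionally contains no AJCC stage tables.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StageMention:
    note_id: str
    basis: str    # "c" clinical, "p" pathologic, "yp" post-neoadjuvant
    edition: str  # e.g. "AJCC8"; "unknown" when the note does not say
    t: str
    n: str
    m: str


def reconcile(mentions: list[StageMention]) -> dict:
    """Group TNM values by staging basis and flag anything that needs a human.

    Deliberately computes no stage group: that needs tumor- and edition-specific tables.
    """
    by_basis: dict[str, set] = {}
    for mention in mentions:
        by_basis.setdefault(mention.basis, set()).add((mention.t, mention.n, mention.m))
    reasons = [
        f"conflicting {basis}TNM values: {sorted(values)}"
        for basis, values in by_basis.items()
        if len(values) > 1
    ]
    editions = {mention.edition for mention in mentions}
    if "unknown" in editions or len(editions) > 1:
        reasons.append(f"AJCC edition unclear: {sorted(editions)}")
    return {
        "by_basis": {basis: sorted(values) for basis, values in by_basis.items()},
        "needs_review": bool(reasons),
        "reasons": reasons,
    }
```

Here cT2 and pT3 in different notes are not a conflict (they are different bases), but two different clinical T values, or an unstated AJCC edition, send the case to review.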

3.2 Implicit Line of Therapy and Treatment Intent

Explicit phrases like “first‑line treatment” or “adjuvant chemotherapy” are easy. The nightmare is when intent and line of therapy are implied.

Example:

“Patient initially received FOLFOX after resection, then upon progression in liver, started FOLFIRI plus bevacizumab.”

What does the system need to infer?

- That FOLFOX post‑resection = adjuvant, first‑line.

- That progression in liver marks failure of first‑line.

- That FOLFIRI + bevacizumab = second‑line, palliative.

Humans do that in a second because we integrate prior notes, imaging, and staging context subconsciously. NLP systems need explicit rules or huge amounts of labeled data, and even then break on edge cases:

- Reused regimens after long disease‑free intervals.

- Switches due to toxicity, not progression.

- Maintenance therapies after induction.

So: NLP gives a messy but useful approximation of treatment lines. Good for population analytics. Too noisy to fully trust for individual clinical decision making without review.
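One way to get that approximation is to work from structured regimen episodes and apply explicit rules. The rules and the 180-day re-challenge threshold below are hypothetical, chosen only to mirror the edge cases listed above. Conventions also differ on whether adjuvant therapy counts as a line at all; this sketch counts it, matching the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date

# Hypothetical threshold: the same regimen restarted after this gap counts as a new line.
RECHALLENGE_GAP_DAYS = 180


@dataclass
class Episode:
    regimen: str
    start: date
    end: date
    reason: str  # why this episode started: "initial", "progression", "toxicity", "maintenance", "restart"


def assign_lines(episodes: list[Episode]) -> list[tuple[str, int]]:
    """Number lines of therapy under simplified, hypothetical rules (not a validated algorithm)."""
    result = []
    line = 0
    previous = None
    for ep in sorted(episodes, key=lambda e: e.start):
        if previous is None or ep.reason == "progression":
            line += 1  # first treatment, or a switch driven by progression
        elif ep.reason in ("toxicity", "maintenance"):
            pass  # toxicity switch or maintenance after induction stays in the same line
        elif ep.regimen == previous.regimen and (ep.start - previous.end).days > RECHALLENGE_GAP_DAYS:
            line += 1  # re-challenge after a long treatment-free interval
        # Any other case keeps the current line number.
        result.append((ep.regimen, line))
        previous = ep
    return result


episodes = [
    Episode("FOLFOX", date(2019, 1, 1), date(2019, 6, 30), "initial"),
    Episode("FOLFIRI+bevacizumab", date(2020, 3, 1), date(2020, 9, 30), "progression"),
    Episode("capecitabine+bevacizumab", date(2020, 10, 1), date(2021, 2, 1), "maintenance"),
    Episode("capecitabine+bevacizumab", date(2021, 12, 1), date(2022, 3, 1), "restart"),
]
print(assign_lines(episodes))
# [('FOLFOX', 1), ('FOLFIRI+bevacizumab', 2), ('capecitabine+bevacizumab', 2), ('capecitabine+bevacizumab', 3)]
```

Every rule here depends on a `reason` that someone or something had to infer correctly, which is where the noise comes from.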

3.3 Prognosis, Goals‑of‑Care, and Patient Preferences

People keep trying to get NLP to “read” prognosis and goals of care from notes. Outcome: partial, brittle, high‑risk.

What it can spot reasonably:

- Explicit DNR/DNI orders mentioned in free‑text.

- “Hospice” or “transitioned to comfort care”.

- Phrases like “prognosis poor” or “limited life expectancy”.

What it cannot handle well:

- Nuanced conversations (“Patient understands seriousness but wishes to pursue aggressive treatment for now.”).

- Shifting preferences over time.

- Family‑driven vs patient‑driven wishes.

- Cultural context, ambivalence, or denial.

The biggest danger here is automation bias. A flag saying “palliative goals” based on one line in one note can be flat‑out wrong 3 months later. Good teams use these signals as soft triage, not absolute truth.
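One practical guard against stale flags is to keep the date of the latest supporting note and show its age alongside the flag. A sketch, assuming hypothetical phrase patterns, a hypothetical 90-day freshness window, and a hypothetical `goc_flags` helper:

```python
from __future__ import annotations

import re
from datetime import date

GOC_PATTERNS = {
    "dnr_dni": re.compile(r"\b(?:DNR|DNI)\b|\bdo not resuscitate\b", re.I),
    "hospice_comfort": re.compile(r"\bhospice\b|\bcomfort care\b", re.I),
    "poor_prognosis": re.compile(r"\bprognosis (?:is )?poor\b|\blimited life expectancy\b", re.I),
}

# Hypothetical freshness window: older flags are reported as stale, not current.
MAX_AGE_DAYS = 90


def goc_flags(notes: list[tuple[date, str]], today: date) -> dict[str, dict]:
    """Return, per flag, the most recent note date that mentions it and whether that is stale."""
    latest: dict[str, date] = {}
    for note_date, text in notes:
        for flag, pattern in GOC_PATTERNS.items():
            if pattern.search(text) and (flag not in latest or note_date > latest[flag]):
                latest[flag] = note_date
    return {
        flag: {"last_seen": seen.isoformat(), "stale": (today - seen).days > MAX_AGE_DAYS}
        for flag, seen in latest.items()
    }
```

Note what it cannot do: "Discussed hospice; patient declines for now" still raises the hospice flag, which is exactly why these signals belong in soft triage.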

3.4 True Radiology and Pathology Understanding from Text Alone

Text reports for radiology and pathology are lossy summaries of rich, complex findings. NLP can:

- Extract named lesions, organs, sizes, and some qualifiers (“suspicious for”, “consistent with”).

- Identify pathologic features (“lymphovascular invasion present”, “positive margins”, “high grade”).

But two serious limitations:

Negation and uncertainty wreak havoc.

- “No evidence of liver metastases.”

- “Cannot exclude small peritoneal implants.”

- “Likely reactive nodes, malignancy less favored.”

Most systems do rule‑based negation and hedge detection, but borderline phrases (“not clearly seen”, “may represent”) are error-prone.
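A minimal NegEx-style sketch shows both the mechanism and the failure mode. It assumes tiny hypothetical trigger lists, a six-token scope window, and a hypothetical `assertion_status` helper; the published NegEx trigger lexicons and later variants cover far more phrasing.

```python
from __future__ import annotations

import re

# Tiny illustrative trigger lists in the spirit of NegEx; real lexicons are far larger.
NEGATION_PRE = ["no evidence of", "negative for", "without", "no"]
UNCERTAIN_PRE = ["cannot exclude", "may represent", "possible", "suspicious for", "likely"]
UNCERTAIN_POST = ["cannot be excluded", "less favored", "not excluded"]
TERMINATORS = re.compile(r"\b(?:but|however)\b|[;.]", re.I)
WINDOW = 6  # max tokens between a pre-trigger and the target


def _has_trigger(text: str, triggers: list[str]) -> bool:
    return any(re.search(rf"\b{re.escape(t)}\b", text) for t in triggers)


def assertion_status(sentence: str, target: str) -> str:
    """Return 'negated', 'uncertain', 'affirmed', or 'absent' for one target phrase."""
    lower = sentence.lower()
    idx = lower.find(target.lower())
    if idx == -1:
        return "absent"
    # Scope: tokens before the target back to the nearest terminator, capped at WINDOW.
    window = " ".join(TERMINATORS.split(lower[:idx])[-1].split()[-WINDOW:])
    # Post-triggers: text after the target up to the next terminator.
    after = TERMINATORS.split(lower[idx + len(target):])[0]
    # Uncertainty wins over negation when both cues are present (a conservative choice).
    if _has_trigger(window, UNCERTAIN_PRE) or _has_trigger(after, UNCERTAIN_POST):
        return "uncertain"
    if _has_trigger(window, NEGATION_PRE):
        return "negated"
    return "affirmed"
```

The borderline phrases are the telling case: "Liver lesion not clearly seen." comes back `affirmed`, because no trigger in these lists covers it.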

Global interpretation is weak.

Determining “overall radiologic progression” on subtle serial reports is hard even for radiologists. NLP reading only the impression paragraph will misclassify borderline cases.

For deep interpretation of imaging/path, you really need image‑level AI or structured synoptic reporting. Text‑only NLP will always be second‑rate here.

3.5 Toxicity Grading and Symptom Burden

Another popular ask: “Have NLP extract CTCAE‑graded toxicities and patient‑reported outcomes (PROs) from the notes.” Reality: partial, noisy.

What tools can often pick up:

- Obvious toxicities explicitly labeled (“grade 3 neutropenia”, “severe diarrhea”, “immune‑mediated hepatitis”).

- Classic symptom terms: nausea, vomiting, pain, neuropathy, fatigue.

What they rarely do well:

- Accurate grading based on functional language (“needs IV hydration”, “limited self‑care”, “bed‑bound”).

- Distinguishing treatment‑related vs disease‑related vs unrelated symptoms.

- Establishing causality and temporal linkage to a specific drug.

You may get reasonable signals for “serious toxicity event occurred here.” Granular CTCAE grading at scale from routine clinic prose? Not yet.
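The reliable part is narrow: explicit numeric grades. A sketch, assuming a hypothetical `explicit_grades` helper that only accepts grades written as "grade N", "gr N", or "GN":

```python
from __future__ import annotations

import re

# Explicit grades only, e.g. "grade 3 neutropenia", "G2 immune-mediated hepatitis".
GRADED = re.compile(
    r"\b(?:grade|gr\.?|G)\s*(?P<grade>[1-5])\s+(?P<term>[a-z][a-z\- ]*?)(?=[,.;]|$| and )",
    re.I,
)


def explicit_grades(text: str) -> list[tuple[int, str]]:
    """Return (grade, term) pairs only where a numeric grade is written explicitly."""
    return [(int(m["grade"]), m["term"].strip().lower()) for m in GRADED.finditer(text)]
```

Severity adjectives ("severe fatigue") and functional descriptions ("needs IV hydration", "bed-bound") return nothing. For a text-only extractor that is the honest answer, since mapping them to CTCAE grades needs criteria the prose rarely states.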

3.6 Fine‑Grained Trial Eligibility from Notes Alone

This is where sales slides become almost comical.

Full clinical trial eligibility extraction requires details like:

- Prior lines of specific therapies.

- Washout periods.

- Organ function labs at defined time windows.

- Specific metastasis patterns (e.g., no leptomeningeal disease).

- ECOG performance status at screening.

Text notes might mention pieces of this, but:

- Lab values are in structured fields, not text.

- ECOG is inconsistently documented (“doing OK”, “ambulates with cane”).

- Prior therapies are scattered across consult notes and infusion flowsheets.

NLP helps by:

- Listing known cancer type, stage, common mutations.

- Flagging obvious blockers (brain mets, prior CAR‑T, transplant).

- Summarizing treatment history roughly.

But no serious center relies on note‑only NLP to decide who is trial‑eligible. Best systems integrate structured data + NLP + human screening.
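That integration can be sketched as a triage function that may exclude or route to review but never declares a patient eligible. The `Candidate` fields, the example criteria, and the `prescreen` helper are all hypothetical:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Candidate:
    patient_id: str
    cancer_type: str | None = None                       # NLP-derived
    biomarkers: set[str] = field(default_factory=set)    # NLP-derived
    nlp_blockers: set[str] = field(default_factory=set)  # NLP-flagged exclusions
    ecog: int | None = None                              # structured field, if documented


# Hypothetical trial criteria, for illustration only.
CRITERIA = {
    "cancer_type": "NSCLC",
    "required_biomarker": "EGFR exon 19 deletion",
    "hard_exclusions": {"leptomeningeal disease", "prior CAR-T"},
    "max_ecog": 1,
}


def prescreen(c: Candidate, criteria: dict) -> tuple[str, list[str]]:
    """Return ('exclude' | 'review', reasons). Never 'eligible': a human screener decides that."""
    if c.cancer_type is not None and c.cancer_type != criteria["cancer_type"]:
        return "exclude", [f"cancer type is {c.cancer_type}"]
    blocked = sorted(c.nlp_blockers & criteria["hard_exclusions"])
    if blocked:
        return "exclude", [f"blocker mentioned in notes: {b}" for b in blocked]
    if c.ecog is not None and c.ecog > criteria["max_ecog"]:
        return "exclude", [f"ECOG {c.ecog} above limit"]
    reasons = []
    if c.cancer_type is None:
        reasons.append("cancer type not extracted")
    if criteria["required_biomarker"] not in c.biomarkers:
        reasons.append("required biomarker not found in notes")
    if c.ecog is None:
        reasons.append("ECOG not documented in structured data")
    return "review", reasons
```

Absence in the notes is treated as "verify", not "fail": a biomarker missing from the text may simply live in an outside report.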

4. Why Oncology Notes Are Especially Hard for NLP

Oncology is not the easiest playground for clinical NLP. A few structural issues:

4.1 Heterogeneous Documentation Styles

Breast clinic at one academic center vs community GU oncologist vs heme malignancy at a tertiary center. Completely different templates, jargon, and habits.

Some examples I have actually seen:

- “cT2N1 ER+/HER2‑ IDC, s/p lumpectomy, adjuvant AC‑T ongoing.”

- “Right breast Ca, LN+, HR+, HER2‑; got chemo, now on hormone tabs.”

- “Malignant neoplasm R breast, stage 2, lumpectomy done; on pill.”

Same patient archetype. Three wildly different documentation styles.

NLP generalization suffers because it learns local patterns. When it moves to a new institution, performance drops until it is re‑tuned.

4.2 Concept Drift Over Time

Guidelines change. Terminology shifts. AJCC editions roll. Drugs come and go.

- “Triple‑negative” used to be rare language; now it is routine.

- HER2‑low is a new category with real impact. Five years ago, nobody talked about it.

- PD‑L1 assay terms evolved; older notes may say “PDL1 50%” without TPS/CPS distinction.

Any model trained on a static snapshot will slowly rot unless you maintain and retrain it with fresh data that reflects current practice.

4.3 Ambiguity and Hedge Language

Oncologists hedge constantly. For good reason.

You see phrases like:

- “Possible early progression.”

- “Findings may represent treatment effect versus recurrence.”

- “Borderline resectable disease.”

From a computational standpoint, that is poison. It is neither clearly present nor clearly absent. Systems either drop these mentions or classify them inconsistently.

For safety, most commercial systems lean conservative: they treat many hedged mentions as non‑events unless multiple notes confirm them, which means early progression signals get missed.
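The cost of that conservative policy is easy to quantify. A sketch, assuming per-note assertion labels from an upstream negation and hedge step, and a hypothetical `confirmed_progression` rule that ignores hedged mentions and waits for two affirming notes:

```python
from __future__ import annotations

from datetime import date


def confirmed_progression(mentions: list[tuple[date, str]], min_confirmations: int = 2) -> date | None:
    """Date on which progression is accepted under a conservative rule, or None.

    `mentions` holds (note date, assertion) pairs, with assertion 'affirmed' or
    'uncertain'. Hedged ('uncertain') mentions never count toward confirmation.
    """
    affirmed = sorted(d for d, status in mentions if status == "affirmed")
    if len(affirmed) < min_confirmations:
        return None
    return affirmed[min_confirmations - 1]


mentions = [
    (date(2023, 1, 5), "uncertain"),  # e.g. "possible early progression"
    (date(2023, 2, 9), "uncertain"),  # e.g. "may represent treatment effect versus recurrence"
    (date(2023, 4, 1), "affirmed"),
    (date(2023, 5, 3), "affirmed"),
]
first_signal = min(d for d, _ in mentions)
accepted = confirmed_progression(mentions)
print((accepted - first_signal).days)  # 118
```

In that example the first hedged signal precedes the accepted progression date by 118 days.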

5. Practical Use Cases That Actually Work Today

Let us get concrete. Here is where clinical NLP on oncology notes is worth the money right now.

| Use Case | Reliability Level | Primary Benefit |
|---|---|---|
| Registry backfill (site, stage) | Medium-High | Reduce manual abstraction burden |
| Treatment pattern analytics | High | Real-world evidence, outcomes |
| Trial pre-screening triage | Medium | Shortlist candidates for review |
| Palliative/hospice flags | Medium | Earlier supportive care referral |
| Quality metrics (e.g., adjuvant) | Medium-High | Measure guideline concordance |
| Tumor board case preparation | Medium | Summarize key history automatically |

Notice what is missing: fully automated individualized treatment decisions. Prognostic scoring from prose alone. Real‑time trial enrollment with no human in the loop. Those are still mostly science projects.

6. What Is Coming Next: Foundation Models and Multimodal Oncology

The field is not static. The arrival of large language models (LLMs) and multimodal architectures is starting to change the ceiling here—but in a way that still needs guardrails.

6.1 LLMs: Better Extraction, Same Hard Boundaries

Large clinical LLMs fine‑tuned on oncology corpora do substantially better at:

- Normalizing messy free text into structured fields.

- Handling synonymy and abbreviations.

- Doing light reasoning over multiple sentences.

You can prompt an LLM with:

“Extract for this patient: primary cancer, stage (if stated), major treatments with approximate start dates, and current status.”

And it will generate a decent summary. That is powerful for chart review and tumor board prep. It is also non‑deterministic, can hallucinate, and is hard to validate at population scale.

In production, most serious groups do hybrid systems:

- Deterministic, validated pipelines (regex + ML) for high‑value, high‑risk fields.

- LLMs in a “copilot” role for summaries, draft abstractions, and low‑risk exploration.
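A common hybrid pattern is to treat the LLM output as a draft that must parse, match a schema, and agree with the validated pipeline on high-risk fields. A sketch, assuming the LLM was asked for JSON with hypothetical field names mirroring the prompt above. No real LLM API is called; the raw string stands in for the model's reply.

```python
from __future__ import annotations

import json

# Hypothetical schema mirroring the prompt; "stage" may be null when not stated.
REQUIRED = {
    "primary_cancer": str,
    "stage": (str, type(None)),
    "treatments": list,
    "current_status": str,
}
HIGH_RISK = ("stage",)  # fields that must agree with the validated deterministic pipeline


def accept_llm_abstraction(raw: str, deterministic: dict) -> tuple[dict | None, list[str]]:
    """Parse and check an LLM's JSON draft. Any returned issue means human review."""
    try:
        draft = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(draft, dict):
        return None, ["top-level JSON value is not an object"]
    issues = []
    for key, expected_type in REQUIRED.items():
        if key not in draft:
            issues.append(f"missing field: {key}")
        elif not isinstance(draft[key], expected_type):
            issues.append(f"wrong type for field: {key}")
    for key in HIGH_RISK:
        if key in deterministic and draft.get(key) != deterministic[key]:
            issues.append(f"{key} disagrees with pipeline: {draft.get(key)!r} vs {deterministic[key]!r}")
    return draft, issues
```

Any issue sends the draft to a human; nothing here tries to repair or re-prompt the model.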

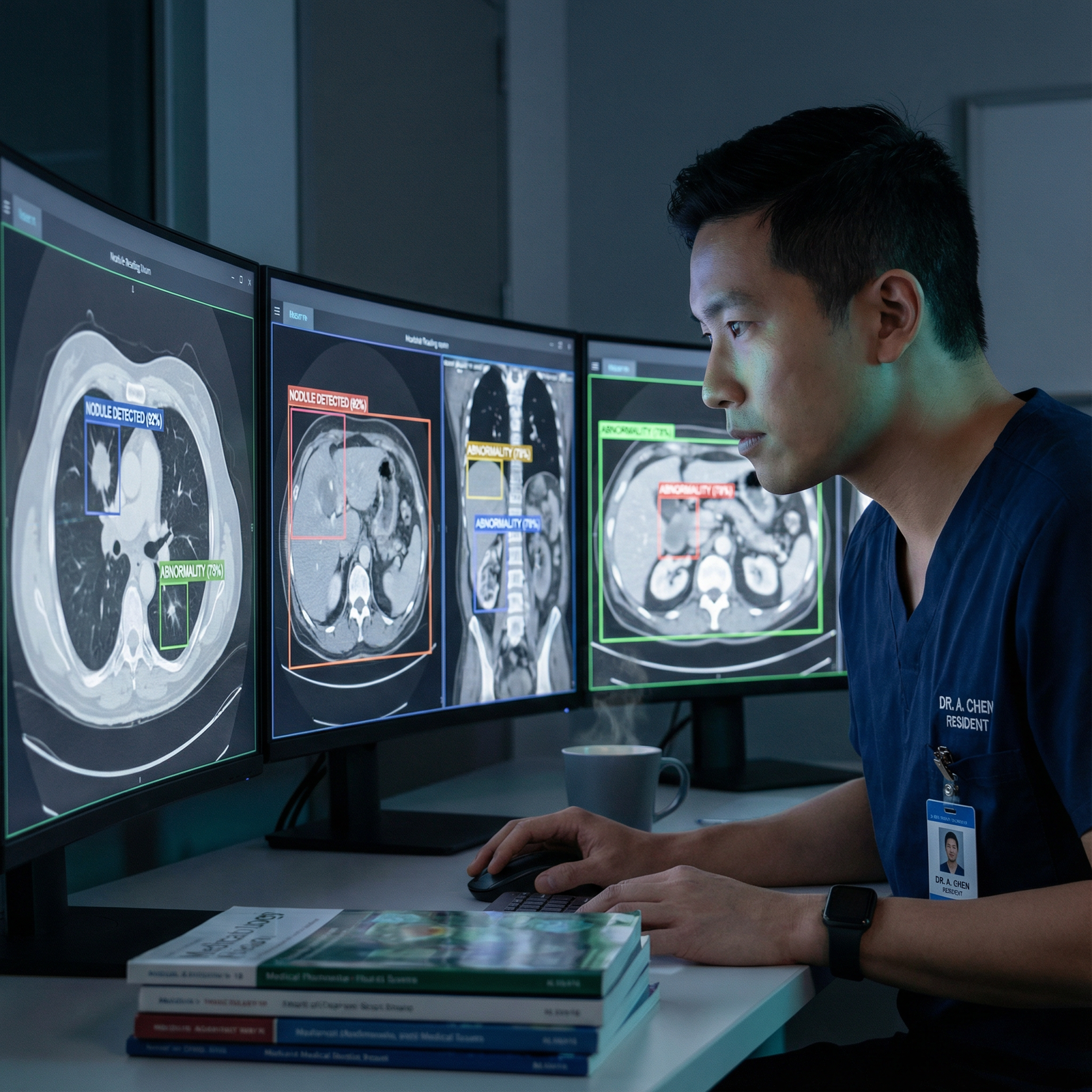

6.2 Multimodal: Notes + Labs + Imaging + Genomics

The real future of oncology NLP is not NLP alone. It is multimodal clinical AI, where text is one piece among:

- Structured EHR data (labs, meds, diagnoses).

- Imaging models reading CT/MRI/PET directly.

- Genomic interpreters parsing raw variant calls.

- Path AI reading digitized slides.

In that world, free text is:

- A source of nuance and context (why a therapy was changed).

- A backup when structured data are missing or wrong.

- A narrative that ties the other modalities together.

Timelines, staging, and trial eligibility will be computed from the combination of modalities, with NLP contributing but not carrying the whole weight.

6.3 Generative Documentation and Structured Templates

Another frontier: using AI to generate better notes in the first place.

If AI‑assisted documentation pushes oncologists toward:

- Synoptic templates for path and radiology.

- Structured staging fields auto‑filled then confirmed.

- Auto‑suggested treatment lines and intents to accept/modify.

Then future NLP has an easier job because the source text is more regular. The irony is that the more AI helps create the notes, the less “heroic” NLP needs to be to understand them.

7. How to Use These Tools Safely and Effectively

If you are in a cancer center, health system, or startup thinking about deploying clinical NLP on oncology notes, here is the blunt guidance.

Be precise about the question.

“We want insights from notes” is meaningless. “We want to estimate time from metastatic diagnosis to first immunotherapy” is concrete. NLP can help there.

Partition fields into: must‑be‑right vs nice‑to‑have.

- Must‑be‑right: trial eligibility criteria, registry legal fields. These require human validation.

- Nice‑to‑have: research analytics, cohort exploration. Here you can accept 85–90% accuracy.
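The partition becomes operational once each field has an audited accuracy from your own charts. A sketch, assuming the tiering above, a hypothetical 85% bar for nice-to-have fields, and a Wilson score lower bound so that a small audit sample is not over-trusted:

```python
import math

NICE_TO_HAVE_THRESHOLD = 0.85  # hypothetical bar, from the 85-90% range above


def wilson_lower_bound(correct: int, total: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a proportion (z = 1.96 for about 95%)."""
    if total == 0:
        return 0.0
    p = correct / total
    denom = 1 + z * z / total
    centre = p + z * z / (2 * total)
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total))
    return (centre - margin) / denom


def route_field(tier: str, correct: int, audited: int) -> str:
    """Must-be-right fields always go to humans; others are accepted only if the bound clears the bar."""
    if tier == "must_be_right":
        return "human_validation"
    if wilson_lower_bound(correct, audited) >= NICE_TO_HAVE_THRESHOLD:
        return "auto_accept"
    return "needs_more_work"
```

With a 100-chart audit, 88 correct looks like 88% but has a lower bound of roughly 0.80, so it would not clear the bar; 190 of 200 (lower bound about 0.91) would.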

Validate locally.

Do not trust vendor AUCs from some other system. Sample 100–200 real charts from your oncology service lines. Audit performance. You will find quirks.

Combine structured and unstructured data.

Pull line of therapy from orders + administration data, and use NLP only to clarify intent and context. Do not force text‑only inferencing when the structured fields already hold 80% of the answer.

Keep a human in the loop for high‑stakes use.

Think of NLP as the resident who pre‑abstracts the chart. Helpful, time‑saving, sometimes wrong. You still sign the note.

FAQ

1. Can clinical NLP fully automate cancer registry abstraction from oncology notes?

No. It can significantly reduce the manual burden by pre‑populating fields like primary site, histology, and some staging components, but registry‑grade data still require human review. Especially for nuanced staging, multiple primaries, and complex treatment histories, manual validation remains essential.

2. How accurate are current NLP tools at identifying cancer stage from notes?

They are generally good at extracting explicit stage statements (“Stage IIIB non‑small cell lung cancer”) and components like T, N, and M when documented. Accuracy drops when the stage must be inferred from scattered descriptions, when AJCC rules changed over time, or when clinical vs pathologic staging is ambiguous. For population analytics, the performance is often acceptable; for individual patient decisions, it is not reliable enough as a sole source.

3. Can these tools figure out line of therapy (first‑line, second‑line, etc.) reliably?

They can approximate it, especially when clinicians explicitly write “second‑line” or “maintenance.” However, inferring lines purely from text—across long care histories with regimen switches, toxicity holds, and re‑challenges—is error‑prone. The best practice is to derive line of therapy primarily from structured treatment and disease status data, and use NLP for refinement, not as the only source.

4. Is it realistic to use NLP on notes to find patients eligible for clinical trials?

Yes, but only for pre‑screening, not final eligibility. NLP can rapidly narrow a population from thousands to a manageable short list by identifying cancer type, stage, common biomarkers, and obvious exclusion factors. Final determination still requires human review of structured labs, imaging, and the full chart because eligibility criteria are too detailed and variable to be captured reliably from free text alone.

5. How do large language models change the picture for oncology NLP?

LLMs improve the flexibility and coverage of extraction and can generate high‑quality longitudinal summaries from messy notes. They are particularly good as “copilots” for tumor board prep or research abstraction. However, they introduce new risks: non‑determinism, hallucination, and difficulty in rigorous validation. For now, they supplement but do not replace more constrained, validated extraction pipelines for high‑stakes tasks.

6. What should an oncology program prioritize first when adopting clinical NLP?

Start with high‑value, medium‑risk use cases: treatment pattern analytics, adjuvant therapy quality metrics, basic registry pre‑fill, or trial pre‑screening. Define narrow, measurable targets, integrate structured data from the EHR, and build a feedback loop with clinicians and registrars. Once those foundations are working and trusted, you can move toward more ambitious territory like semi‑automated staging support or AI‑assisted documentation.

With that progression, you end up with a system that does not pretend to “understand” oncology notes like a human, but that quietly takes hours of grunt work off your team’s plate. And once that foundation is stable, you are in a position to plug into the next generation of multimodal oncology AI. But that escalation is a story for another day.