Program directors are not secretly running every personal statement through ChatGPT detectors. That’s the myth. And it needs to die.

The panic around “AI-detection tools” in residency applications is wildly out of proportion to what’s actually happening. I’ve heard med students whisper about it in anatomy labs, on away rotations, in coffee lines at conferences: “My advisor said PDs are using detectors now.” Usually followed by some vague reference to “a friend” who “got flagged.”

Let’s put some structure and evidence around this instead of fear and rumors.

What PDs Actually Care About (Spoiler: Not Your Perplexity Score)

Most program directors care about three things in your personal statement:

- Does it make sense for this specialty and your application as a whole?

- Does it sound like a real human they’d want to work with?

- Does it avoid red flags (incoherence, grandiosity, obvious plagiarism, ethical disasters)?

They are not sitting there thinking: “Let me upload this to an AI-detection site and check the percentage.”

Why? Because:

- AI detectors are notoriously unreliable and easily fooled.

- They add work to an already overloaded process.

- They expose programs to risk if decisions rest on what is basically junk science.

- There is no uniform policy across specialties or institutions to use them.

Have some individual PDs or coordinators experimented with AI-detection tools? Yes. I’ve heard that directly. But that’s very different from “everyone is doing it” or “you’ll get rejected if ChatGPT touched your draft.”

Right now, AI detectors are not a systematic, standardized, or dependable part of residency screening. They’re an occasional curiosity at best.

The Reality of AI Detection Tools: How Bad Are They, Actually?

Let’s be blunt: the current generation of AI-detection tools is garbage for high-stakes decisions.

Multiple independent tests have shown:

- They flag human-written text as “AI-generated” at a non-trivial rate.

- They can completely miss AI-generated text that’s lightly edited or rephrased.

- They’re biased against non-native English writers because those writers tend to use simpler, more predictable language.

There have been published cases in academia where students were accused of cheating based solely on detection tools, and the tools were wrong. It has happened often enough that many universities now explicitly warn faculty not to rely on them as primary evidence.

| Error type | Approximate rate (%) |
|---|---|
| False AI-flag on real human text | 20 |
| Missed detection on AI text | 25 |

That chart shows illustrative percentages, not results from a single definitive study; it reflects the general range of error you see when people actually test these tools on mixed corpora. The point is not the exact percentages. The point is: the error rates are high enough that no sane program director wants to base decisions on them.
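To see why those error rates matter at scale, here is a rough back-of-the-envelope sketch in Python. It reuses the illustrative 20% false-flag and 25% miss rates from the chart above. The pool of 1,000 applications, the 30% share of substantially AI-written statements, and the helper name `flag_breakdown` are all hypothetical assumptions for illustration, not data from any program.

```python
def flag_breakdown(n_apps, ai_share, false_flag_rate, miss_rate):
    """Expected counts for a detector run over a mixed pool of statements.

    Returns (wrongly_flagged_humans, correctly_flagged_ai, missed_ai).
    All inputs are illustrative assumptions, not measured values.
    """
    n_ai = n_apps * ai_share                     # statements that really were AI-written
    n_human = n_apps - n_ai                      # statements written by the applicant
    wrongly_flagged = n_human * false_flag_rate  # honest writers flagged anyway
    missed_ai = n_ai * miss_rate                 # AI text the detector lets through
    caught_ai = n_ai - missed_ai                 # AI text the detector does flag
    return wrongly_flagged, caught_ai, missed_ai


# Hypothetical pool: 1,000 applications, 30% substantially AI-written.
wrong, caught, missed = flag_breakdown(1000, 0.30, 0.20, 0.25)

# Of everything the detector flags, what fraction is an honest applicant?
share_wrong = wrong / (wrong + caught)

print(f"Honest statements wrongly flagged: {wrong:.0f}")
print(f"AI statements flagged: {caught:.0f}")
print(f"AI statements missed: {missed:.0f}")
print(f"Share of flags that hit honest writers: {share_wrong:.0%}")
```

Under these made-up inputs, about 140 honest statements get flagged alongside 225 AI-written ones, so roughly 38% of all flags land on applicants who did nothing wrong, while 75 AI-written statements slip through. Change the assumed share or rates and the numbers move, but the pattern holds: a flag by itself tells a PD very little.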

So if a PD uses one of these tools, what are they really doing?

- Satisfying curiosity

- Maybe investigating something that already seemed very off

- Playing with a new toy, not implementing a selection policy

The nightmare scenario people imagine—“my solid, honest, edited statement gets flagged and I get auto-rejected”—doesn’t fit the reality of how programs work or what these tools can actually do.

What Programs Are Doing About AI (The Quiet, Boring Truth)

Here’s the piece no one wants to talk about: the people making residency application decisions barely have time to read your statement properly, much less run it through a bunch of tools.

Typical scenario I’ve seen and heard repeatedly from faculty and PDs:

- 800–1500 applications for 8–20 spots.

- Screening time per application measured in minutes, not half-hours.

- Heavy weighting on Step scores, clerkship grades, letters, school reputation, and any known connections (home students, rotators, referrals).

The personal statement?

- Often skimmed rapidly.

- Sometimes read more carefully only for interview invites or ranking, not initial filter.

- Sometimes not read at all unless something else about the application stands out.

Now layer AI paranoia on top of that. For a PD to use detectors systematically, they’d need:

- A standardized protocol (who runs it, on which apps, how data is stored)

- A threshold for “flagged” vs “not flagged”

- A committee-level or institutional policy on what to do when something is “flagged”

- Legal/ethical sign-off that they’re not discriminating or making decisions off unreliable pseudo-forensics

Most programs do not have the bandwidth or appetite for that. They’re barely keeping their heads above the flood of applications as it is.

What is happening more commonly:

- General awareness that AI exists and applicants are using it.

- Internal discussions about authenticity and how to judge fit.

- More focus on interviews, letters, and concrete behavior than on pristine narrative prose.

So yes, the ecosystem is adjusting to AI. But no, it’s not “full surveillance state with detectors on every paragraph.”

The Real “Detection” PDs Use: Human Pattern Recognition

Program directors do “detect” AI, but not with tools. With their brains.

This is what they actually notice:

Tone mismatch across materials

LORs describe you as reserved, quiet, technically strong. Your statement reads like a TED-talk guru who “revolutionizes every team environment” and “transforms patient care paradigms.” That kind of jarring mismatch raises eyebrows.

Voice mismatch between PS and supplemental or ERAS entries

Your ERAS descriptions are simple, slightly awkward, straightforward. Your personal statement suddenly becomes hyper-polished, metaphor-heavy, and filled with complex structures you never use elsewhere. That’s a clue.

Incoherent, generic content

Statements that read like they were assembled from stock phrases:

- “Ever since I was a child, I have been fascinated by the human body…”

- “This experience cemented my desire to pursue [specialty]…”

- “…I will be a compassionate, hardworking, and dedicated resident.”

AI or not, this just signals low effort and zero self-awareness.

Content that doesn’t fit your actual trajectory

If your CV screams research-heavy neuro person and your statement is suddenly a Hallmark story about rural primary care with a single throwaway neuro mention, it feels disjointed. Whether that came from AI, a consultant, or a friend, the issue is the inconsistency.

None of this requires an AI detector. It requires reading like a clinician: pattern recognition, gestalt, and context.

[Flowchart: how a PD reads a personal statement. Start: read personal statement. Decision points: any obvious red flags? Big mismatch? Actions: look for plagiarism/major inconsistencies; compare tone with ERAS entries. Outcomes: question authenticity, lower trust; move on to letters & metrics.]

| Step 6 | Any obvious red flags? |

| Step 7 | Big mismatch? |

This is the “detection system” you should actually care about. Because it works. And PDs have been doing it long before ChatGPT existed.

So Can You Use AI At All? Yes—But Not How Most People Are Using It

Let me be direct: blanket “never touch AI” advice is outdated and unrealistic. Many applicants are using AI in some capacity. PDs know this. They also know faculty themselves are using AI to draft emails, outlines, even parts of talks.

The line that matters isn’t “zero AI contact.” The line is: does the final product honestly reflect your thinking, your experiences, and your voice?

Here are ways to use AI that I consider low-risk and actually productive:

- Brainstorming themes or angles based on your experiences.

- Getting structure suggestions: intro–body–conclusion, flow, transitions.

- Cleaning up grammar and syntax at the end, especially if English is not your first language.

- Generating alternative phrasings when you’re stuck on one rigid sentence.

And here are “high-risk” uses that absolutely can get you into trouble, with or without detectors:

- Asking AI to “write my entire personal statement from scratch about wanting to do internal medicine” and submitting a lightly edited version.

- Letting AI invent patient stories or experiences that did not happen. That crosses from tool-assisted to unethical fabrication.

- Using AI to mimic someone else’s style (a faculty member’s, a sample online, another applicant’s) instead of your own.

The main test: if a PD asked you, “Walk me through how you wrote this,” could you honestly describe the process without your stomach dropping? If not, you already know where you are on the spectrum.

What Will Happen If AI Use Becomes More Obvious?

Eventually, some version of the following will happen:

- Programs will accept that a good portion of personal statements had AI assistance.

- The weight of the PS will continue to shrink relative to interviews, SLOEs, and performance.

- Some specialties may update their policies or ERAS instructions about AI usage, but enforcement will be murky.

We’re already halfway there. In many competitive fields (derm, ortho, plastics), program directors care far more about who you’ve worked with, what they say about you, your concrete track record, and whether your story fits your CV than about whether every sentence was painstakingly wordsmithed at 2 a.m.

The real shift won’t be “we’re scanning every statement for AI.” It will be:

- More prompt-based, short-answer style questions instead of one big personal statement.

- More in-person or virtual assessments (interviews, situational judgment tests) that are harder to AI-ify.

- Maybe, eventually, transparent expectations: “You may use tools to help with grammar, but the ideas and experiences must be your own.”

What you’re not going to see any time soon: universal, reliable AI-detection infrastructure used as a core gatekeeper. The technology is not there. The risk is too high. The incentives are wrong.

The Real Risks You Should Worry About (None of Them Are Detectors)

You’re obsessing over being “flagged by a tool” when you should be more worried about three very human things:

Sounding like everyone else

PDs complain constantly that every statement blurs together. Same template, same clichés, same Safe Answers. If AI pushes you further into beige, that hurts you.

Writing something you can’t back up in person

If AI helps you write unusually complex sentences or emotional reflections that you’d never actually say out loud, you’re setting yourself up for awkward interviews. PDs don’t need detectors to sniff that out.

Letting the tool flatten your actual story

AI is excellent at average. At generic. At “safe, plausible text.” But residency selection isn’t about awarding points for generic correctness. It’s about picking people whose actual path and personality fit the program.

| Risk | Actual Impact on Match Chances |
|---|---|
| Generic, cliché statement | High |
| Mismatch with CV/letters | High |
| Weak or vague reasoning | Medium–High |
| Light AI editing of grammar | Near-zero |
| Being 'detected' by a tool | Very low currently |

Focus on the first three. They’ve always mattered, and they still do.

How to Write in a Way That Survives Any AI Panic

If you want a practical north star, use this:

Write a statement that:

- You could explain line-by-line in an interview without hesitation.

- Your letter writers would recognize as consistent with the person they know.

- A friend who knows you well would say, “Yeah, this sounds like you—maybe a bit more polished.”

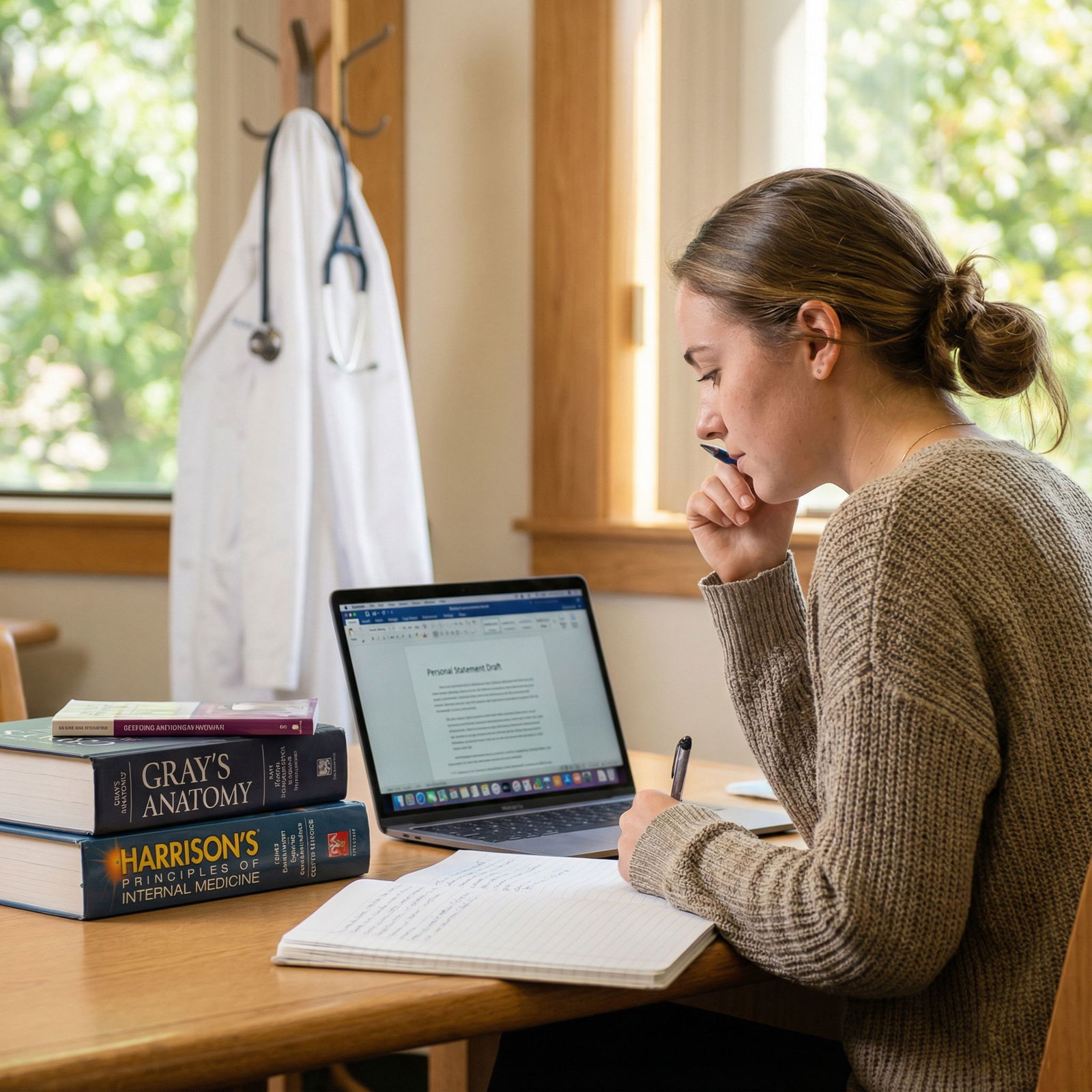

Process-wise, the safest, most effective pattern I’ve seen is:

- Start with bullet points of real experiences, not with the blank-page “personal statement” dread.

- Free-write a messy first draft in your own words—yes, it can be ugly.

- If you use AI, apply it later: for rephrasing clunky parts, improving transitions, tightening language.

- Then re-humanize it. Read it out loud. Strip out any phrasing you wouldn’t naturally use. Put back some imperfection. That’s your voice.

If you do that, even if some overzealous PD runs your statement through a toy detector for fun, the bigger picture of your application will still look coherent and honest. That’s what actually decides interviews and ranks.

FAQs

1. Could I really get rejected just because an AI detector flagged my statement?

Right now, that’s highly unlikely. There is no consistent, validated standard across programs, and most PDs know these tools are unreliable. If you’re rejected, it will almost always be due to the usual suspects: scores, letters, fit, institutional priorities—not a “95% AI-generated” label from some janky website.

2. Is it ethical to use AI to help with grammar or organization?

Yes, if the ideas, experiences, and core wording start from you. Using a tool to clean up grammar or help structure your thoughts is no more unethical than using Grammarly or asking a mentor to edit. It crosses the line when you let AI invent content, fabricate stories, or replace your actual thinking.

3. What if English isn’t my first language and AI makes my writing sound more fluent?

You’re exactly the kind of person these tools can help without penalty—if you’re honest with yourself about authorship. Use AI to clarify and polish what you’ve already written, not to generate generic personal statements from scratch. Programs know international and ESL applicants may write differently; they’re not out to punish you for using reasonable tools to be understood.