Hospitals do not adopt AI because it is innovative. They adopt it because somebody powerful is scared of being left behind—or scared of being blamed.

Once you understand that, the whole selection process suddenly makes sense.

I have sat in those rooms. I have watched a CMO kill a clearly superior tool because the vendor “felt small,” and I have watched a CIO force through a mediocre AI platform because the board chair saw it in a McKinsey slide deck. You will not hear this in vendor webinars or glossy case studies, but here is how the decisions really get made.

Let’s walk through what actually happens inside a hospital when an “AI solution” starts making noise at the door.

The Real Trigger: Why AI Even Gets on the Agenda

Nobody wakes up in hospital leadership saying, “Let’s thoughtfully evaluate AI this quarter.” The AI conversation usually starts for one of four reasons:

- A crisis metric is red.

- A competitor just announced something flashy.

- A vendor found the right champion and scared them.

- The board wants the word “AI” in the strategic plan.

| Trigger | Rough share (%) |
|---|---|
| Operational crisis | 35 |
| Competitive pressure | 25 |
| Vendor-driven | 25 |
| Board/strategy push | 15 |

Here is what that looks like on the ground:

- The ICU is overflowing, length of stay is creeping up, readmission penalties are hitting margins. Suddenly an “AI discharge prediction engine” sounds very attractive.

- The health system across town just blasted the press with “first in the region” AI radiology or sepsis prediction. Your CEO immediately asks, “What are we doing in this space?”

- A vendor’s sales lead manages to corner the CMO at a conference: “We reduced sepsis mortality by 18% at a system just like yours.” That conversation turns into an internal email: “We should look at this.”

- A consulting firm presents a five-year strategic roadmap to the board. There is a slide titled “Digital Transformation and AI Enablement.” Now leadership needs something to point at.

Everything after that is theater framed as “objective evaluation.”

Who Actually Makes the Decision (And Who Just Thinks They Do)

You will see a lot of smiling faces around the table—CMO, CNO, CIO, CMIO, maybe a COO, a few key service line chiefs, quality, IT security, finance. But not all of them matter equally.

Let me translate the roles the way they actually function.

- The C‑suite decider: Usually the CMO or CIO, sometimes the COO. They carry the political risk and will get the angry call if it fails. Their fear level drives 70% of the decision.

- The influencer: CMIO / CNIO / service line chief (radiology, ED, ICU, oncology). If they are excited and loud, they can carry a mediocre AI vendor over the finish line.

- The necessary obstacles: IT security, compliance, legal, data governance. They rarely push things forward; they only stop or slow them. If each of them says “I’m okay with it if X,” the project lives.

- The scoreboard watchers: Finance and quality. They ask, “Show me ROI” and “Show me impact on metrics.” Sometimes that is probing; sometimes it is pure formality.

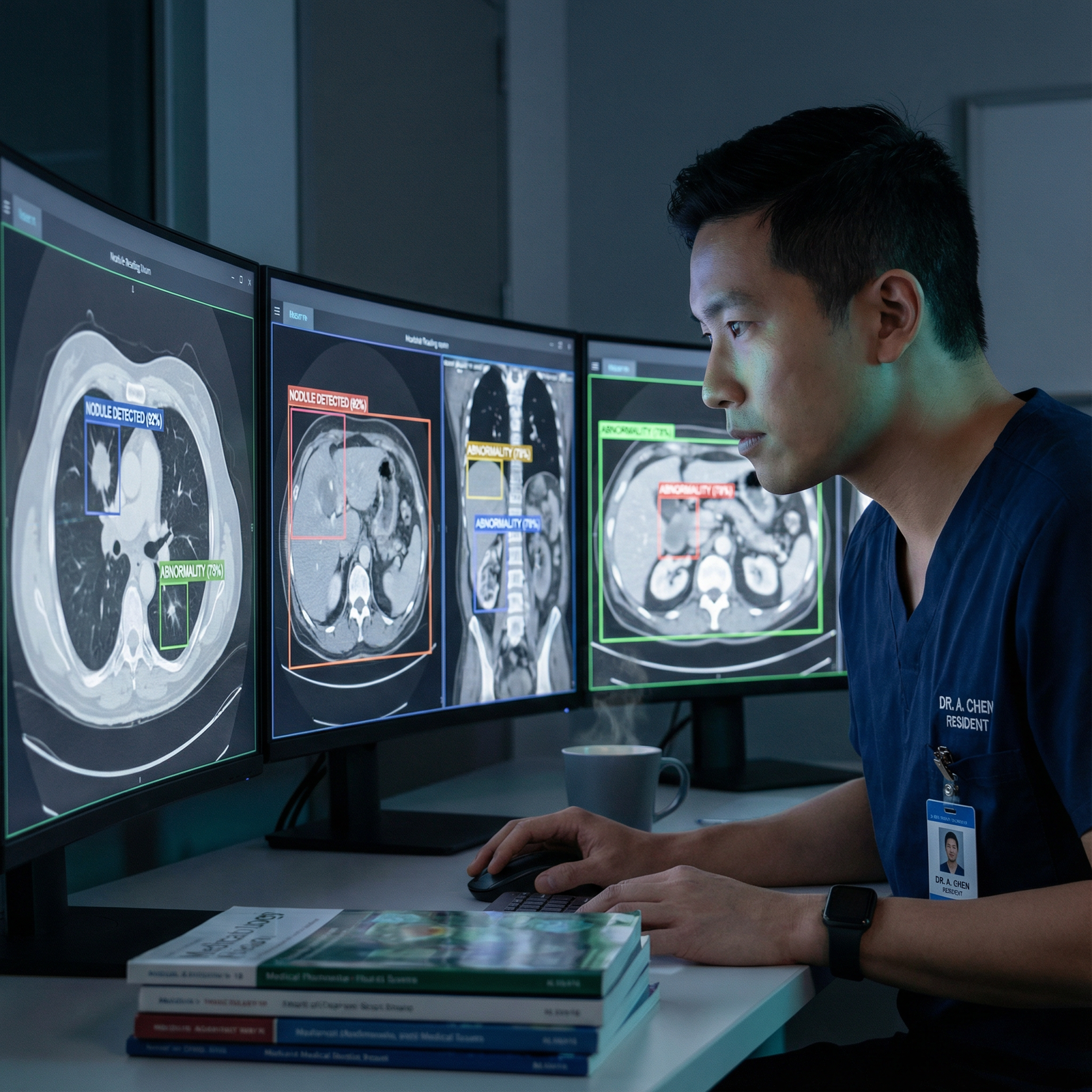

The residents, front-line nurses, and staff who will actually click those buttons? They show up after the contract is signed, in a training session they did not ask for.

Step 1: The “Pilot” That Isn’t Really a Pilot

The word “pilot” gets thrown around constantly. Do not be fooled. In most hospitals, “pilot” means “half-committed purchase with a face-saving exit ramp.”

Here is what usually happens behind the “pilot” label:

- The vendor is already well inside the door; a formal RFP may or may not have happened.

- The contract often locks in a longer term with a grace period: “We will pilot for 6 months, then roll out systemwide if X.”

- Everyone talks about “rigorous evaluation,” but the success metrics are vaguely defined: “Provider satisfaction,” “impact on workflow,” “preliminary quality signal.”

You will see people nodding solemnly while saying things like:

“We’re going to treat this as an experiment. Data‑driven. If it doesn’t work, we shut it down.”

Then they do none of the things required to run an actual experiment.

There is rarely:

- A pre‑specified primary outcome.

- A clearly defined comparator group.

- A power calculation.

- A pre‑registered analysis plan.

Instead, six months later someone says, “The users seem to like it, and the early numbers look encouraging,” and the pilot quietly becomes permanent.

| Step | Description |
|---|---|
| Step 1 | Vendor intro |
| Step 2 | Leadership interest |
| Step 3 | Pilot agreement signed |
| Step 4 | Limited rollout |
| Step 5 | Soft, mixed outcomes |
| Step 6 | Quietly sunset |
| Step 7 | Expand and entrench |
| Step 8 | Any major disaster |

If you are wondering why so many mediocre AI tools keep spreading, that diagram is your answer.
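For contrast, here is what the missing power calculation looks like in practice. This is a minimal sketch, assuming the pre-specified primary outcome is a binary rate (30-day readmission, say) compared between a pilot unit and a comparator unit, and using the standard normal-approximation sample-size formula for two independent proportions. The 15% baseline and 12% target are hypothetical placeholders, not numbers from any real pilot.

```python
import math
from statistics import NormalDist


def patients_per_arm(p_control: float, p_pilot: float,
                     alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate patients needed per group to detect a change in a binary
    outcome rate (two-sided test, normal approximation for two proportions)."""
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for the two-sided test
    z_beta = z.inv_cdf(power)            # quantile matching the desired power
    p_bar = (p_control + p_pilot) / 2    # pooled rate under "no effect"
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_control * (1 - p_control)
                                      + p_pilot * (1 - p_pilot))) ** 2
    return math.ceil(numerator / (p_control - p_pilot) ** 2)


# Hypothetical pre-specified target: cut 30-day readmissions from 15% to 12%.
n = patients_per_arm(0.15, 0.12)
print(f"Patients needed per arm: {n}")
```

Under those assumed rates the answer comes out to roughly two thousand patients per arm. The exact figure matters less than the discipline: it forces someone to commit, before go-live, to what “it worked” means and how much data it takes to show it, and it often reveals that a six-month pilot on a single unit may be too small to answer the question at all.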

What Actually Gets Evaluated (Versus What Everyone Pretends)

Vendors love to throw ROC curves, AUROC figures, p-values, and case studies at you. Those matter much less than you think.

Here are the real filters most hospitals use, in the rough order they happen:

1. “Will this embarrass us?”

This is the first, unspoken gate.

They ask:

- Has this been installed at other recognizable systems? (Someone inevitably says, “Do Mayo or Cleveland Clinic use it?”)

- Has any public scandal happened with this vendor?

- Is the risk of a New York Times piece low?

If the vendor passes the “New York Times test,” they move on.

2. “How hard will this break our EHR and IT stack?”

Epic, Cerner, Meditech, whatever you run—it dominates the discussion.

Questions that matter:

- Is there a native EHR module that sort of does this already? If yes, specialty vendors start the race behind.

- Does it use standards such as SMART on FHIR or HL7, or is it some brittle one-off interface that will crash with every upgrade?

- Will our already-overloaded IT team need to babysit it?

This is where many technically impressive AI startups die. They underestimate the sheer inertia of an EHR with 20 years of custom tweaks and local hacks.

| Factor | Integrated with EHR vendor | Standalone AI platform |
|---|---|---|
| IT burden | Lower | Higher |
| Clinician adoption | Higher | Variable |
| Speed of deployment | Faster | Slower |
| Customization | Limited | Greater |
| Political safety | Higher | Lower |

3. “Will clinicians actually touch it?”

This is where the CMIO and service line chiefs stop being polite.

You will hear comments like:

- “If it lives outside the main workflow, it will not be used.”

- “That’s three more clicks; our residents are already mutinying.”

- “Alerts? God help you if you add more alerts.”

The real bar is: Can you slip this into existing muscle memory with minimal new friction?

A shockingly large number of AI tools get rejected not because their models are bad, but because their buttons are in the wrong place.

4. “Can we afford to be wrong in this direction?”

Here is the secret nobody spells out: model performance is evaluated through the lens of liability more than pure statistics.

For example:

- A sepsis early warning model with high sensitivity but many false positives might be accepted—clinicians can always ignore alerts.

- A discharge prediction model that occasionally flags someone as “safe” who bounces back to the ICU the next day? Terrifying. The risk lives on the front page of quality dashboards and potential root cause analyses.

So they ask:

- “What happens when the model is wrong?”

- “Who is on the hook—clinician or institution?”

- “Does this change documentation, billing, or standard of care expectations?”

Almost no vendor slide deck answers those explicitly. But in closed‑door meetings, that is what determines whether a “great model” ever touches a patient.
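The asymmetry in the sepsis and discharge examples above is easier to see with numbers. A minimal sketch with entirely hypothetical figures: a sepsis alert with 90% sensitivity and 80% specificity, running on a unit where 5% of patients develop sepsis. It counts expected true alerts, false alerts, and missed cases per 1,000 patients, plus the positive predictive value (the share of alerts that are real).

```python
def alert_burden(sensitivity: float, specificity: float,
                 prevalence: float, patients: int = 1000) -> dict:
    """Expected alert counts for a screening model on a given population."""
    sick = patients * prevalence
    healthy = patients - sick
    true_alerts = sensitivity * sick              # real cases correctly flagged
    false_alerts = (1 - specificity) * healthy    # non-cases flagged anyway
    missed = sick - true_alerts                   # real cases never flagged
    ppv = true_alerts / (true_alerts + false_alerts)  # share of alerts that are real
    return {"true_alerts": true_alerts, "false_alerts": false_alerts,
            "missed": missed, "ppv": ppv}


# Hypothetical sepsis model: 90% sensitivity, 80% specificity, 5% prevalence.
burden = alert_burden(0.90, 0.80, 0.05)
for name, value in burden.items():
    print(f"{name}: {value:.2f}")
```

With these assumed inputs, the model misses only 5 of 50 true cases but fires about 190 false alerts, so roughly four of every five alerts are wrong. That is tolerable when the cost of a false alert is a clinician clicking “dismiss.” For a discharge model the costly error is the other kind: a miss, the patient labeled “safe” who bounces back, which is exactly the outcome that ends up in a root cause analysis.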

5. “Can we show ROI quickly enough to keep leadership interested?”

Finance does not need perfect ROI. They need defensible ROI.

That means:

- Some believable linkage to reduced length of stay, readmissions, denials, staffing costs, or malpractice risk.

- A timeline of 12–24 months to show visible impact.

- A price that is not absurd relative to the cost of an FTE.

| ROI driver | Rough share (%) |
|---|---|
| LOS reduction | 30 |
| Readmissions | 20 |
| Staff productivity | 25 |
| Revenue capture | 15 |
| Legal/risk reduction | 10 |

If the vendor’s promised gains sound like wishful thinking, leadership will mentally discount them by 50% and ask, “Does it still make sense?”
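That mental haircut is simple arithmetic. A minimal sketch, where every figure is a hypothetical placeholder: a vendor promises annual savings, and the tool carries an annual license-and-support cost plus a one-time implementation cost. The function applies the 50% discount, checks whether net savings stay positive, and computes a payback period as a rough stand-in for the 12–24 month window finance expects.

```python
def survives_haircut(promised_annual_savings: float, annual_cost: float,
                     one_time_cost: float = 0.0, haircut: float = 0.5) -> dict:
    """Re-run a vendor's ROI claim after leadership discounts the promised gains."""
    believed = promised_annual_savings * (1 - haircut)  # savings leadership actually believes
    net_annual = believed - annual_cost                 # left over after recurring costs
    # Months to recover the one-time cost; undefined if the tool never nets positive.
    payback_months = one_time_cost / net_annual * 12 if net_annual > 0 else None
    return {"believed_savings": believed, "net_annual": net_annual,
            "payback_months": payback_months, "still_makes_sense": net_annual > 0}


# Hypothetical pitches: same cost structure, different promised savings.
strong = survives_haircut(1_200_000, annual_cost=400_000, one_time_cost=300_000)
weak = survives_haircut(700_000, annual_cost=400_000, one_time_cost=300_000)
print(strong)
print(weak)
```

In this made-up example the stronger pitch still pays back in about 18 months after the haircut, inside the window; the weaker one loses money every year once its promises are halved, which is usually where the conversation ends.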

Dirty Secret: Most “AI Evaluation Committees” Are Political Theater

You will hear about “Digital Health Councils,” “Clinical Decision Support Committees,” “AI Governance Boards.” I have sat in those.

Let me tell you what really happens in a lot of systems:

- The real decision was 80% made in a hallway conversation between a C‑suite exec and a powerful service line chief.

- The formal committee is there to create the appearance of due process and spread responsibility.

- The people in the room know this, but they go through the motions—discussion, pros/cons, “we’ll pilot and reassess.”

Once an influential radiology chair says, “We’ve already started talking to Vendor X; several of my docs are very excited,” it is uphill for any alternative.

Occasionally, a strong-willed CMIO or a stubborn security officer will legitimately block a tool. But most of the time, the committee’s job is smoothing, not choosing.

The Compliance and Ethics Layer: Often Late, Sometimes Fatal

AI ethics looks great in slide decks. In practice, it often shows up late in the game.

Typical pattern:

- Vendor has already done internal demos.

- A draft contract is floating around.

- Then someone says, “We should probably run this by legal, compliance, and the ethics committee.”

Now the awkward questions appear:

- “Where was this model trained? Are there demographic biases?”

- “Is patient data leaving the country? Is it used to further train their model?”

- “What’s our explainability story if a patient asks why the AI suggested X?”

Sometimes the answers are hand-wavy. Sometimes they are flat-out terrifying.

This can derail a deal fast when:

- The vendor cannot clearly state where data lives.

- There is any whiff of “we own your data and can use it however we want.”

- There is no process for patients to opt out, and your region has aggressive privacy regulators.

The irony? Many hospitals only properly harden their AI governance after they get burned once.

How Bias and Inequity Are Quietly Handled (Or Not)

Most leadership teams have now heard the word “bias” enough to be anxious. But “handling” it ranges from serious to laughable.

Here is what serious looks like (rare but growing):

- The data science or quality team independently tests the AI model’s performance across race, gender, age, and language groups using local data.

- They look for systematically worse sensitivity/specificity in subgroups.

- They require the vendor to share performance by subgroup from their own validation.

Here is what common looks like:

- The vendor has a slide that says, “We checked bias and it looks good.”

- Someone on the committee says, “We should keep an eye on equity impacts.”

- Nothing structured is actually implemented to monitor that after go-live.

And then, a year later, someone in primary care notices that the AI outreach for non-adherent patients is not catching non-English speakers as well. But by then, the tool is entrenched and changing vendors is politically expensive.
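The serious version of bias checking described above is not exotic. A minimal sketch, assuming you can pull local records containing the model’s binary flag, the actual outcome, and a subgroup label such as preferred language; the record layout and the toy data are made up for illustration. It reports sensitivity and specificity per subgroup, the kind of comparison that could surface a gap like the non-English-speaker one before go-live rather than a year after.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """records: iterable of (group, predicted_positive, actually_positive)."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if actual:
            c["tp" if predicted else "fn"] += 1   # real case: caught or missed
        else:
            c["fp" if predicted else "tn"] += 1   # non-case: false alarm or correct pass
    results = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        results[group] = {
            "n": positives + negatives,
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return results


# Toy, made-up records: (preferred language, model flagged?, truly non-adherent?)
records = [
    ("English", True, True), ("English", True, True), ("English", False, True),
    ("English", False, False), ("English", True, False), ("English", False, False),
    ("Spanish", True, True), ("Spanish", False, True), ("Spanish", False, True),
    ("Spanish", False, False), ("Spanish", False, False),
]
for group, m in subgroup_metrics(records).items():
    print(group, m)
```

In this toy data the model catches two of three true cases among English speakers but only one of three among Spanish speakers. With groups this small the gap could easily be noise; on real data you would want adequate counts per subgroup, and confidence intervals, before drawing conclusions.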

Implementation: Where Tools Succeed or Quietly Die

Getting selected is only half the game. Many AI tools “pass” evaluation, then fail in the wild.

The killer variables here are:

Workflow design

If implementation is:

- IT-led with minimal clinical input.

- Dropped in as “Here’s a new feature, please use it.”

- Tracked on adoption metrics but not outcome metrics.

You can predict the result: login fatigue, button fatigue, and eventual quiet neglect. The AI technically exists but might as well not.

Strong implementations do something different:

- They shadow actual clinicians to see where decisions are made.

- They remove other steps when AI is added (trade, not addition).

- They alter policies and SOPs to explicitly reference the tool.

Training and culture

Another ugly truth: you can have the smartest model on earth; if the local culture is, “I do not trust black box tools,” usage will be cosmetic.

You hear:

- “I’ll click it, but I still do what I was going to do.”

- “It’s just another thing to ignore.”

- “If this goes badly, they will blame me, not the model.”

The few sites that really get value from AI usually have:

- A respected local clinician champion who actually uses the tool and pushes peers.

- Transparent conversations about when to follow vs. override the AI.

- Feedback loops: “The model missed this cardiac case—let’s review why.”

| Time point | Approximate usage (%) |
|---|---|
| Go-live | 70 |
| Month 1 | 50 |
| Month 3 | 40 |
| Month 6 | 30 |
| Month 12 | 25 |

That chart isn’t hypothetical; those are roughly the usage curves I’ve seen more than once. High curiosity at go-live, then relentless decay—unless someone is actively tending the fire.

What’s Changing in the Next 3–5 Years

Here is the part you actually care about: how this whole messy process is going to evolve.

Several trends are already reshaping how AI gets picked inside hospitals:

- Central AI governance boards with teeth. Not just window-dressing committees—structures that can say “no” even when a powerful service line wants “yes.” They will demand actual metrics, bias checks, and sunsetting criteria.

- Model marketplaces within EHRs. Epic, Cerner, and others are building curated AI catalogs. That tilts the playing field heavily toward tools that play nicely in their ecosystem and share revenue.

- Payors and regulators stepping in. Once CMS starts tying reimbursement or penalties to how AI is used—or misused—you will see a lot more rigor and documentation.

- Local data fine-tuning. Hospitals are starting to insist: “We want to adapt your model to our data and measure our performance, not rely on external validation alone.”

- Front-line user veto power. Burned once by unusable decision support, some systems are giving superusers and clinician workgroups explicit veto influence before go-live.

| Step | Description |
|---|---|
| Step 1 | Identify clinical need |
| Step 2 | AI governance review |
| Step 3 | Market scan and shortlist |
| Step 4 | Local data validation |
| Step 5 | Bias and safety testing |
| Step 6 | Pilot with defined outcomes |
| Step 7 | Scale and monitor |
| Step 8 | Modify or retire |
| Step 9 | Targets met |

Will it be this clean? Of course not. But the direction of travel is unmistakable: less “shiny object,” more “prove it, measure it, and own the consequences.”

If You’re Inside a Hospital: How to Actually Influence This

If you are a med student, resident, nurse, or attending wondering how to shape the AI tools that end up haunting your workflow, here is the unvarnished play:

- Stop reacting at the last minute. Get yourself on the committees early. Even as a trainee, you can usually find a way into a “clinical innovation” or “informatics” working group.

- Frame every comment in terms leadership cares about: metrics, liability, staff burnout, patient safety. Complaining about “clicks” is less powerful than, “This will decrease time with patients and increase documentation errors.”

- Demand real pilots: “What is our primary outcome? How will we decide if it worked? What’s the deactivation plan if it does not?”

- Ask the awkward data questions: “How does this perform across our demographic subgroups?” You will be surprised how quickly that changes the tone.

- Identify one or two tools you like and visibly champion them. Being known as the person who both supports good tools and blocks bad ones is how you earn real influence.

FAQ: Behind-the-Scenes Questions People Actually Ask

1. Do hospitals really understand the AI models they are buying, technically?

Usually, no. A few academic centers have serious ML talent who dig into architectures and training data. Most community and even many large systems rely on vendor explanations and maybe one or two internal data-savvy folks. The core decision-makers care far more about integration, workflow, liability, and reputational risk than they do about which flavor of neural net is under the hood. The phrase “FDA cleared” gets thrown around like a quality stamp, even when leadership does not fully understand what that clearance actually covered.

2. How much does vendor brand name actually matter?

A lot more than anyone admits out loud. A mid-tier model from a big, safe-feeling vendor that already has a master services agreement with the hospital will beat a slightly better model from a scrappy startup more often than not. Why? Contracting is easier, perceived risk is lower, and if something goes wrong leadership can say, “Everyone uses them.” This is pure career risk management, not technical evaluation, and it is everywhere.

3. Are doctors being replaced by these AI tools in hospital planning?

No. Not in the way the headlines imply. Internally, the conversation is almost always about augmentation: “How do we support clinicians, reduce burnout, standardize decisions?” Nobody in serious leadership thinks a sepsis model or radiology triage tool will let them fire half their staff. What you do see is quiet pressure: “Do we really need as many scribes?” or “Can we manage more patients with the same staffing?” The threat is more about workload creep and surveillance than outright replacement.

4. Why do some obviously bad AI implementations survive for years?

Because once a tool is baked into documentation templates, billing workflows, or quality dashboards, ripping it out is painful. There is also ego and politics: the executive who championed it does not want to admit it failed; the department that built protocols around it has no appetite to redo them. So you end up with zombie AI—tools that everyone grumbles about but no one owns strongly enough to kill. Unless there is a clear safety incident or public controversy, inertia usually wins.

5. What will make hospitals actually reject more AI tools upfront in the future?

Three things: regulatory teeth, public failure, and comparative data. When a few widely publicized harms linked to poorly governed AI hit the news (and they will), boards will start demanding proof of safety and equity before go-live, not after. When CMS or payors tie reimbursement to documented oversight of AI, compliance people will gain leverage. And as independent studies start comparing AI vendors head-to-head using real-world performance, it will be harder for slick marketing to carry weak products. At that point, the bar to get in the door will rise sharply.

You now know what most people in those conference rooms will never say on the record.

The next wave of AI in healthcare will not be won by the smartest models. It will be won by the tools that survive these very human, very political selection processes and still manage to deliver real value on the floors and in the clinics.

With that picture in your head, you are better prepared for what is coming—the governance battles, the workflow wars, and the chance to shape tools that actually help instead of hinder. How you use that leverage, and what kind of AI-enabled practice you help build, is the next part of the story.