The most dangerous thing about newly accredited programs is not the low case volume. It is applicants misreading the case volume data and thinking they are safe.

You are not.

New residency programs live and die on clinical exposure. But the way applicants skim ACGME case logs, GME brochures, and glossy slide decks would make any seasoned program director wince. I have watched very smart people match into new programs based on wildly misinterpreted numbers—then spend three years trying to claw their way out of a weak training environment.

Let me walk you through the traps before you step into one.

The Biggest Misread: Confusing “Projected” With “Proven”

Most applicants make the same rookie mistake: they treat projected case volume like historical data.

You will see language like:

- “Expected 1,200 major cases per resident by graduation”

- “Projected 250 deliveries per resident per year”

- “Anticipated 300 cataract surgeries over training”

Here is the problem: those numbers are often math on paper, not cases on the board.

How those “beautiful” numbers are usually built

I have seen the spreadsheets. They often look like this:

- Take the hospital’s total annual volume (e.g., 5,000 surgeries)

- Divide by the number of residents they hope to have at full complement (e.g., 15)

- Assume perfect distribution

- Assume no change in referral patterns

- Assume no faculty turnover

- Assume no competing learners (fellows, other residents, APPs)

Then someone turns that into a neat bullet on a recruitment slide.

That is not case volume. That is wishful arithmetic.
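If you want to see how fragile that arithmetic is, here is a minimal Python sketch of the spreadsheet next to a version with two of its assumptions relaxed. The 5,000 surgeries and 15 residents come from the example above. The 30% competing-learner share and the 70% resident-eligible share are invented illustration values, not data from any program.

```python
def brochure_projection(total_annual_cases: int, planned_residents: int) -> float:
    """The slide-deck math: every case split evenly across a full complement."""
    return total_annual_cases / planned_residents


def adjusted_projection(
    total_annual_cases: int,
    planned_residents: int,
    competing_learner_share: float,
    resident_eligible_share: float,
) -> float:
    """Same math with two of the 'assume no...' lines relaxed.

    competing_learner_share: hypothetical fraction of cases taken by fellows,
        other residents, or APPs.
    resident_eligible_share: hypothetical fraction of the remaining cases
        where attendings actually let a resident participate.
    """
    available = (
        total_annual_cases
        * (1 - competing_learner_share)
        * resident_eligible_share
    )
    return available / planned_residents


brochure = brochure_projection(5000, 15)
adjusted = adjusted_projection(
    5000, 15, competing_learner_share=0.3, resident_eligible_share=0.7
)
print(f"Brochure: {brochure:.0f} cases per resident per year")
print(f"Adjusted: {adjusted:.0f} cases per resident per year")
```

With those invented inputs, the per-resident figure drops from about 333 to about 163 cases a year. The exact number is not the point. The point is that every "assume no..." line on the spreadsheet is propping up the headline.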

You should be asking:

- How many residents are currently on service?

- How many logged cases did the most senior class actually complete last year?

- What percentage of those were primary vs assist vs observer?

If they cannot show you real, anonymized ACGME case logs from current residents, you are looking at speculation.

| Source | Case count |
|---|---|
| Projected | 1,200 |
| Year 1 actual | 650 |
| Year 2 actual | 900 |

The mistake: believing the blue bar on the brochure instead of the ugly, half-filled bar of year-one reality.

Percentage Games: The “You’ll Get 80% of X” Trap

New programs love to say things like:

- “Residents will perform 80% of all hysterectomies”

- “Residents will handle 70% of ED procedures”

- “Residents will be primary surgeons on the majority of cases”

Sounds powerful. Until you realize three things:

1. Percentages of tiny numbers are still tiny. You can get 100% of 40 appendectomies and still be underprepared.

2. Percentages hide competition. "80% for residents" often means 80% of what is left after fellows, private attendings, and advanced practice providers take their share.

3. Percentages are often aspirational, not measured.

Ask: “How did you calculate that 80%? Show me last year’s breakdown.”

Here is how you should translate those claims in your head:

- “Residents will do 80% of our joint replacements” → “Prove it with case logs and faculty assignment patterns.”

- “Residents handle most of the deliveries” → “What is the actual number per PGY level over the last 12 months?”

Never let a percentage distract you from the raw count per resident.
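A minimal sketch of that translation, assuming the program will tell you both the annual case count and how many residents share it. The numbers are hypothetical, chosen only to show that a smaller percentage of a bigger pool can beat 100% of a tiny one.

```python
def cases_per_resident(
    annual_cases: int, resident_share: float, residents_sharing: int
) -> float:
    """Convert 'residents do X% of our cases' into a raw annual count per resident."""
    return annual_cases * resident_share / residents_sharing


# Hypothetical claims: "residents do 100% of our 40 appendectomies" with 4 residents
# sharing them, versus "residents do 80% of 400" with 8 residents sharing them.
small_pool = cases_per_resident(40, 1.0, 4)
large_pool = cases_per_resident(400, 0.8, 8)
print(small_pool)  # 10.0
print(large_pool)  # 40.0
```

The 100% claim sounds stronger and delivers a quarter of the cases.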

The Single-Site Illusion: Thinking One Busy Hospital Solves Everything

A common sales pitch for new programs:

- “We are the only residency drawing from this hospital.”

- “No competing residencies—you will own the OR.”

- “One main site, everything centralized, very efficient.”

This sounds ideal. Until you realize:

1. One hospital rarely has balanced pathology. You might get endless bread-and-butter and very little complexity. Or the opposite.

2. Subspecialty gaps are real. That gleaming community hospital may not do:

   - Complex oncologic resections
   - Transplant
   - Complex congenital cases
   - High-risk OB, ECMO, Level I trauma, etc.

3. External rotations are not guaranteed or equal. Being “in negotiation” with a tertiary center is not the same as having a signed, functioning rotation where current residents are already logging cases.

You must look for:

- Written, active affiliation agreements (not just “we plan to…”)

- Residents currently rotating there

- Case logs specifically from those rotations

Do not assume “one busy site” is enough for well-rounded training in 2026. It usually is not.

The Per-Resident vs Program-Level Confusion

This one burns people every year.

Programs proudly report:

- “Our service handles 3,500 deliveries annually.”

- “We perform 8,000 surgeries per year.”

- “Trauma center with over 2,000 activations annually.”

Applicants hear that and mentally convert it to “I’ll be drowning in cases.”

Wrong conversion.

You need per-resident numbers, not program-level volume. Huge difference.

| Metric | Looks Good On Paper | What You Actually Need |
|---|---|---|
| Total annual surgeries | 8,000 | Surgeries per PGY-3 |
| Total deliveries | 3,500 | Deliveries per graduate |
| Trauma activations | 2,000 | ED/trauma shifts per resident |
| ICU admissions | 1,200 | Procedures per resident |

Questions you are not asking—but should:

- How many residents per year when fully built?

- How many fellows overlap that same case pool?

- How are cases assigned between learners?

- Who logs as primary when two residents scrub?

Do not be fooled by a big hospital volume banner. Ask them to translate that to average logged cases per graduating resident, ideally with the first cohort as proof.
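Here is that translation as a minimal Python sketch. Every input is a hypothetical placeholder you would replace with the program's own answers. The teaching-eligible fraction, fellow share, class size, and training length are assumptions, not figures from any real hospital.

```python
def cases_per_graduate(
    annual_hospital_volume: int,
    teaching_eligible_share: float,  # hypothetical: fraction of cases open to any learner
    fellow_share: float,             # hypothetical: fraction of those taken by fellows
    residents_per_class: int,
    training_years: int,
) -> float:
    """Translate a hospital volume banner into approximate cases per graduating resident."""
    resident_pool = (
        annual_hospital_volume * teaching_eligible_share * (1 - fellow_share)
    )
    residents_on_service = residents_per_class * training_years  # full complement
    per_resident_per_year = resident_pool / residents_on_service
    return per_resident_per_year * training_years


# "We perform 8,000 surgeries per year" with invented assumptions:
# 40% teaching-eligible, fellows take 25%, 5 residents per class, 5-year program.
print(round(cases_per_graduate(8000, 0.4, 0.25, 5, 5)))  # 480
```

Notice that training years cancel out: cases per graduate reduce to the annual resident pool divided by class size. That is why a bigger class can quietly erase a bigger banner.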

Growth Projections: Believing “We’re Expanding” Fixes Everything

Program directors love this one:

- “We are adding two new surgeons next year.”

- “We just opened a new cath lab.”

- “We are becoming a Level II trauma center.”

Expansion is good. But assuming you will personally benefit from that expansion is a mistake.

Here is how growth projections mislead:

1. Timeline mismatch. By the time the new service line matures, you may be graduating.

2. Resident cohort growth matches service growth. They add surgeons and also increase class size, so your per-resident share may not improve.

3. Referrals are not automatic. Getting official designation (e.g., Level II trauma) does not mean volume appears overnight, or ever.

| Year | Annual cases | Total residents |
|---|---|---|
| Year 1 | 5,000 | 9 |
| Year 2 | 5,500 | 12 |
| Year 3 | 6,000 | 15 |
| Year 4 | 6,500 | 18 |

In a table like that, volume grows 30% while the resident complement doubles. Per-resident exposure does not just stall; it drops, from roughly 556 to roughly 361 annual cases per resident.
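You can check this yourself in a few lines. A minimal sketch using the four rows of the growth table above:

```python
# (program year, annual cases, total residents), copied from the growth table
growth_plan = [
    (1, 5000, 9),
    (2, 5500, 12),
    (3, 6000, 15),
    (4, 6500, 18),
]


def per_resident_trend(plan: list[tuple[int, int, int]]) -> list[float]:
    """Annual cases divided by residents on service, year by year."""
    return [cases / residents for _, cases, residents in plan]


for (year, _, _), share in zip(growth_plan, per_resident_trend(growth_plan)):
    print(f"Year {year}: {share:.0f} cases per resident")
```

Swap in the program's own planned class sizes. If the last number is not higher than the first, the growth pitch does not help you.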

Your move: force them into specifics.

- “What were logged case numbers for your current PGY-3s?”

- “With your planned growth in class size, what is the projected case-per-resident trend?”

- “Have you modeled this, or is this a verbal estimate?”

If they go vague, treat their “growth” pitch as marketing, not planning.

The “ACGME Minimums = Enough” Fallacy

Too many applicants think:

“As long as they meet ACGME minimums, I will be fine.”

No. That is how you graduate technically board-eligible but practically undertrained.

Remember:

- ACGME minimums are safety floors, not targets for quality programs.

- Mature, strong programs expect you to far exceed those numbers.

- New programs often aim for “we meet the minimums” their first few cycles. That can lock you into mediocrity.

I have seen first graduating classes with logs that made everyone uncomfortable:

- Barely above minimum for key index procedures

- Missing exposure to certain core pathologies

- Heavy tilt toward low complexity or narrow case mix

When evaluating a new program, you should be asking:

- “Where did your last graduating class fall relative to ACGME minimums—50% above? 100% above?”

- “For key index procedures, what is your median and range per graduate?”

If they say, “We are on track to meet minimums,” treat that as a warning sign, not a reassurance.
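Once you have a median per graduate for each key index procedure, the comparison is one line of arithmetic. A minimal sketch: the procedure names, medians, and minimums are hypothetical placeholders (real ACGME minimums differ by specialty and procedure), and the 50% threshold simply mirrors the question above.

```python
def pct_above_minimum(median_logged: float, acgme_minimum: int) -> float:
    """How far the graduating-class median sits above the required minimum, in percent."""
    return 100 * (median_logged - acgme_minimum) / acgme_minimum


# Hypothetical index procedures: (median logged per graduate, required minimum)
index_procedures = {
    "procedure A": (110, 50),
    "procedure B": (58, 50),
    "procedure C": (75, 50),
}

for name, (median_logged, minimum) in index_procedures.items():
    pct = pct_above_minimum(median_logged, minimum)
    warning = "  <- near the floor" if pct < 50 else ""
    print(f"{name}: {pct:.0f}% above minimum{warning}")
```

A program can honestly say it "meets the minimums" on procedure B while sitting only 16% above the floor.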

Misreading Autonomy From Case Numbers Alone

Another rookie error: assuming high volume automatically means high autonomy.

You will hear:

- “Residents do a ton of cases here.”

- “You will be in the OR constantly.”

- “We do not have fellows, so residents get all the cases.”

None of that tells you:

- Who is holding the knife

- Who is retracting

- Who is closing skin while the attending dictates notes

More cases logged as “assistant” or “other” do not fix poor autonomy. In a new program, anxious faculty may cling to cases, especially with early cohorts, before they trust the training pipeline.

You need to drill down:

- “For your current PGY-3/4s, what percentage of their key procedures are logged as primary vs assistant?”

- “When do residents usually start performing [core procedure] skin-to-skin?”

- “Do juniors ever bump seniors or get bumped by attendings for high-yield cases?”

If all you see is a big total number without breakdown by role, you are flying blind.
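If a program hands you de-identified log entries rather than a total, a breakdown by role takes a few lines. A minimal sketch that assumes each entry has been reduced to a (procedure, role) pair, with roles simplified to "primary" and "assistant". Actual ACGME role categories differ by specialty, and the entries here are invented.

```python
from collections import Counter, defaultdict


def primary_fraction(entries: list[tuple[str, str]]) -> dict[str, float]:
    """Fraction of logged cases per procedure where the resident was primary."""
    by_procedure: dict[str, Counter] = defaultdict(Counter)
    for procedure, role in entries:
        by_procedure[procedure][role] += 1
    return {
        procedure: roles["primary"] / sum(roles.values())
        for procedure, roles in by_procedure.items()
    }


# Invented log: a decent total, but mostly assisting on one key procedure.
log = (
    [("lap chole", "primary")] * 3
    + [("lap chole", "assistant")] * 7
    + [("hernia repair", "primary")] * 8
    + [("hernia repair", "assistant")] * 2
)
print(primary_fraction(log))  # {'lap chole': 0.3, 'hernia repair': 0.8}
```

Both procedures show 10 logged cases. Only the role split tells you which one you are actually learning to do.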

Ignoring Competition From Non-Resident Learners

Applicants obsess over “no other residency program here” and ignore a bigger shark: non-resident learners.

In new programs, case competition often comes from:

- Fellows (often added after the residency is created)

- Nurse practitioners and PAs with procedural privileges

- Hospitalists who do procedures previously done by residents

- Private groups that selectively involve residents only in low-yield cases

I have watched brand-new surgical residents discover, to their surprise:

- APPs doing most central lines in the ICU

- Interventional radiology swallowing many bread-and-butter procedures

- CRNAs taking all interesting airways on certain services

You must ask explicitly:

- “Which procedures are primarily done by APPs vs residents?”

- “Do any services restrict resident involvement to certain case types?”

- “Have there been conflicts over procedural opportunities, and how were they resolved?”

If the answer is vague or defensive, assume your access will be weaker than advertised.

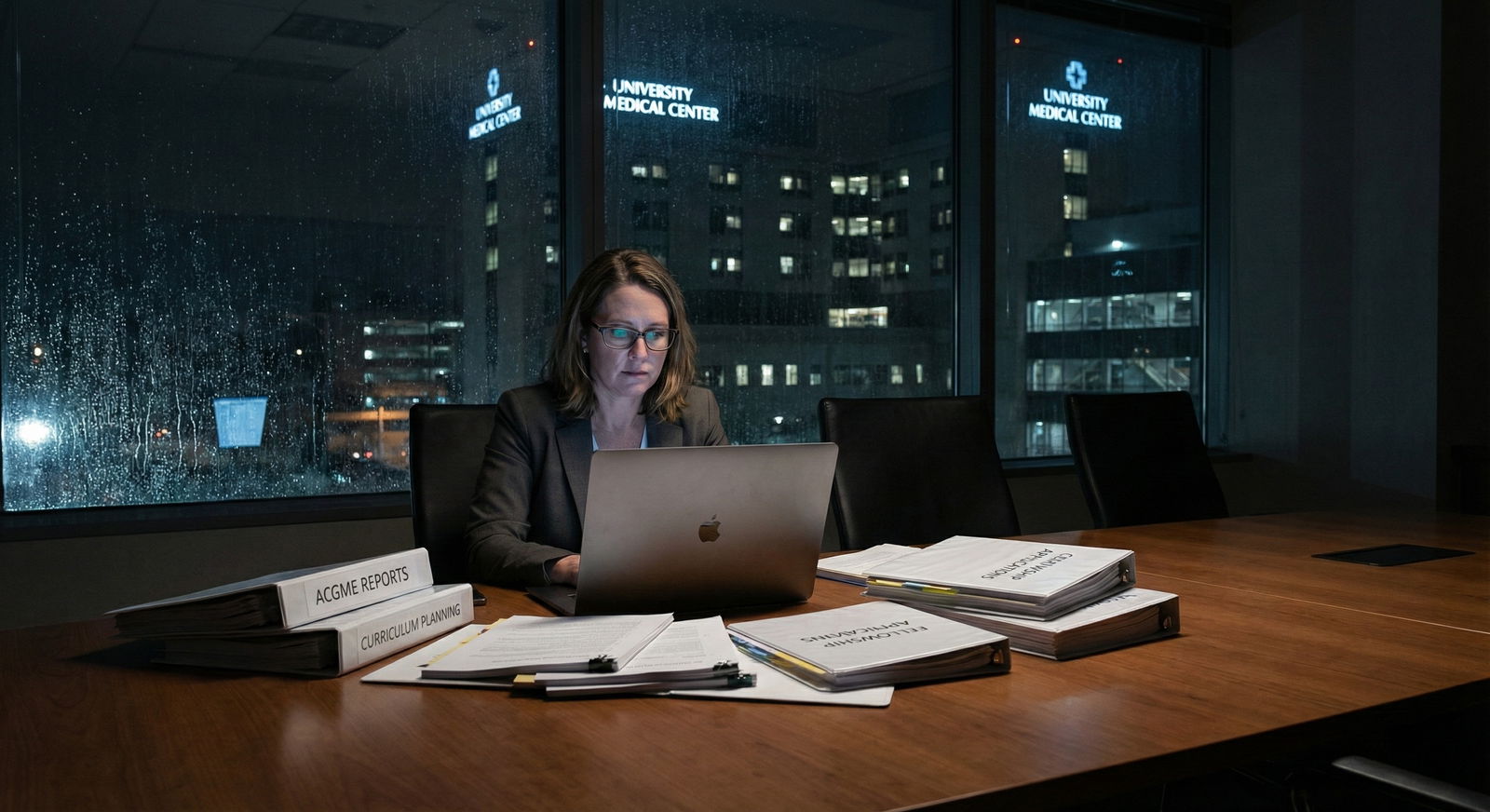

Overtrusting Attractive Dashboards and Marketing Slides

Let me be blunt: hospitals know how to design pretty dashboards that hide ugly truths.

You will see:

- Perfectly rounded averages: “Residents perform ~300 cases/year”

- Nice icons and color gradients

- Pie charts that show “balanced exposure” but no absolute values

Those graphics rarely tell you:

- Distribution (are a few residents doing 80% of the complex stuff?)

- Outliers (did someone graduate with dangerously low numbers?)

- Trends (is volume shrinking since service lines changed?)
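A dashboard average can hide exactly those problems. A minimal sketch, using invented complex-case counts for five residents, showing how a respectable-looking mean coexists with heavy concentration and low outliers. The floor of 15 is an arbitrary illustration threshold.

```python
def top_share(counts: list[int], top_n: int) -> float:
    """Fraction of all cases performed by the top_n busiest residents."""
    ordered = sorted(counts, reverse=True)
    return sum(ordered[:top_n]) / sum(counts)


def low_outliers(counts: list[int], floor: int) -> list[int]:
    """Counts that fall below a chosen floor."""
    return [c for c in counts if c < floor]


complex_cases = [60, 55, 10, 8, 7]  # invented counts, one per resident
average = sum(complex_cases) / len(complex_cases)
print(f"Dashboard average: {average:.0f} complex cases per resident")
print(f"Share done by top 2 residents: {top_share(complex_cases, 2):.0%}")
print(f"Below floor of 15: {low_outliers(complex_cases, 15)}")
```

With these invented counts the average is 28, which looks fine on a slide. Yet two residents account for about 82% of the complex cases, and three sit below the floor.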

Ask yourself:

- Did they show you raw case numbers or just sleek visuals?

- Did they discuss their worst years or only their best projections?

- Did residents echo the story, or did their faces tighten when you asked specifics?

Pretty slides are designed to sell you. You are responsible for insisting on actual data.

Failing to Cross-Check Resident Stories With Data

Some of you will make this mistake: you will fall in love with one enthusiastic resident and ignore the numbers.

I have seen first- and second-year residents in new programs who are genuinely excited. They like:

- Individualized attention

- Being “founding residents”

- Close relationships with attendings

That is real. But their perspective on case volume is often:

- Short-term (they have not yet hit senior-level expectations)

- Narrow (they have not rotated at a mature reference program)

- Biased (they want you to match there; they are invested in program success)

Your job:

- Talk to multiple residents at different PGY levels

- Ask each one for specific ranges of case numbers they have logged

- Compare what residents say to any numbers/program slides you saw

Red flags:

- Residents say “we are very busy” but cannot give even approximate case numbers.

- Different residents give wildly discordant stories.

- Seniors avoid hard questions about whether they feel ready for independent practice.

If the stories and numbers do not line up, treat the inconsistency as the message.
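One way to make that cross-check concrete: write down each resident's range and the figure on the slide, then see whether they overlap. A minimal sketch that assumes the residents you spoke with are at the same PGY level and that the slide figure refers to that same level. The ranges shown are invented.

```python
def cross_check(reported_ranges: list[tuple[int, int]], slide_figure: int) -> str:
    """Compare residents' self-reported case ranges with a program's headline figure."""
    shared_low = max(low for low, _ in reported_ranges)
    shared_high = min(high for _, high in reported_ranges)
    if shared_low > shared_high:
        return "discordant: residents' ranges do not overlap"
    if not shared_low <= slide_figure <= shared_high:
        return "mismatch: slide figure falls outside what residents report"
    return "consistent"


# Invented PGY-3 answers and a slide claiming about 300 cases per resident
print(cross_check([(180, 230), (200, 260), (190, 240)], 300))
```

This is deliberately strict. Real residents will not agree to the case, but a slide number that none of them recognize deserves a follow-up question.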

Skipping the “Worst-Case Scenario” Question

New programs are unstable by definition. Things can go wrong:

- Key faculty leave

- Hospital merges or changes service lines

- Certain procedures are centralized elsewhere

- Accreditation issues tighten case access

Most applicants never ask:

“If volume does not grow as expected, what concrete steps will you take to protect my training?”

You must push them:

- Do they have backup rotation sites ready?

- Do they have contingency plans written down?

- Have they had to remediate low case exposure for anyone yet?

Program starts → Standard training → Volume meets projections?

- Yes: continue standard training.

- No: Identify gaps → Backup sites available?

  - Yes: Send residents externally.
  - No: Residents undertrained → Difficulty with boards or jobs.

If their answer is purely optimistic—“We just know things will grow”—that is not a plan. That is denial.

How To Read Case Volume Data Correctly In New Programs

Here is how you avoid getting burned.

1. Demand per-resident, per-year numbers

Not:

- Total hospital volume

- Percentages

- “Estimated per resident”

You want something like:

- “Our current PGY-3s have logged a median of 550 major cases (range 480–620).”

- “Our first graduating class averaged 250 colonoscopies and 80 EGDs as primary operator.”
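If a program gives you raw per-resident totals instead of a summary, you can produce that kind of sentence yourself. A minimal sketch: the five PGY-3 totals are invented to match the example above. It uses the median rather than the mean, so one unusually busy resident cannot inflate the headline.

```python
from statistics import median


def summarize_class(case_counts: list[int]) -> str:
    """Summarize one class's logged totals as 'median X (range A-B)'."""
    return (
        f"median {median(case_counts):g} "
        f"(range {min(case_counts)}-{max(case_counts)})"
    )


pgy3_major_cases = [480, 530, 550, 575, 620]  # invented per-resident totals
print(summarize_class(pgy3_major_cases))  # median 550 (range 480-620)
```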

2. Compare to benchmarks from mature programs

Use:

- ACGME national reports (many specialties publish aggregate case data)

- Specialty societies that sometimes share expected exposure

- Public numbers from long-established programs (often in presentations or reviews)

You are looking for: “Do their numbers look like those of a 3rd-percentile program trying to survive, or of a program in the 50th to 75th percentile for training quality?”
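If you can find a benchmark distribution, placing a program on it is simple. A minimal sketch: the ten benchmark values are invented stand-ins for published aggregate data, not real national numbers.

```python
from bisect import bisect_left


def percentile_rank(value: float, benchmark: list[float]) -> float:
    """Percent of benchmark programs whose figure is strictly below `value`."""
    ordered = sorted(benchmark)
    return 100 * bisect_left(ordered, value) / len(ordered)


# Invented per-graduate totals for ten established programs
benchmark = [600, 700, 750, 800, 850, 900, 950, 1000, 1100, 1200]
print(percentile_rank(720, benchmark))  # 20.0
print(percentile_rank(980, benchmark))  # 70.0
```

With a real benchmark, the same two lines tell you which end of that question a new program lands on.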

3. Ask for trends over time, not static snapshots

Insist on:

- Year-by-year case numbers per resident class

- Any major service line changes and how they affected exposure

- Whether trends are improving, plateauing, or dropping

| Resident class | Case count |
|---|---|
| Class 1 | 850 |
| Class 2 | 950 |
| Class 3 | 1,100 |
| Class 4 | 1,200 |

If they cannot show you even a primitive version of that, they are not tracking what actually matters.
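Even a primitive version can be read mechanically. A minimal sketch that labels a series as improving, plateauing, or dropping from its average year-over-year change, using the four class figures in the table above. The 5% tolerance is an arbitrary choice, not a standard.

```python
def classify_trend(values: list[float], tolerance: float = 0.05) -> str:
    """Label a per-class series (at least two values) by its average relative change."""
    changes = [
        (later - earlier) / earlier for earlier, later in zip(values, values[1:])
    ]
    average_change = sum(changes) / len(changes)
    if average_change > tolerance:
        return "improving"
    if average_change < -tolerance:
        return "dropping"
    return "plateauing"


print(classify_trend([850, 950, 1100, 1200]))  # improving
```

An average can mask a late drop, so look at the individual year-to-year changes too.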

4. Separate marketing language from data language

Learn to mentally discount phrases like:

- “Broad exposure”

- “Hands-on experience”

- “High volume”

- “Strong operative experience”

Only trust:

- Numbers

- Ranges

- Comparisons to recognized standards

- Honest acknowledgment of current weaknesses and specific plans to fix them

A program that openly says, “Our vascular exposure is currently light; here is exactly how we are expanding it and how our partners cover the gap for now” is safer than the shiny brochure that claims excellence in everything.

FAQ

1. Are new residency programs always worse for case volume than established ones?

Not always. A new program in a previously unexploited high-volume hospital can actually offer excellent exposure, especially early on with small class sizes. The danger is volatility and uncertainty, not inevitability. Some new programs overshoot projections and thrive; others stumble badly. You cannot rely on the label “new” or “established.” You must look at per-resident case logs, trends, and how honest the leadership is about current gaps.

2. If a program cannot show me complete case logs yet, is that an automatic red flag?

Not automatic, but it is a serious caution. For a brand-new program with no graduates, you at least want partial data from current PGY-2s or PGY-3s and some early trends. If all they have are hospital-wide volumes and projections, you are making a high-risk bet. At minimum, you should downgrade your expectations and have a compelling reason—location, personal circumstances, specialty competitiveness—to accept that uncertainty.

3. How much above ACGME minimums should I expect to feel “safe”?

There is no universal number, but if a program is hovering near minimums for core procedures, you should be worried. Many strong programs aim for residents to be 50–200% above minimums in key areas. The exact figure depends on specialty and procedure, but your mindset should be: “Minimums keep you from being clearly deficient; they do not guarantee you are competitive or comfortable.” If a new program proudly states they “meet the minimums,” press them hard about where they expect to land in a few years and how.

4. Are external rotations a reliable way for new programs to fix low case volume?

Sometimes, but not automatically. External rotations vary wildly in quality and resident role. You need to know: Are you a true participant or just an observer at the host site? Are there already home institution residents or fellows who get priority? Are housing, travel, and scheduling realistic? Well-structured external rotations with solid agreements can rescue a weak home volume. Vague “plans” to send you elsewhere rarely do.

5. What is the single best question to expose misleading case volume data?

Ask this:

“Can you show me anonymized, real ACGME case logs or summary statistics for your current senior residents and walk me through where those numbers fall relative to national averages and your own goals?”

A confident, transparent program will have some version of an answer and data. A program that starts hand-waving, changing the subject, or hiding behind “we are still building” is telling you a lot—without meaning to. Believe that. Then decide if you are willing to gamble your training on it.

If you remember nothing else:

- Projected volume is not real volume. Demand per-resident, per-year numbers.

- ACGME minimums are a floor, not a goal. You want programs that clearly exceed them.

- Beautiful hospital volume and pretty dashboards do not train you. Actual logged cases in your name do.