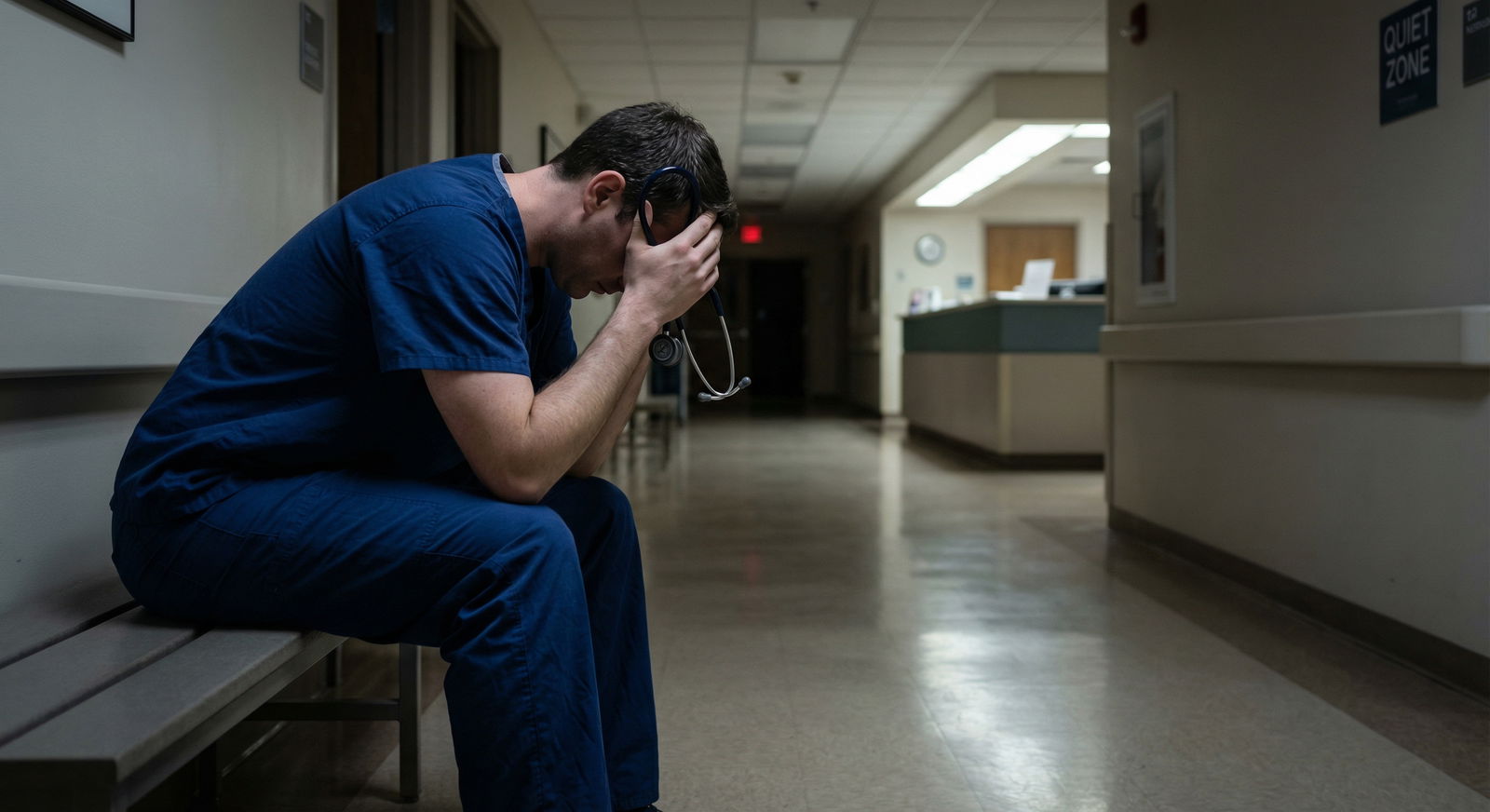

The story your residency program is telling you about burnout is incomplete. The numbers are far worse than the wellness emails suggest.

The data over the last decade is brutally consistent: resident burnout is not an exception, it is the default outcome in many training environments. And most programs either minimize, misread, or strategically under-report what is actually happening.

Let me walk through what the numbers show, what your program almost certainly is not saying out loud, and how to interpret the “survey data” you keep being asked to fill out.

1. What the Big Datasets Actually Show About Burnout

Start with the broadest signal: national surveys. The percentages are not subtle.

Across specialties, multiple large-scale surveys consistently show resident burnout rates between 40% and 80%, depending on the specialty and the instrument used.

| Specialty | Approximate burnout rate (%) |
|---|---|
| Internal Med | 55 |
| Gen Surg | 65 |
| EM | 70 |
| Pediatrics | 45 |
| Psych | 40 |
| Anesthesiology | 50 |

These are composite estimates drawn from repeated survey patterns:

- Internal Medicine residents: roughly 50–60% report burnout in most major surveys.

- General Surgery: often in the 60–70% range, with some programs spiking higher.

- Emergency Medicine: 65–75% in high-volume, understaffed systems.

- Pediatrics and Psychiatry: typically lower but still often 35–50%.

- Anesthesiology: usually reported around 45–55%.

You do not get those numbers from a “few struggling residents.” That is structural. That is the training environment.

Now, layer in mental health indicators. Where burnout is high, depression, anxiety, and suicidal ideation follow.

Meta-analyses of resident physicians repeatedly show:

- Depressive symptoms in residents: around 25–30% at any given time.

- Suicidal ideation in residents: roughly 6–10% report it during training.

- Self-reported major medical error associated with burnout or depression: odds ratios often in the 2.0–3.0 range.

Your program might mention “wellness.” It is less likely to tell you that, statistically, a class of 30 residents looks something like this:

- 15–20 will meet burnout criteria at some point this year.

- 7–10 will have clinically significant depressive symptoms.

- 2–3 will seriously contemplate suicide.
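The head counts above are just prevalence multiplied by class size. Here is a minimal sketch of that arithmetic, using the depressive-symptom and suicidal-ideation ranges quoted in this section. The function name `expected_count_range` is made up for illustration.

```python
def expected_count_range(class_size, low_rate, high_rate):
    """Return (low, high) expected head counts for a prevalence range."""
    return class_size * low_rate, class_size * high_rate


CLASS_SIZE = 30  # class size used in the text

# Prevalence ranges quoted earlier in this section, as fractions.
PREVALENCE = {
    "depressive symptoms": (0.25, 0.30),
    "suicidal ideation": (0.06, 0.10),
}

for label, (low_rate, high_rate) in PREVALENCE.items():
    low_n, high_n = expected_count_range(CLASS_SIZE, low_rate, high_rate)
    print(f"{label}: {low_n:.1f} to {high_n:.1f} residents out of {CLASS_SIZE}")
```

That works out to roughly 7.5 to 9 residents with depressive symptoms and about 2 to 3 with suicidal ideation, which is where the rounded figures in the list come from.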

That is the baseline picture.

2. The Numbers Programs Like to Quote vs Reality

Here is the first big disconnect: the numbers your program highlights are usually selected for optics, not accuracy.

You will see slides that say things like:

- “Our wellness survey showed 72% of residents are satisfied with their program.”

- “Only 18% reported feeling ‘burned out most of the time.’”

- “Our burnout scores improved 10% after launching wellness initiatives.”

Those statements often hide three common statistical games.

Game 1: Redefining Burnout

Burnout is usually measured with tools like the Maslach Burnout Inventory (MBI) or abbreviated variants. These scales have specific cutoffs for “high emotional exhaustion,” “high depersonalization,” and “low personal accomplishment.”

Programs sometimes:

- Use nonstandard cutoffs (e.g., calling “moderate” scores “not burned out”).

- Report only one subscale (e.g., “low depersonalization”) and ignore high exhaustion.

- Combine categories so “moderate + low” = “not burned out.”

Result: a resident with clinically meaningful symptoms may not show up in the reported “burnout rate.”
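To see how much the cutoff alone matters, consider a small sketch with invented numbers. The scores and both thresholds below are hypothetical and are not the published MBI cutoffs. The point is only that the same raw data yields very different “burnout rates” depending on where the line is drawn.

```python
# Hypothetical exhaustion scores for 10 residents (higher = worse).
scores = [12, 18, 22, 25, 27, 29, 31, 34, 38, 45]


def burnout_rate(scores, cutoff):
    """Share of residents scoring at or above the cutoff."""
    flagged = sum(1 for s in scores if s >= cutoff)
    return flagged / len(scores)


conventional_cutoff = 27  # assumed "high" threshold for this illustration
relabelled_cutoff = 35    # assumed stricter bar chosen for better optics

print(f"Conventional cutoff: {burnout_rate(scores, conventional_cutoff):.0%}")
print(f"Relabelled cutoff:   {burnout_rate(scores, relabelled_cutoff):.0%}")
```

With these made-up scores, six of ten residents count as burned out under the first cutoff and only two under the second. Nothing about the residents changed.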

Game 2: Selective Response and Under-reporting

Resident survey data is biased by fear and fatigue. Your program knows this. Many still treat the output as if it is clean.

Typical issues:

- Response rates: 40–70% is common. Burned-out residents are less likely to complete long institutional surveys unless there is clear value or safety.

- Fear of re-identification: on “anonymous” surveys with small programs or granular questions (PGY year, gender, track, etc.), many residents self-censor.

- Social desirability bias: residents know the “right” answers that avoid trouble.

In practice, that means the reported 45% burnout in your program could easily represent a lower bound, not a precise estimate.

Game 3: Aggregating Data to Bury Hotspots

Even when programs use valid instruments, they often report only aggregate numbers.

So instead of showing:

- PGY-1 burnout: 65%

- PGY-2 burnout: 80%

- PGY-3 burnout: 45%

They present a single “overall burnout rate: 63%,” skipping the fact that PGY-2 is a complete disaster.

Or they average across sites:

- High-volume county hospital: 75%

- Cushy private affiliate: 35%

Reported: “Program-wide burnout: 55%.”

Blending across PGY levels and hospital sites hides where the environment is actually toxic.
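The aggregation effect is easy to reproduce. The sketch below uses the PGY-level rates from the example above and assumes, purely for illustration, ten respondents per class. The class sizes and the helper name `overall_rate` are not from any real program.

```python
# (stratum, respondents, burnout rate); class sizes are assumed to be equal.
strata = [
    ("PGY-1", 10, 0.65),
    ("PGY-2", 10, 0.80),
    ("PGY-3", 10, 0.45),
]


def overall_rate(strata):
    """Respondent-weighted average burnout rate across strata."""
    total = sum(n for _, n, _ in strata)
    burned_out = sum(n * rate for _, n, rate in strata)
    return burned_out / total


worst_name, _, worst_rate = max(strata, key=lambda s: s[2])

print(f"Reported headline: {overall_rate(strata):.0%}")
print(f"Worst stratum: {worst_name} at {worst_rate:.0%}")
```

The headline lands at about 63%, while the worst class sits at 80%. If class sizes are unequal, the weighted average shifts, but the hotspot still disappears from the topline.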

3. What Your Program Probably Is Not Telling You Directly

Let me be blunt: a lot of what residents are not told is visible in national-level data and program-level survey patterns.

3.1. Burnout Is Highly Predictable From Workload Metrics

Burnout does not appear randomly. It tracks specific load and control variables:

- Weekly hours consistently above 60–65.

- Frequent overnight shifts and >6 consecutive days worked.

- High patient caps with low support staff.

- High documentation burden and poorly designed EHR workflows.

- Low perceived control over scheduling and rotations.

| Average weekly hours | Approximate burnout rate (%) |
|---|---|
| 50 hrs | 30 |
| 55 hrs | 38 |
| 60 hrs | 47 |
| 65 hrs | 56 |
| 70 hrs | 64 |
| 75 hrs | 72 |

Patterns like this show up repeatedly: as average weekly work hours climb from about 50 to 75, the share of residents reporting burnout more than doubles.
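A rate and an odds are not the same quantity, and it is worth knowing which one a slide is quoting. A rough sketch using the two endpoints of the illustrative figures above (30% at 50 hours, 72% at 75 hours), with helper names that are my own:

```python
def odds(probability):
    """Convert a probability (0 to 1, exclusive of 1) into odds."""
    return probability / (1.0 - probability)


rate_at_50_hours = 0.30
rate_at_75_hours = 0.72

rate_ratio = rate_at_75_hours / rate_at_50_hours
odds_ratio = odds(rate_at_75_hours) / odds(rate_at_50_hours)

print(f"Rate ratio: {rate_ratio:.1f}x")
print(f"Odds ratio: {odds_ratio:.1f}x")
```

On these figures the burnout rate rises about 2.4-fold while the odds rise about 6-fold. When burnout is this common, odds ratios look much larger than the change in rate, so ask which one you are being shown.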

Many programs will talk about “resilience” and “mindfulness” before they talk seriously about:

- Cutting unnecessary documentation.

- Hiring more NPs/PAs or scribes.

- Reducing non-educational scut.

- Rationalizing call schedules.

Because those changes cost real money and political capital. Meditation workshops are cheaper.

3.2. Burnout Is Not Just a “You Problem,” It Is a Safety Signal

Resident burnout is strongly associated with:

- Increased self-reported major medical errors.

- Lower patient satisfaction scores.

- Higher rates of absenteeism and presenteeism.

- Increased turnover and early career exits.

Several studies show residents with burnout are around twice as likely to report having made a significant medical error in the recent past. In some analyses, the association is even stronger.

Your program might treat burnout as an individual wellness issue. Statistically, it is a quality and safety metric.

Programs rarely frame it that way internally, because accepting that link means:

- Burnout rates belong on the same dashboard as infection rates and readmissions.

- Leadership is accountable, not just individual residents.

3.3. “Wellness Initiatives” Often Do Not Move the Needle

If your program added:

- Yoga sessions

- Free snacks

- A mindfulness app

- A “resilience” workshop

You probably saw a slide a year later: “Wellness scores improved.”

Look for these tells:

- No actual change in duty hour patterns.

- No adjustment to staffing ratios.

- No meaningful role for residents in schedule design.

- No added mental health access with low-friction, confidential pathways.

The data is fairly consistent: structural changes (hours, workload, staffing, autonomy) produce real, durable reductions in burnout. Surface-level wellness offerings typically produce:

- Short-term “satisfaction with wellness resources” bumps.

- Minimal or no change in core burnout measures.

If the only numbers your program shares are about “engagement with wellness programming” or “satisfaction with resources,” assume the core burnout metrics did not meaningfully improve.

4. How to Read and Question Your Program’s Survey Data

You are being surveyed constantly: ACGME surveys, internal climate surveys, wellness surveys, DEI surveys. Most residents click through and move on.

If you want to understand what is actually going on, you need to read their data the way a skeptical analyst would.

Step 1: Demand the Denominators

When they say:

- “Only 20% of residents reported high burnout.”

Ask:

- Out of how many respondents?

- What was the response rate by PGY level?

- Were there differences by site or track?

A burnout rate of 20% from a 50% response rate is not reassuring. It could mean:

- Only 10% of all residents disclosed burnout.

- The other 50% of residents did not respond at all, and they are often the most burned out.

Without denominator detail, the topline percentage does not mean much.
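One way to make the denominator problem concrete is to compute worst-case bounds on the true rate: assume first that no non-respondent is burned out, then that every one of them is. This is a deliberately crude sketch, not a statistical correction, and the function name is invented for illustration.

```python
def worst_case_bounds(observed_rate, response_rate):
    """Bounds on the whole-program rate given only respondent data.

    observed_rate: burnout rate among respondents (0 to 1).
    response_rate: fraction of residents who responded (0 to 1).
    """
    disclosed = observed_rate * response_rate   # share of ALL residents
    non_respondents = 1.0 - response_rate
    lower = disclosed                           # no non-respondent burned out
    upper = disclosed + non_respondents         # every non-respondent burned out
    return lower, upper


lower, upper = worst_case_bounds(observed_rate=0.20, response_rate=0.50)
print(f"True burnout rate is somewhere between {lower:.0%} and {upper:.0%}")
```

For the “20% at a 50% response rate” example, the true figure could sit anywhere from 10% to 60%. The reported number only becomes informative as the response rate approaches 100%.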

Step 2: Ask What Instrument and Cutoffs They Used

You want to know:

- Was this a validated scale (e.g., full or abbreviated MBI)?

- How did the program define “burnout”?

- Did they report subscales separately?

If they say something vague like “we used an internal burnout measure,” assume they retained maximum flexibility to classify the results however they wanted.

Step 3: Push for Stratified Data

Aggregated averages are the easiest way to hide problem areas.

The meaningful stratifications are:

- PGY level (PGY-1 vs PGY-2 vs PGY-3+).

- Clinical site (county vs VA vs private).

- Service type (inpatient ward vs ICU vs ED vs clinic).

| Group | Reported Burnout Rate |
|---|---|
| Overall Program | 52% |
| PGY-1 County | 78% |
| PGY-2 County | 83% |
| PGY-3 County | 60% |
| PGY-2 Private | 35% |
| PGY-2 VA | 40% |

Programs that are serious about burnout will show cuts like this and then link them to action: schedule changes, redistribution of workload, additional staffing.

If all you ever see is “overall program burnout declined from 58% to 52%,” interpret that as: “We are not ready to show you where things are still broken.”
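If your program shares de-identified respondent-level data, or you are on a committee that sees it, a stratified cut takes a few lines. The records below are invented and the field layout is an assumption. The sketch reports the count alongside each rate so the denominator is never hidden.

```python
from collections import defaultdict

# Hypothetical respondent-level records: (PGY level, site, burned_out flag).
responses = [
    ("PGY-1", "County", True),
    ("PGY-1", "County", True),
    ("PGY-1", "County", False),
    ("PGY-2", "County", True),
    ("PGY-2", "County", True),
    ("PGY-2", "Private", False),
    ("PGY-2", "Private", True),
    ("PGY-2", "Private", False),
]


def stratified_rates(responses):
    """Return {(pgy, site): (burned_out_count, respondents, rate)}."""
    counts = defaultdict(lambda: [0, 0])
    for pgy, site, burned_out in responses:
        counts[(pgy, site)][0] += int(burned_out)
        counts[(pgy, site)][1] += 1
    return {key: (b, n, b / n) for key, (b, n) in counts.items()}


for (pgy, site), (b, n, rate) in sorted(stratified_rates(responses).items()):
    print(f"{pgy} {site}: {b}/{n} = {rate:.0%}")
```

Cells this small are noisy and can make individuals easier to identify, which is one reason programs give for not sharing them. That is an argument for careful presentation, not for reporting the aggregate alone.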

Step 4: Compare to External Benchmarks

Two key benchmarks you can quietly compare against:

- National specialty-specific burnout averages.

- National ACGME survey benchmarks (if your PD references them).

If your internal medicine program claims “we have relatively low burnout at 40%,” but national estimates for IM residents are in the 50–60% range, that might be encouraging. If they claim 15%, and nothing structural looks dramatically different, be skeptical.

5. What Actually Reduces Burnout (According to Data, Not Wellness Flyers)

Residents are understandably cynical about burnout advice. For good reason. You get told to “meditate” while charting at midnight on hour 79 of the week.

The better question is: which interventions actually show measurable impact in the data?

5.1. Structural and Schedule-Level Changes

These are the levers that tend to move numbers:

- Lowering average weekly work hours from ~70 to ~55–60.

- Enforcing real caps on admissions and unique patient load per resident.

- Reducing consecutive days worked and nights in a block.

- Improving staffing: scribes, NPs/PAs, transport, phlebotomy, unit secretaries.

- Streamlining EHR workflows and removing redundant documentation.

| Period | Burned Out (%) | Not Burned Out (%) |
|---|---|---|
| Before Changes | 65 | 35 |
| After Changes | 40 | 60 |

Programs that have implemented real schedule and workflow reforms frequently report:

- 15–25 percentage point reductions in burnout over 1–2 years.

- Better ACGME duty hour and satisfaction scores.

- Lower turnover and fewer leave-of-absence episodes.

You almost never see changes of that magnitude from “wellness curriculum” alone.
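When a slide says burnout “improved” by some percentage, check whether that is percentage points or relative change. A short sketch using the illustrative before-and-after figures above (65% to 40%), with function names chosen for this example:

```python
def change_in_points(before_pct, after_pct):
    """Absolute reduction, in percentage points."""
    return before_pct - after_pct


def relative_change_pct(before_pct, after_pct):
    """Reduction as a percentage of the starting value."""
    return (before_pct - after_pct) / before_pct * 100


before, after = 65, 40
print(f"Absolute: {change_in_points(before, after)} percentage points")
print(f"Relative: {relative_change_pct(before, after):.1f}% lower than before")
```

The same change is 25 percentage points, or roughly a 38% relative reduction. Small absolute changes can sound impressive when quoted in relative terms, so ask for both the starting and ending rates.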

5.2. Autonomy, Voice, and Psychological Safety

Burnout is worse when you have high responsibility and low control. Residents live in that quadrant.

Predictors of lower burnout include:

- Real resident representation in schedule design and workflow committees.

- Safe channels to flag dangerous workloads without retaliation.

- Attendings and leadership who visibly act on resident feedback.

This is harder to quantify, but surveys that measure “sense of control,” “voice in decision making,” and “trust in leadership” often correlate strongly with burnout scores.

Programs do not always share these items, because when leadership trust scores are low, it is politically uncomfortable.

5.3. Access to Confidential Mental Health Support

The data is clear on two points:

- Residents have high rates of depression, anxiety, and suicidal ideation.

- Many avoid treatment due to fear of licensing, stigma, and lack of time.

Interventions that work statistically tend to have:

- Anonymous or low-friction referral processes.

- Off-site, confidential providers not directly tied to GME evaluators.

- Protected time to attend appointments.

- Explicit assurances around licensing and credentialing questions.

Programs will often advertise “free counseling,” but if it is:

- During work hours with no coverage.

- In the same building as your PD’s office.

- With documentation in an EMR leadership can theoretically access.

Utilization will be low, and the effect on burnout metrics will be limited.

6. Reading Your Own Risk: A Numbers-Based Self-Check

You do not need a 60-question survey to know you are in trouble. Burnout risk can be approximated from a few quantitative and quasi-quantitative signals.

Here is a practical checklist, informed by what the data shows:

Average weekly work hours over last 4 weeks:

- Under 55: lower risk (not zero, but statistically lower).

- 55–65: moderate risk.

- Over 65: high risk; over 70: extremely high risk.
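If you log your hours anyway, the bands above are simple to apply. This is a sketch of the thresholds exactly as listed, not a validated screening tool. The function names and the sample weeks are made up.

```python
def average_weekly_hours(weekly_hours):
    """Mean of the logged weekly totals (e.g., the last 4 weeks)."""
    return sum(weekly_hours) / len(weekly_hours)


def hours_risk_band(avg_hours):
    """Map average weekly hours to the risk bands listed in the text."""
    if avg_hours > 70:
        return "extremely high"
    if avg_hours > 65:
        return "high"
    if avg_hours >= 55:
        return "moderate"
    return "lower"


last_four_weeks = [62, 71, 68, 74]  # hypothetical logged hours
avg = average_weekly_hours(last_four_weeks)
print(f"Average {avg:.2f} h/week: {hours_risk_band(avg)} risk")
```

The hypothetical month above averages 68.75 hours and lands in the high band, even though one of the four weeks was under 65.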

Sleep:

- Fewer than 6 hours most nights for several weeks: strongly associated with burnout and errors.

- Frequent post-call “sleep debt” that never catches up on days off.

Emotional state and behavior:

- Cynicism and detachment toward patients most days.

- Loss of empathy you notice in yourself (and that would have horrified your MS3 self).

- Persistent feeling that nothing you do matters.

Functional impairment:

- Recurrent thoughts of quitting medicine or fantasizing about non-existence.

- Increased errors, near-misses, or cognitive slips you recognize.

- Using alcohol or other substances more heavily to “shut off.”

If you are hitting multiple high-risk boxes, assume your probability of being in that 50–70% “burned out” group is not theoretical. It is you.

7. What You Can Push For (Individually and Collectively)

You cannot fix a broken system alone, but you are not powerless. Your influence is highest when you anchor your asks in data, not just emotion.

Concrete things you and co-residents can ask leadership for, framed explicitly with evidence:

Transparent reporting:

- Share burnout data by PGY level and site.

- Share response rates and instruments used.

- Commit to longitudinal tracking with the same metric.

Schedule reform pilots:

- Trial rotation-specific changes (e.g., ICU or busy wards).

- Attach target outcomes: reduced burnout scores, fewer errors, improved satisfaction.

Task unloading:

- Identify top 3 non-educational, time-sink tasks per rotation.

- Present estimates: “This step alone wastes X resident-hours per month.”

- Tie to patient safety: fewer exhausted residents at 2 a.m. leads to fewer mistakes.
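The “X resident-hours per month” figure is a back-of-envelope multiplication, and it lands better when you show the inputs. Every number below is a placeholder to be replaced with your own rotation's estimates. The function name is invented for this sketch.

```python
def resident_hours_per_month(minutes_per_task, tasks_per_resident_per_day,
                             residents_on_service, working_days_per_month):
    """Estimate total resident-hours a recurring task consumes each month."""
    total_minutes = (minutes_per_task
                     * tasks_per_resident_per_day
                     * residents_on_service
                     * working_days_per_month)
    return total_minutes / 60


# Placeholder inputs: a 10-minute task done 6 times a day by each of
# 8 residents over 26 working days.
wasted = resident_hours_per_month(
    minutes_per_task=10,
    tasks_per_resident_per_day=6,
    residents_on_service=8,
    working_days_per_month=26,
)
print(f"Roughly {wasted:.0f} resident-hours per month")
```

With these placeholder inputs the task consumes about 208 resident-hours a month. Leadership can dispute the inputs, which is useful: it moves the conversation from whether the burden exists to how large it is.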

Mental health access that is actually usable:

- Offsite options, virtual options, and protected time.

- Explicit policies that treatment will not penalize evaluations or career.

You will not win every battle. But programs that have moved burnout numbers meaningfully almost always had residents who:

- Understood the data.

- Used it strategically.

- Refused to accept “we added snacks” as a solution.

8. The Data Your Program Is Not Sharing – And Why It Matters

If you remember nothing else, remember this: burnout survey data is not just about how “happy” residents feel. It is an operational metric that tracks:

- How sustainably your program is using human capital.

- How likely serious errors and near-misses are.

- How many of your co-residents will quietly leave medicine or carry long-term psychological scars.

Programs that minimize or obscure burnout data are not just being vague. They are avoiding accountability for the predictable consequences of the systems they maintain.

Residents, on the other hand, live those consequences daily.

| Status | Residents (per 100) |
|---|---|
| No significant symptoms | 40 |
| Burnout only | 30 |
| Burnout + Depression | 20 |
| Suicidal Ideation | 10 |

In a typical residency cohort of 100 residents, patterns like the breakdown above are common:

- Around 40 without significant symptoms at a given time.

- 30 with burnout alone.

- 20 with burnout plus significant depressive symptoms.

- 10 with recent suicidal ideation.

Your program might never show you a chart like this. You should see it anyway. Because those categories have names and faces you know. Sometimes, they include you.

Key Takeaways

- National data show resident burnout is common, often affecting 40–80% of trainees depending on specialty and environment. Your program is not magically exempt.

- Programs frequently understate the problem using selective metrics, soft definitions, and aggregate reporting that hide PGY, site, and service-level hotspots.

- Real reductions in burnout come from structural change—hours, workload, staffing, and autonomy—not from snacks and resilience workshops.

FAQ

1. My program says our burnout rate is “only 20%.” Should I believe that?

Treat that number as a hypothesis, not a fact. Ask what instrument they used, how they defined “burnout,” and what the response rate was. If the survey was optional with less than ~70% response, used nonstandard cutoffs, or did not show PGY/site breakdowns, the reported rate is almost certainly an underestimate.

2. Does reporting burnout or distress on surveys put my career at risk?

On de-identified, aggregate surveys (like ACGME), individual answers are not sent to your program with your name attached. Risk increases on small internal surveys that ask highly specific demographic and rotation questions, or when you put detailed personal disclosures in free-text comments. If you are concerned, use quantitative scales honestly and be more cautious with identifiable narrative detail.

3. If I am already burned out, is the only solution to leave my program or medicine entirely?

No. The data show that changes in workload, schedule, support, and mental health treatment can substantially reduce burnout symptoms, even for residents who were in significant distress. That said, in environments where leadership is unresponsive and abuse is normalized, transfer or exit can sometimes be the rational choice. The key is to treat your state as a measurable condition that responds to interventions, not as a personal failure.