The way most applicants rank residency programs is lazy and dangerously emotional.

You finish interviews, feel good (or bad) about a day, and then you “go with your gut.” That is how people end up in programs that looked shiny on interview day but are miserable to work at for four years.

You need a system. Not vibes.

This is where post‑interview notes come in. Done correctly, they give you an objective backbone for your rank order list (ROL) while still leaving room for your instincts. Done poorly—or not at all—they leave you vulnerable to recency bias, charisma traps, and fake “family” vibes.

Let me show you how to build a structured post‑interview note process that actually helps you rank programs rationally.

Step 1: Stop Trusting Your Memory

Your memory after 10+ interviews is garbage. Your brain will blend programs together:

- The strong didactics from Program A get mentally attached to the warm residents from Program C.

- The awful call schedule from Program D somehow disappears.

- You remember the catered lunch, not the vague answer about fellowship match.

I have watched fourth‑years flip through their interview season trying to rank and say things like:

“Wait, was this the one where the PD was kind of weird? Or was that the other midwest program?”

That is not how you decide where to spend the next 3–7 years.

So rule one: your post‑interview note is the only memory that counts. Not the highlight reel in your head two months later.

To make that work, you must:

- Capture notes the same day as the interview (within 4–6 hours if possible).

- Use the same structure for every program.

- Separate facts from feelings.

We will build that structure now.

Step 2: Build a Standardized Post‑Interview Template

You need a template that you can fill out in 15–25 minutes right after each interview. Not a novel. Not scattered bullet points. A repeatable form.

Here is the core structure I recommend.

A. Core Data Snapshot

This is the “at a glance” section you will use later when comparing:

- Program name

- City / Region

- Specialty (if you interviewed at more than one)

- Interview date

- Track (categorical, advanced, prelim, etc.)

- Number of residents per year

- EMR / main hospital system

- Call schedule summary (e.g., “q4 28‑hr,” “night float PGY‑2+”)

- Unique features (e.g., “only trauma center in region,” “3+1 clinic model”)

Keep this short and factual. No opinions here.

B. Objective Domains (Scored)

Pick 6–10 domains that actually matter to your training and life. Not what sounds “prestigious” on Reddit. Use the same domains and scoring scale for every program.

Common domains that work well:

- Clinical Volume & Breadth

- Teaching & Didactics

- Resident Culture & Support

- Program Leadership & Stability

- Fellowship / Job Placement

- Workload & Schedule

- Wellness & Benefits

- Location & Cost of Living

- Research & Academic Opportunities

- Program Reputation (Real, Not Imagined)

Use a 1–5 or 1–7 scale. I like 1–5 because you will actually use it:

- 1 = Terrible / major concern

- 2 = Below average, noticeable weaknesses

- 3 = Acceptable / middle of the road

- 4 = Strong

- 5 = Outstanding

You will not use every domain for every specialty. For example, research might matter heavily for neurosurgery but be less critical for community FM if you do not care about academics. That is fine. You will weight them later.

C. Qualitative Notes: Facts vs Impressions

Split this section into two clearly labeled parts:

- Facts / Concrete Observations

  - “Call: q4 28‑hr till January PGY‑1, then night float PGY‑2+”

  - “No in‑house ICU fellows; residents run codes overnight”

  - “Recent ACGME citation for duty hour violations (PD said resolved)”

  - “Board pass rate 100% last 3 years”

- Impressions / Vibes (Unfiltered)

  - “Residents looked exhausted but said they were ‘a family’ in a forced way”

  - “PD gave generic answers, seemed distracted”

  - “Felt genuinely welcomed; residents answered questions honestly”

  - “City felt too small; partner may hate it”

You want both. The separation matters because when you review later, you may realize your impression was off, but the facts will still stand.

D. Critical Red Flags and Green Flags

This section forces you to explicitly identify deal‑breakers and strong positives:

Red Flags:

- “Multiple residents independently warned about malignant chief”

- “PD dodged question about recent resident attrition”

- “Residents discouraged moonlighting due to burnout levels”

Green Flags:

- “Residents repeatedly mentioned PD advocating with hospital admin for them”

- “Several graduates matched into top‑tier fellowships in my area of interest”

- “Flexibility for research/track built into schedule”

Limit yourself to 3–4 bullet points max in each. If everything is a red flag, that is its own answer.
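If you prefer to keep the template in code rather than a document, the four sections above can be sketched as a small Python record. This is a minimal illustration, not a required tool: the class name `InterviewNote`, its field names, and the `problems()` helper are all hypothetical, and the flag limit of 4 simply mirrors the "3–4 bullet points max" rule.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Domain names copied from the list in section B; trim to the 6-10 you actually use.
DOMAINS = (
    "Clinical Volume & Breadth", "Teaching & Didactics",
    "Resident Culture & Support", "Program Leadership & Stability",
    "Fellowship / Job Placement", "Workload & Schedule",
    "Wellness & Benefits", "Location & Cost of Living",
    "Research & Academic Opportunities", "Program Reputation",
)
MAX_FLAGS = 4  # assumption: upper end of the "3-4 bullets" limit


@dataclass
class InterviewNote:
    # A. Core data snapshot (facts only)
    program: str
    city: str
    interview_date: str
    # B. Scored domains, each on the 1-5 scale
    scores: Dict[str, int] = field(default_factory=dict)
    # C. Facts and impressions kept in separate lists
    facts: List[str] = field(default_factory=list)
    impressions: List[str] = field(default_factory=list)
    # D. Red and green flags
    red_flags: List[str] = field(default_factory=list)
    green_flags: List[str] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return human-readable issues with this note (empty list if none)."""
        issues = []
        for domain, score in self.scores.items():
            if domain not in DOMAINS:
                issues.append(f"unknown domain: {domain}")
            elif not 1 <= score <= 5:
                issues.append(f"score out of range for {domain}: {score}")
        if len(self.red_flags) > MAX_FLAGS:
            issues.append("too many red flags listed")
        if len(self.green_flags) > MAX_FLAGS:
            issues.append("too many green flags listed")
        return issues


note = InterviewNote(
    program="Example Program", city="Example City", interview_date="2024-11-05",
    scores={"Teaching & Didactics": 4, "Workload & Schedule": 6},
)
print(note.problems())
```

Using the same record for every program is what makes the notes comparable later; the validation step only catches slips such as a score outside the 1–5 scale.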

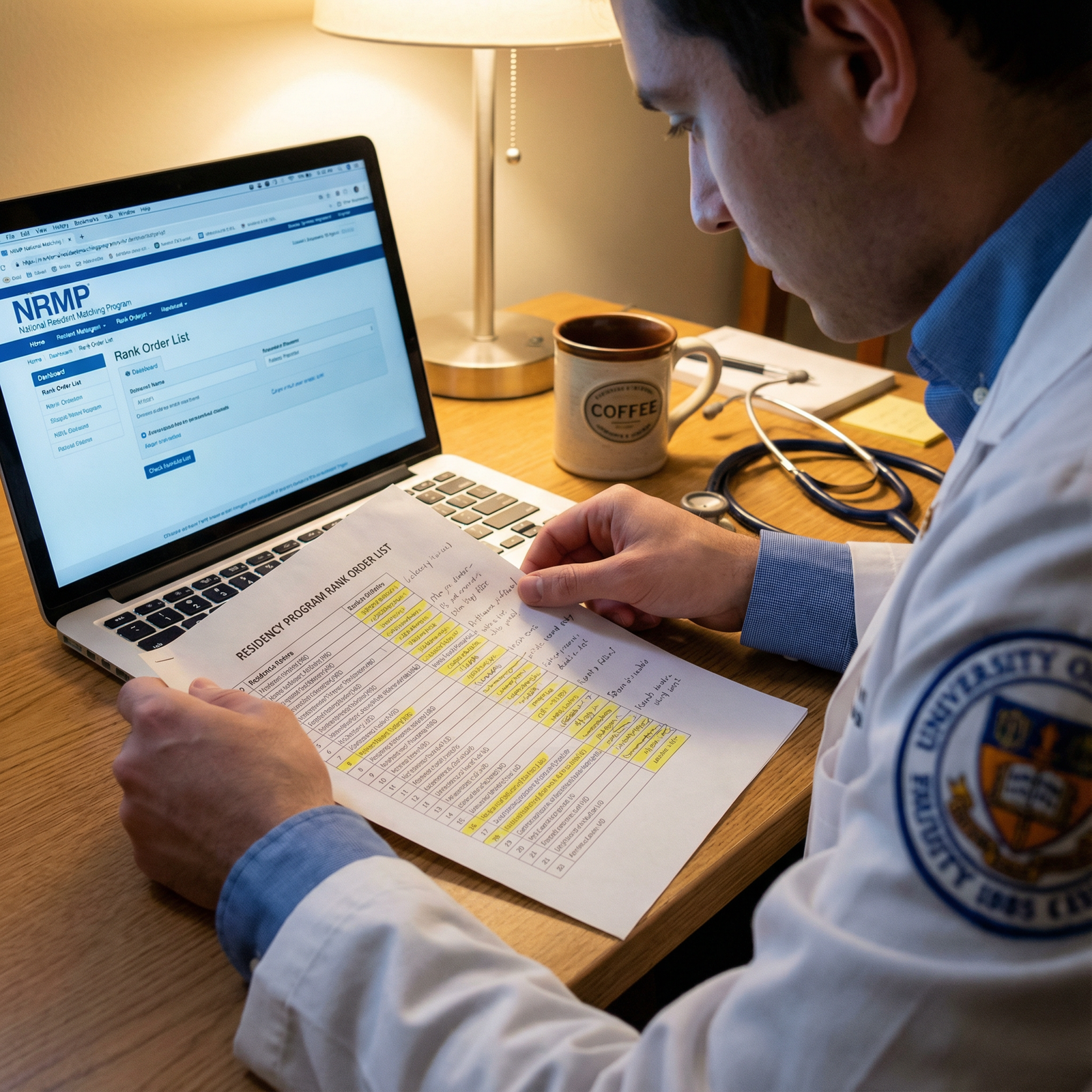

Step 3: Capture Notes the Same Day – Without Breaking NRMP Rules

NRMP rules govern what you and programs may ask each other about rank intentions. Nothing in them stops you from taking notes for yourself.

The workflow I recommend:

Before Interview Season Starts

- Create a master spreadsheet (Excel, Google Sheets, Notion, whatever) with one row per program and columns matching your template.

- Or, create a one‑page form (Word, Notion template, OneNote page) that you duplicate for each program.

Immediately After Each Interview (Same Day)

- Go to a quiet space (hotel room, car, airport gate).

- Spend 5 minutes brain‑dumping raw impressions in a blank section: what stood out, how you felt, anything weird.

- Then fill out your structured template from top to bottom.

- Score each domain BEFORE you talk to classmates about their experience at the same program.

Within 24 Hours

- Re‑read what you wrote.

- Ask: “Did I over‑ or under‑react to anything?”

- Adjust domain scores only if you realize you misunderstood something factual.

Do not rely on scribbled notes in a folder you never open again. Make it searchable and sortable.

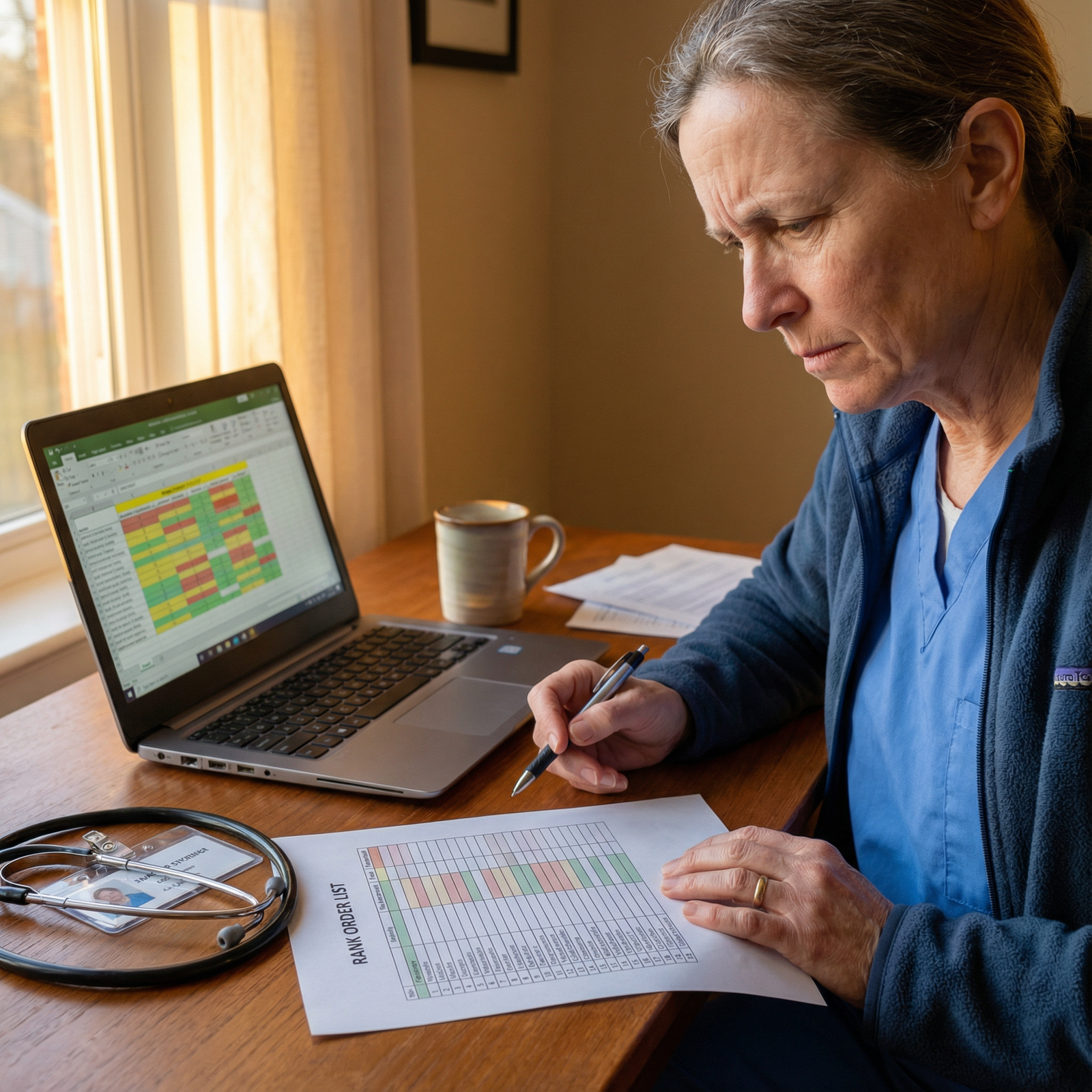

Step 4: Turn Notes into a Scoring System That Actually Works

Now the key move: you convert these notes into a weighted score for each program. Not because life can be reduced to a number. It cannot. But because numbers force you to confront your own priorities.

A. Decide Your Weights Before You Look at Scores

This is where many people lie to themselves.

If you ask them in October, they say, “Education and culture are what matter most.”

Then in February they rank the big‑name prestige program with toxic culture above the mid‑tier program where they were clearly happy.

So you decide weights before you calculate anything:

Example for Internal Medicine (just to illustrate):

- Clinical Volume & Breadth – 20%

- Teaching & Didactics – 15%

- Resident Culture & Support – 20%

- Program Leadership & Stability – 10%

- Fellowship / Job Placement – 10%

- Workload & Schedule – 10%

- Wellness & Benefits – 5%

- Location & Cost of Living – 10%

This is personal. A partnered applicant with kids in tow might put 25–30% on location and schedule. A single applicant dead‑set on competitive fellowship may push fellowship placement and research up to 40%.

But lock this before you bring specific programs into it, or you will rationalize.

B. Calculate Weighted Scores

For each program:

- Convert domain scores (1–5) to a 0–100 scale or use them as is.

- Multiply by the weight for each domain.

- Sum to get a total objective score.

Example: Program X

- Clinical Volume: 4 (weight 20%) → 4×0.20 = 0.8

- Teaching: 3 (15%) → 3×0.15 = 0.45

- Culture: 5 (20%) → 5×0.20 = 1.0

- Leadership: 4 (10%) → 4×0.10 = 0.4

- Fellowship: 3 (10%) → 3×0.10 = 0.3

- Workload: 2 (10%) → 2×0.10 = 0.2

- Wellness: 4 (5%) → 4×0.05 = 0.2

- Location: 3 (10%) → 3×0.10 = 0.3

Total = 0.8 + 0.45 + 1.0 + 0.4 + 0.3 + 0.2 + 0.2 + 0.3 = 3.65 / 5

You can convert that to 73/100 if that helps you mentally.
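The same arithmetic can be sketched in a few lines of Python, which is handy once you have more than a handful of programs. The function names `weighted_score` and `to_percent` are illustrative, and the conversion to 0–100 assumes the simple "divide by 5, multiply by 100" mapping used above.

```python
# Weights and Program X scores copied from the Internal Medicine example above.
WEIGHTS = {
    "Clinical Volume": 0.20, "Teaching": 0.15, "Culture": 0.20,
    "Leadership": 0.10, "Fellowship": 0.10, "Workload": 0.10,
    "Wellness": 0.05, "Location": 0.10,
}
PROGRAM_X = {
    "Clinical Volume": 4, "Teaching": 3, "Culture": 5, "Leadership": 4,
    "Fellowship": 3, "Workload": 2, "Wellness": 4, "Location": 3,
}


def weighted_score(scores, weights):
    """Sum of (domain score x domain weight); weights must total 100%."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(scores[domain] * weight for domain, weight in weights.items())


def to_percent(score_out_of_5):
    """Rescale a 0-5 weighted score to 0-100."""
    return score_out_of_5 / 5 * 100


total = weighted_score(PROGRAM_X, WEIGHTS)
print(f"{total:.2f} / 5 -> {to_percent(total):.0f} / 100")  # 3.65 / 5 -> 73 / 100
```

The two checks are there because the most common spreadsheet mistakes are weights that no longer add up to 100% after an edit and a domain left unscored for one program.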

C. Use a Simple Table to Compare Programs

Create a comparison table of your top programs based on the weighted score. Here is a simplified example:

| Program | Objective Score (0–100) | Culture Score (1–5) | Workload Score (1–5) |
|---|---|---|---|
| City Univ IM | 82 | 5 | 3 |
| Riverview Med | 77 | 4 | 4 |
| Lakeside Med | 71 | 3 | 5 |
| MetroHealth IM | 68 | 2 | 2 |

You are not going to obey this ranking blindly. But this is your starting point. Not “big name vs small name.” Not “my friend loved it there.”

| Category | Value |
|---|---|
| Clinical Training | 30 |
| Culture & Leadership | 30 |
| Lifestyle & Location | 20 |
| Career Outcomes | 20 |

Step 5: Use Notes to Identify Deal‑Breakers Before You Rank

Objective scores are only useful if you respect your own red lines.

You must decide ahead of time what counts as:

- Hard Stop Red Flag – Program cannot be ranked above X no matter what.

- Soft Concern – Can lower a program in your list but not automatically eliminate it.

Common hard stops I have seen applicants enforce (wisely):

- Repeated, consistent reports of malignant culture.

- Evidence of ACGME issues that leadership downplays or denies.

- Serious location issues (partner cannot work there, visa problems, etc.).

- Total misalignment with your career goals (e.g., no support for the fellowship you want).

When you review your notes, literally mark:

- “Hard Red Flag – Do not rank above Tier 2 list”

- “Hard Red Flag – Remove from list”

- “Soft Concern – Consider but can be outweighed by major positives”

Your red‑flag section in the notes will make this obvious. Unless you try to talk yourself out of it later.
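One way to make the red lines mechanical is to apply them before any sorting by score. The sketch below assumes a simple two-tier reading of the markings above: programs marked for removal are dropped, programs capped at "Tier 2" are listed after every unflagged program regardless of score, and soft concerns change nothing automatically. The constants, the function name, and the sample programs are all hypothetical.

```python
from typing import List, Optional, Tuple

REMOVE = "remove"   # "Hard Red Flag - Remove from list"
TIER_2 = "tier2"    # "Hard Red Flag - Do not rank above Tier 2 list"

# Each entry: (program name, objective score 0-100, hard flag or None)
Program = Tuple[str, float, Optional[str]]


def apply_hard_stops(programs: List[Program]) -> List[str]:
    """Return program names ordered by score, with hard stops enforced first."""
    tier_1 = [p for p in programs if p[2] is None]
    tier_2 = [p for p in programs if p[2] == TIER_2]
    # Programs flagged REMOVE fall into neither tier and are dropped.

    def by_score_desc(p: Program) -> float:
        return -p[1]

    ordered = sorted(tier_1, key=by_score_desc) + sorted(tier_2, key=by_score_desc)
    return [name for name, _, _ in ordered]


programs = [
    ("Prog 1", 82, None),
    ("Prog 2", 90, TIER_2),   # highest score, but capped by a hard red flag
    ("Prog 3", 77, None),
    ("Prog 4", 95, REMOVE),   # not ranked at all
]
print(apply_hard_stops(programs))  # ['Prog 1', 'Prog 3', 'Prog 2']
```

The point of the ordering is that a high score can never buy a program out of a hard stop you set in advance.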

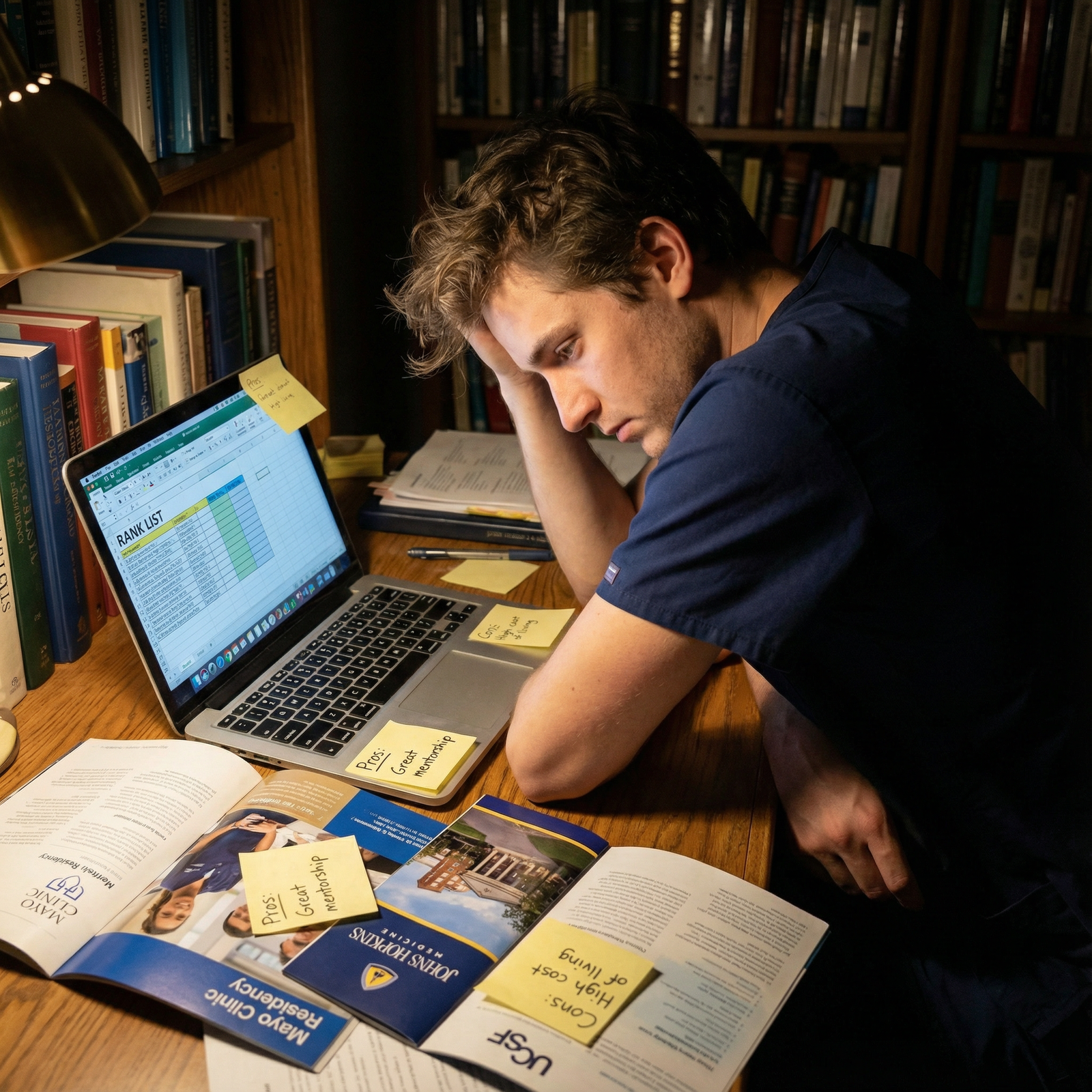

Step 6: Merge Objective Data with Your Gut – Systematically

This is where most people go off the rails. They either:

- Worship the spreadsheet and ignore the fact they hated a place, or

- Throw out all structure and go with “I had a good feeling.”

You want a third path: structured subjectivity.

Here is how:

A. Start From Objective Order

- Sort programs by your total weighted score.

- This is your “cold” preliminary ranking.

- Do not modify anything yet. Just look at it.

B. Add a “Gut Multiplier” – But Cap Its Power

Add a single subjective rating for each program:

- Gut Feel: 1–5

  - 1 = I do not want to be here unless I absolutely must

  - 3 = Neutral / fine

  - 5 = I would be excited to match here

Then allow yourself to adjust each program up or down a maximum of 2 positions based purely on this.

This artificial constraint keeps you from completely overriding your values because the PD was charming or the free lunch was great.

Example:

- Programs sorted by score: A, B, C, D, E

- You have a very strong gut pull toward C over B → You can move C above B.

- You hated D → You can drop D below E.

You are not allowed to move E from 5th to 1st purely on vibes. If you truly feel that strongly, go back and check whether your domain scoring or weights were wrong.
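The cap is easy to check mechanically. The sketch below does not decide the reordering for you; it only reports which programs in your adjusted list ended up more than `max_shift` positions away from their objective position. The function name and the default of 2 are assumptions taken from the rule above.

```python
from typing import List


def check_reordering(objective: List[str], adjusted: List[str], max_shift: int = 2) -> List[str]:
    """Return the programs that moved more than max_shift positions."""
    if sorted(objective) != sorted(adjusted):
        raise ValueError("both lists must contain exactly the same programs")
    start = {name: position for position, name in enumerate(objective)}
    return [
        name
        for position, name in enumerate(adjusted)
        if abs(position - start[name]) > max_shift
    ]


objective = ["A", "B", "C", "D", "E"]

# C moved above B, D dropped below E: every program moved at most one spot.
print(check_reordering(objective, ["A", "C", "B", "E", "D"]))  # []

# E jumped from 5th to 1st purely on vibes: flagged.
print(check_reordering(objective, ["E", "A", "B", "C", "D"]))  # ['E']
```

A non-empty result is the cue to revisit your domain scores or weights rather than to force the move.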

C. Re‑Read Red Flags Before Finalizing

Before you lock anything:

- For each program in your top 5–10, re‑read ONLY:

  - Red Flags

  - Resident culture notes

  - Workload/schedule notes

- Ask yourself: “If I matched here and this red flag turned out to be exactly as bad as I wrote, could I tolerate that for the length of training?”

If the honest answer is no, that program moves down. Even if the name looks good on your CV.

| Step | Description |
|---|---|
| Step 1 | Interview Day |
| Step 2 | Same-Day Notes |
| Step 3 | Score Domains 1-5 |
| Step 4 | Apply Weights |
| Step 5 | Objective Program Score |
| Step 6 | Sort by Score |
| Step 7 | Add Gut Rating |
| Step 8 | Limited Reordering |
| Step 9 | Red Flag Check |
| Step 10 | Finalize Rank List |

Step 7: Keep Everything NRMP‑Compliant and Sane

There is a paranoid myth that if you write anything negative about a program or share anything with friends, it will “get back” to the program and you will be blacklisted. That is not how this works.

You do need to be smart:

- Do not email detailed critiques to group chats that include interns at those programs.

- Do not store notes with rank indications (“#1,” “definitely ranking them first”) in folders shared with faculty.

- Do not tell programs where you are ranking them. NRMP rules still apply.

But you absolutely can:

- Take private notes.

- Discuss impressions with trusted classmates in general terms.

- Ask current residents follow‑up questions via email if something was unclear.

Also, be realistic about noise in your data:

- One overly happy resident on interview day is anecdote.

- Three separate residents quietly telling you “we are overworked and under‑supported” is signal.

Your structured notes help you separate noise from signal over multiple interviews.

Step 8: A Concrete Example – From Interview to Rank Decision

Let us walk through a short, realistic example for someone applying in General Surgery.

You interview at three programs:

- Program A – Metro Surgical Center (big city, big name)

- Program B – Riverbend Medical Center (mid‑sized city, strong clinical volume, supportive culture)

- Program C – Lakeside Community Hospital (smaller program, light call, great location)

Your post‑interview notes (simplified):

Program A – Metro Surgical Center

- Clinical Volume: 5

- Teaching: 4

- Culture: 2 (residents look burnt, mention “sink or swim”)

- Leadership: 3 (PD new, vague on changes)

- Fellowship: 5

- Workload: 1 (q3 28‑hr, frequent “staying late”)

- Location: 4

- Red Flags: Past ACGME citation, vague about fixing workload, residents hint at frequent “unofficial” hours

Program B – Riverbend Medical Center

- Clinical Volume: 4

- Teaching: 4

- Culture: 5 (residents laughing together, honest about pros/cons)

- Leadership: 4 (PD knows residents by name, specific examples of changes made after feedback)

- Fellowship: 4

- Workload: 3

- Location: 3

- Red Flags: None major

- Green Flags: Graduates matching into solid fellowships, multiple residents with kids seem genuinely happy

Program C – Lakeside Community Hospital

- Clinical Volume: 3

- Teaching: 3

- Culture: 4

- Leadership: 4

- Fellowship: 2 (limited, mostly community jobs)

- Workload: 4

- Location: 5

- Red Flags: Limited complex cases, no in‑house transplant or major onc cases

You weight your priorities:

- Clinical Volume – 25%

- Culture – 25%

- Fellowship – 20%

- Workload – 10%

- Teaching – 10%

- Location – 10%

You calculate scores (I will summarize):

- Program A – strong volume and fellowship, but terrible culture and workload → ends up ~73/100

- Program B – strong across the board, great culture → ~81/100

- Program C – lifestyle and location great, but weaker training/fellowship → ~67/100

Objective first pass ranking: B > A > C
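For anyone who wants to check the summary by hand, here is the calculation as a short Python sketch, using the domain scores and weights listed above. Note one assumption: Leadership is scored in the notes but has no weight in this applicant's list, so it is ignored here. Computed exactly, the totals come out to 73, 81, and 67 out of 100, with the same B > A > C order.

```python
# Weights from this applicant's priority list (Leadership carries no weight).
WEIGHTS = {
    "Clinical Volume": 0.25, "Culture": 0.25, "Fellowship": 0.20,
    "Workload": 0.10, "Teaching": 0.10, "Location": 0.10,
}

# Domain scores copied from the three sets of notes above.
SCORES = {
    "Program A": {"Clinical Volume": 5, "Teaching": 4, "Culture": 2, "Leadership": 3,
                  "Fellowship": 5, "Workload": 1, "Location": 4},
    "Program B": {"Clinical Volume": 4, "Teaching": 4, "Culture": 5, "Leadership": 4,
                  "Fellowship": 4, "Workload": 3, "Location": 3},
    "Program C": {"Clinical Volume": 3, "Teaching": 3, "Culture": 4, "Leadership": 4,
                  "Fellowship": 2, "Workload": 4, "Location": 5},
}


def percent_score(scores):
    """Weighted 1-5 score rescaled to 0-100; unweighted domains are skipped."""
    out_of_5 = sum(scores[domain] * weight for domain, weight in WEIGHTS.items())
    return out_of_5 / 5 * 100


results = {name: round(percent_score(s)) for name, s in SCORES.items()}
ranking = sorted(results, key=results.get, reverse=True)

print(results)   # {'Program A': 73, 'Program B': 81, 'Program C': 67}
print(ranking)   # ['Program B', 'Program A', 'Program C']
```

If Leadership matters to you, give it a weight and reduce another domain so the total stays at 100%.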

Your gut:

- You felt excited and safe at B – Gut 5

- Both impressed and intimidated at A – Gut 3

- Felt very comfortable but slightly underwhelmed at C – Gut 4

You allow yourself 1–2 position shifts. Maybe C edges a bit closer to A for you. But then you read the red flags at A again:

“If this culture is as bad as it seems, could I handle that for 5+ years in General Surgery?”

Your honest answer is no. Program B clearly stays at #1. Depending on your risk tolerance, A might even fall to #3.

This is rational ranking. Not “but Metro is famous.”

Step 9: Make Review Day Efficient, Not Overwhelming

When it is finally time to certify your rank list, you do not want to re‑live every single interview day. You want a focused, efficient review.

Here is exactly what to do:

Filter to Programs You Would Actually Attend

- If you would be truly miserable somewhere, do not rank it. Your notes should reveal this clearly.

For Each Remaining Program (Top to Bottom)

- Glance at: total score, culture score, workload score.

- Skim: red flags / green flags.

- Check: one‑sentence summary you write for yourself (“High‑volume, rough schedule, but amazing fellowship pipeline”).

Ask a Brutally Simple Question

- For adjacent pairs of programs, ask:

“On Match Day, if I opened my email and saw Program X instead of Program Y, would I be disappointed?”

- If yes, swap them and repeat.


Your structured notes make this painless. Without them, you will be stuck flipping through vague memories and random email chains.
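The adjacent-pair question is, in effect, a manual bubble sort over your list. A minimal sketch, assuming X is the program currently ranked higher and Y the one just below it, and that your answer is supplied by a function `would_be_disappointed(got, missed)` (a hypothetical stand-in for you answering honestly):

```python
from typing import Callable, List


def settle_adjacent_pairs(
    ranking: List[str],
    would_be_disappointed: Callable[[str, str], bool],
    max_passes: int = 20,
) -> List[str]:
    """Swap adjacent programs until no pair triggers a 'yes' answer."""
    order = list(ranking)
    # max_passes guards against endless swapping if the answers are inconsistent.
    for _ in range(max_passes):
        swapped = False
        for i in range(len(order) - 1):
            higher, lower = order[i], order[i + 1]
            # "If I matched at `higher` instead of `lower`, would I be disappointed?"
            if would_be_disappointed(higher, lower):
                order[i], order[i + 1] = lower, higher
                swapped = True
        if not swapped:
            break
    return order


# Stand-in for honest answers: this applicant truly prefers B, then C, then A.
true_preference = ["B", "C", "A"]


def answer(got: str, missed: str) -> bool:
    return true_preference.index(got) > true_preference.index(missed)


print(settle_adjacent_pairs(["B", "A", "C"], answer))  # ['B', 'C', 'A']
```

If your answers keep flipping the same pair back and forth, that is a sign the two programs are effectively tied for you, and the pass limit simply stops the loop.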

Common Pitfalls You Avoid By Doing This

Let me call out the usual errors explicitly, because I have seen them every single year:

- Recency Bias – Last two interviews climb higher simply because you remember them better.

- Charisma Traps – PD or chief resident sells hard, but the underlying data (hours, attrition, fellowships) are mediocre.

- Prestige Blindness – You let rankings, name recognition, or your classmates’ opinions outweigh your own actual priorities.

- Ignoring Your Own Red Flags – You wrote “this felt toxic” in October, then in February you convince yourself you were just overreacting.

- Overvaluing Single Data Points – One resident says “it’s fine” and you discount multiple other signals of problems.

A consistent, structured post‑interview note process protects you from all of these. Not perfectly. But significantly.

Your Action Plan: Build and Use Your Post‑Interview System

You do not need more theories about “fit.” You need a protocol.

Here is your checklist:

Today (Before More Interviews)

- Build a one‑page post‑interview template with:

  - Core data snapshot

  - 6–10 scored domains

  - Facts vs impressions sections

  - Red flag / green flag boxes

- Decide your domain weights in advance.


On Every Interview Day

- Block 20–30 minutes after the day ends for note completion.

- Fill in scores and notes before you talk extensively with classmates.

Mid‑Season Checkpoint

- Enter everything into your master spreadsheet.

- Generate preliminary objective scores.

- Adjust only if you realize a domain or weight is clearly mis‑set for your goals.

Rank List Week

- Sort programs by total score.

- Apply limited gut‑based reordering (max 1–2 spots).

- Re‑check red flags and ask the “Match Day disappointment” question for adjacent programs.

Do this and your rank order list will reflect who you are, what you value, and what you actually learned on the trail—not who had the flashiest slide deck.

Open a blank document or spreadsheet right now and build your post‑interview template before your next interview. If you wait until after three interviews, you are already behind.

FAQ

1. How detailed should my post‑interview notes be? I do not want this to take an hour per program.

Aim for 15–25 minutes total per program. If you are spending more than 30 minutes, you are probably writing narrative instead of scoring structured domains and capturing key facts. Focus on:

- Filling all domain scores

- Writing 3–7 factual bullets

- Writing 3–5 impression bullets

- Listing up to 3 red flags and 3 green flags

That is enough to distinguish programs meaningfully later without burning you out.

2. What if I change my mind about what I value halfway through interview season?

You can adjust your weights once deliberately mid‑season, but do not tweak them constantly for each program. If you realize, for example, that location and schedule matter more than you thought after your first few interviews, update your weighting system once, apply it to all existing notes, and then leave it alone. The key is to avoid changing weights in response to a specific program you are trying to justify.

3. Should I share my notes or scoring system with classmates?

Share the structure if you like, but keep your specific scores private. Your tolerance for workload, preference for urban vs suburban, or need for research support may be very different from your friends’. Groupthink is real; I have watched entire friend groups talk each other into over‑ranking flashy but miserable programs. Use others for extra data points, not for voting on your priorities.