Most ERAS research sections don’t fail because of weak research. They fail because of bad wording that quietly screams “I’m exaggerating” or “I don’t understand what I did.”

You can have solid projects and still tank your credibility with a few lazy phrases.

Let’s go through the most common wording mistakes I see on ERAS research entries—and how to fix them before a PD or research-heavy faculty member reads your app and writes you off in 10 seconds.

1. Inflated Titles: “Co‑PI”, “Lead Researcher”, and Other Red Flags

If you’re not careful, your title will be the first thing that makes someone on the selection committee roll their eyes.

The classic overreach

I’ve seen all of these on ERAS:

- “Co‑PI on retrospective chart review”

- “Lead researcher on quality improvement project”

- “Principal investigator on case report”

- “Director of clinical trial data analysis”

A PGY‑4 once said during file review: “If a med student writes ‘PI’ and I know the real PI is a full professor with 200 papers, I stop trusting the rest of the application.”

That’s the problem. Overstated roles poison the well.

Why it’s a problem

- Programs know the hierarchy. Attending PI → maybe a fellow or senior resident → students. They understand how projects usually work.

- ERAS is full of puffed titles. Many programs are already suspicious. Don’t lump yourself in with the worst offenders.

- It makes you look insecure. Confident people describe what they did, not what they wish they were.

Safer, stronger alternatives

Instead of:

- “Co‑PI” → “Student researcher working under Dr. X”

- “Lead researcher” → “Primary student coordinator” or “Primary data abstractor”

- “Director of…” → “Student lead for [specific component]”

- “Principal investigator” (when you clearly were not) → Don’t. Use “Student author” or “Student investigator”.

The key is matching your title to what you actually controlled.

| Bad Wording | Better Wording |
|---|---|
| Co‑PI | Student researcher under Dr. Smith |
| Lead researcher | Primary student coordinator |
| Project director | Student lead for data collection |
| Principal investigator | Student investigator on faculty-led study |
| Research manager | Student assistant for chart review |

If your ego is fighting this, that’s your sign you were over-titling yourself.

2. Vagueness: “Involved In”, “Helped With”, “Participated In”

The other extreme from inflation is hiding behind vague verbs that make it sound like you barely showed up.

Programs are trying to answer one question:

“What exactly did this person do, and how hard did they work?”

Words like “involved” tell them nothing. Which, in their mind, usually means: “probably not much.”

Phrases that quietly downgrade you

Watch for these:

- “Involved in multiple research projects”

- “Participated in several clinical studies”

- “Helped with manuscript preparation”

- “Worked on data collection and analysis”

- “Assisted in research activities”

- “Exposed to clinical research methods”

These all sound like filler. They don’t tell me whether you spent 10 hours or 200.

Replace with concrete, testable actions

If you can’t back it up in an interview with specific stories, you’re in trouble.

Try:

- “Abstracted data from 120 charts using REDCap”

- “Conducted 35 structured patient telephone follow‑up interviews”

- “Screened clinic schedules weekly and recruited 18 participants”

- “Cleaned dataset of 800 entries and ran descriptive statistics in SPSS”

- “Drafted Introduction and Discussion sections of the manuscript”

- “Created and piloted data collection forms for QI project”

That’s the level of detail that sounds real to a reviewer who’s actually done research work.

Here’s the test: if I asked, “What did that look like day-to-day?” and you’d struggle to answer, your wording is too vague.

3. Misusing “Author”, “First Author”, and “Under Review”

Here’s where people quietly destroy their credibility: playing games with authorship language.

The “first author” trap

If you list:

- “First author – Manuscript in progress”

- “First author – Data collection phase”

- “First author – Working on draft”

…you’re asking for trouble.

Faculty know how often “first author” musical chairs happens right before submission. Until the paper is submitted with a finalized author list, that status is fragile.

Do not use “first author” lightly, especially for:

- Things not submitted

- Vague works “in progress”

- Projects where the fellow or resident is likely to get bumped up

Better:

- “Anticipated first author – manuscript in preparation” only if your mentor explicitly confirmed that role

- Even better: just “Primary student author,” then explain in the description what you did

“Under review” vs “Accepted” vs “Published”

Another pet peeve on ERAS:

- Calling something “accepted” when it’s only “submitted”

- Listing “In press” when it’s barely past initial submission

- Labeling “under review” on something that’s still a Word document on your laptop

You will be asked: “Is this on PubMed yet?” or “Do you have the acceptance email?”

If the answer is no, you’re in dangerous territory.

Stick to accurate categories:

- Published – Has a DOI, PubMed ID, or official journal page

- Accepted – You have formal acceptance from the journal/conference

- Submitted – Manuscript truly submitted to a real journal, not just “we plan to submit”

- In preparation – You’re actively working on a draft that your mentor knows about

Don’t collapse “in preparation” and “under review” into some fake middle ground.
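The four categories above amount to a small decision rule: pick the strongest label your evidence supports and nothing stronger. As a rough sketch only, here is one way to write that rule in Python. The function name `honest_status` and its boolean parameters are hypothetical, invented for illustration; they are not part of ERAS or any real tool.

```python
def honest_status(has_doi_or_pmid: bool,
                  has_acceptance_notice: bool,
                  submitted_to_journal: bool,
                  mentor_knows_of_draft: bool) -> str:
    """Return the strongest status label the evidence supports.

    All parameter names are hypothetical; they mirror the four
    categories in the list above, checked from strongest to weakest.
    """
    if has_doi_or_pmid:
        return "Published"
    if has_acceptance_notice:
        return "Accepted"
    if submitted_to_journal:
        return "Submitted"
    if mentor_knows_of_draft:
        return "In preparation"
    # Nothing verifiable yet: do not attach a manuscript status at all.
    return "No manuscript status"


# A draft your mentor knows about, with nothing submitted, is
# "In preparation" and not "under review".
print(honest_status(False, False, False, True))
```

The point of the ordering is that each label needs its own piece of evidence: without an acceptance notice, a submitted paper stays "Submitted" no matter how confident you feel about it.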

4. Hiding Weakness Behind Buzzwords

This is where applicants try to sound “academic” and instead sound like they copied a grant abstract they barely understood.

Words that are chronically abused:

- “Robust”

- “Novel”

- “High‑impact”

- “Groundbreaking”

- “Innovative”

- “Multicenter study” (when it’s two clinics across the street)

- “Randomized” (for anything that is not actually randomized)

- “Prospective cohort” (for retrospective chart reviews)

I’ve heard faculty mutter in review meetings: “If they call a small QI project ‘high impact’ I already know they haven’t read a single real outcomes paper.”

Fix the impulse to oversell

You do not impress anyone by calling your work “groundbreaking.”

You impress them by:

- Being accurate about the design

- Stating the question clearly

- Describing your role briefly and precisely

Example of bad vs better:

- Bad: “Groundbreaking, high‑impact, multicenter prospective study looking at outcomes in…”

- Better: “Faculty‑led prospective study at two community clinics evaluating 30‑day readmission rates after implementing a new discharge checklist. I enrolled patients and performed follow‑up phone calls.”

That second version isn’t flashy. It’s credible. And credibility wins.

5. Sloppy Study Design Language: Using Terms You Don’t Understand

You want to look like you get research. Mislabeling the study design does the exact opposite.

Common offenders:

- Calling a chart review “prospective”

- Calling “before-and-after” QI “randomized”

- Labeling survey projects “clinical trials”

- Saying “double-blind” when only the patients were blinded

If a program director in a research-heavy department spots this, their internal translation is: “either careless or doesn’t understand basic methods.”

Minimum standard: Get the design right

You don’t need to be an epidemiologist. But you do need to know:

- Retrospective vs prospective

- Observational vs interventional

- Randomized vs non‑randomized

- Single-center vs multicenter

- Case report vs case series vs cohort

If you’re not sure what your study is, ask your mentor and fix the ERAS wording.

6. “Padding” the Research Section with Empty Entries

You can absolutely tank an otherwise decent application by filling ERAS with fake productivity.

I’ve seen:

- 7 nearly identical “chart review projects” that never left the Excel stage

- “Research assistant” entries where the description is basically: “Attended meetings”

- 5 variations of the same QI project, split into entries like “idea development,” “data collection,” “analysis,” and “presentation”

That doesn’t make you look productive. It makes you look desperate.

Signs you’re padding:

- You’re breaking one project into multiple ERAS entries just to increase the count

- Your descriptions reuse the same exact phrases

- You can’t clearly explain what is different about each line item

- You’re counting “being in the room” as “research experience”

Programs are much more impressed by 2–3 meaningful projects described concretely than by 8–10 vague, nearly identical bullet points.

If you wouldn’t be comfortable being grilled about an entry for 5 minutes in an interview, don’t list it as its own “project.”

7. Overclaiming Impact and Outcomes

Another common credibility killer: taking a small or unfinished project and writing about it like it changed national guidelines.

Watch for:

- “Significantly improved patient outcomes” (with no data)

- “Led to institutional protocol changes” (when the protocol is still being drafted)

- “Decreased readmission rates” (based on a tiny pilot with no proper analysis)

- “Improved patient satisfaction” (from 10 surveys and no stats)

Remember: you’re often writing for people who do this for a living. They know what real impact looks like.

Better wording for early or small projects

Try:

- “Pilot project assessing feasibility of…”

- “Preliminary data suggest…”

- “Contributed to early-phase QI initiative focused on…”

- “Findings will inform future larger-scale work”

or if you really don’t have outcomes yet:

- “Data collection underway; analysis pending”

It’s not weakness to admit the project is early. It’s honesty. That’s far more attractive than inflated “impact” statements you can’t defend.

8. Copy-Pasting Abstracts Into ERAS Descriptions

Let me be blunt: dropping a dense, jargon-filled abstract into your ERAS description is lazy and it backfires.

I’ve watched faculty scroll past whole entries because they looked like this:

“This randomized, controlled, double-blind, multicenter trial assessed the efficacy and safety of X in patients with Y. Participants were stratified based on…”

This tells me exactly nothing about you.

Two separate problems here:

- It sounds like you don’t actually understand the project well enough to summarize it.

- It hides your role completely.

What to write instead

You have two goals in the “Description”:

- One or two plain-English lines on what the project asked or did

- One or two specific lines on what you personally contributed

For example:

- “Prospective study evaluating the effect of an early discharge checklist on 30‑day readmission rates in heart failure patients.”

- “I screened clinic schedules, consented patients, performed follow-up calls at 7 and 30 days, and entered data into REDCap.”

That’s it. No need to reproduce the Methods section of JAMA.

9. Sloppy Timelines and Status Confusion

Another subtle way people undermine themselves: inconsistent or impossible-looking timelines.

Things that make reviewers suspicious:

- Listing a study as “completed” with data collection ending 3 months ago—but also claiming a published paper already exists from it

- Saying “manuscript in preparation” for a project that supposedly finished 4 years ago

- Having your role end before the project even starts (yes, I’ve seen this)

- Leaving everything as “present” because you’re too lazy to update end dates

Programs are trying to figure out:

- Did you actually stick with things?

- Did any of these projects actually go anywhere?

- Are you current and honest about where things stand?

Sloppy dates make it look like you’re not paying attention or, worse, fudging.

Clean it up

For each project, ask:

- When did I actually work on this?

- What is the real status today?

- Does the timeline make sense with when things were submitted/presented/published?

If something stalled, you don’t have to hide it. But don’t pretend it’s ongoing if you haven’t touched it since M2 year.
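If you keep your entries in a spreadsheet, the date questions above are easy to check mechanically. The following is a minimal sketch in Python, assuming you track a few dates per project. The function `timeline_problems` and all of its parameter names are hypothetical, and the two checks are illustrative heuristics drawn from the examples in this section, not rules any program applies.

```python
from datetime import date
from typing import List, Optional


def timeline_problems(project_start: date,
                      role_end: Optional[date],
                      data_collection_end: Optional[date] = None,
                      published_on: Optional[date] = None) -> List[str]:
    """Flag date combinations a reviewer would find implausible.

    role_end=None means the entry is still listed as "present".
    All names here are hypothetical, for illustration only.
    """
    problems = []
    # Your role cannot end before the project even starts.
    if role_end is not None and role_end < project_start:
        problems.append("role ends before the project starts")
    # Assumed heuristic: a paper dated before data collection ended
    # needs an explanation (or a corrected date).
    if (published_on is not None and data_collection_end is not None
            and published_on < data_collection_end):
        problems.append("publication predates end of data collection")
    return problems


issues = timeline_problems(
    project_start=date(2023, 1, 10),
    role_end=date(2022, 12, 15),
)
print(issues)
```

An empty list does not mean the entry is fine. It only means the dates do not contradict each other; whether the status is current and honest is still your call.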

10. Weak or Cringe Writing Style in Descriptions

You’re applying for a professional role. Your ERAS entries shouldn’t read like a motivational poster or a college club flyer.

Phrases that make seasoned reviewers wince:

- “I gained invaluable experience…”

- “This taught me the importance of teamwork and leadership.”

- “This project allowed me to merge my passion for research with my clinical interests.”

- “An amazing opportunity to give back to the community.”

That’s personal statement fluff, not a research description.

Strip the fluff, keep the substance

Your research description is not the place to:

- Talk about how “rewarding” it was

- Explain how it “confirmed your desire to go into X”

- Insert your “passion” for the 19th time

Focus on:

- Objective project details

- Your actual responsibilities

- Concrete outcomes: abstracts, posters, talks, publications

You can always connect the dots during interviews when they ask, “What did you learn from this project?”

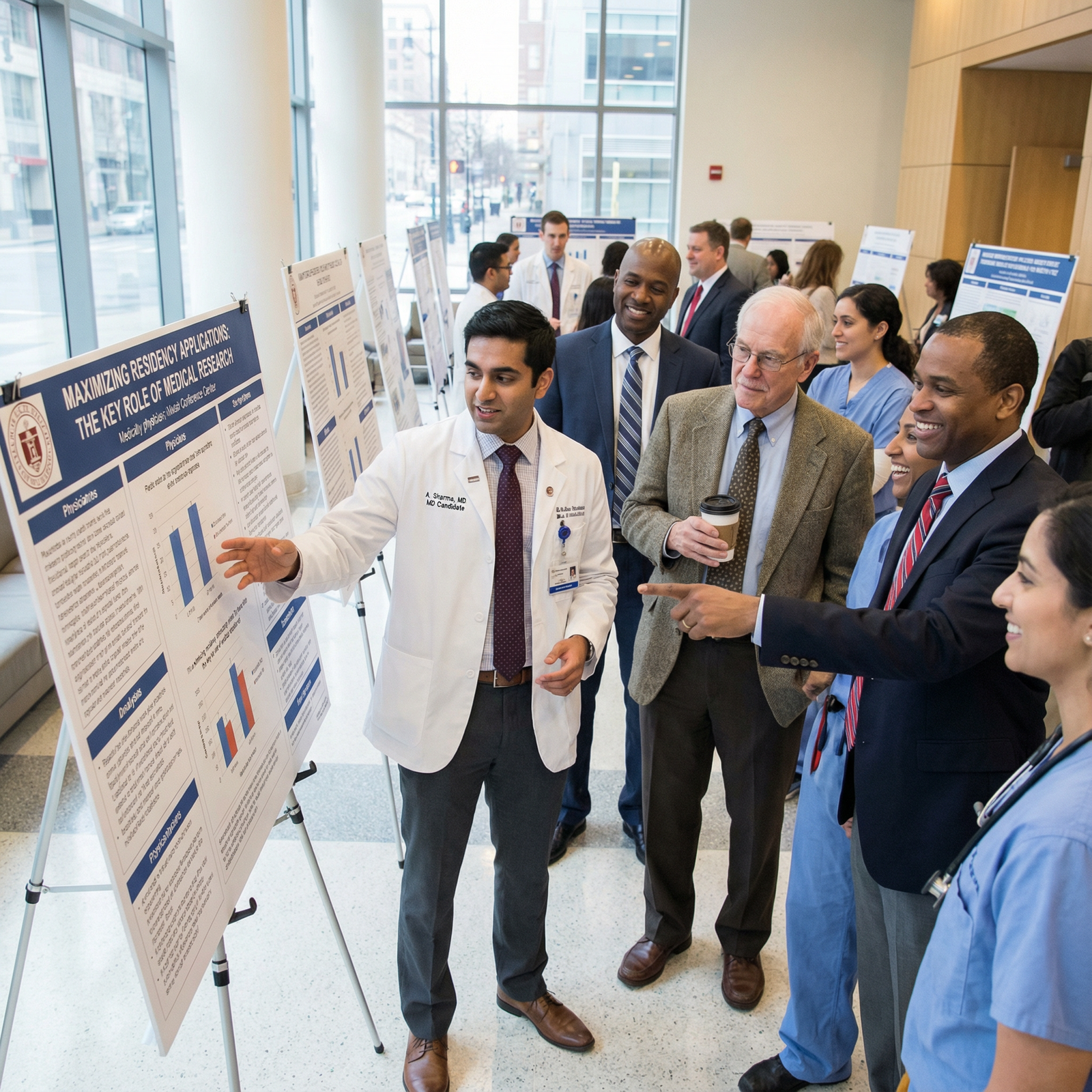

11. Ignoring the “Can I Defend This in an Interview?” Test

This is the final and most important filter.

Anything you write on ERAS might become an interview question. If your wording even slightly misrepresents reality, you’ll feel it the second they say:

- “Tell me more about this study—what was your role?”

- “What were your main findings?”

- “Why did you choose that study design?”

- “What changed at your institution because of this QI project?”

If you find yourself rehearsing complicated justifications in your head to defend your wording, that’s your brain telling you the entry isn’t honest or clear enough.

Clean entries pass this test easily:

- You can explain the project in 2–3 plain-English sentences

- You can list your responsibilities without squirming

- You’re not praying they don’t ask about “first author” or “randomized” or “multicenter”

Before you finalize, read every research entry and ask:

“Would I be completely comfortable answering detailed questions about this tomorrow?”

If the answer is anything but yes, fix the wording.

12. A Simple Framework to Rewrite Your Entries Safely

When you’re correcting your ERAS research section, use this structure for each entry:

Accurate Project Label

- “Retrospective chart review on…”

- “Prospective QI project on…”

- “Case report describing…”

One-Sentence Study Aim in Plain English

- “We examined whether X was associated with Y in Z population.”

Your Role (Specific and Concrete)

- “I [collected data / screened charts / ran statistical tests / created survey / drafted introduction, etc.].”

Realistic Status and Outcomes

- “Presented as poster at [Conference, Year]; manuscript in preparation.”

- “Abstract accepted to [Meeting].”

- “Data collection ongoing; aim to submit abstract to [Meeting].”

Leave out the ego-boosting fluff. Put in the boring-but-true details. That’s exactly what faculty respect.

| Category | Value |
|---|---|
| Inflated Titles | 30 |
| Vague Roles | 25 |
| Buzzwords | 15 |
| Status Games | 20 |
| Design Errors | 10 |

| Step | Description |
|---|---|
| Step 1 | Start Entry |
| Step 2 | Name Project Type Correctly |
| Step 3 | Write 1-line Aim in Plain English |
| Step 4 | Describe Your Role Specifically |
| Step 5 | Assign Honest Status |
| Step 6 | Comfortable Defending in Interview? If not, fix the wording |
| Step 7 | Finalize Entry |

13. When in Doubt, Understate—You’ll Win in the Room

Here’s the pattern I’ve seen over and over:

The applicant who slightly underplays their role? Often ends up impressing the interviewer when they dig in: “Oh, you actually did a lot more than you wrote.”

The applicant who oversells or gets cute with wording? Loses trust fast. Once a reviewer doubts one entry, they start doubting everything.

Your goal is not to look like a research superstar on paper. Your goal is to look credible, consistent, and honest—so that when someone who actually does research reads your ERAS, they think:

“This person knows what they did. They didn’t inflate. I’d trust them on my team.”

That’s the win.

Key Takeaways

- Stop inflating. Drop fake titles, exaggerated impact, and buzzwords. Call your role and your study design what they actually are.

- Be specific, not vague. Replace “involved in research” and “helped with” with concrete tasks and clear contributions.

- Write for someone who’ll question you. Assume a skeptical faculty member will ask about every word. If you can’t defend it comfortably in an interview, rewrite it now.